
May 31, 2023

How Autonomous Vehicles Work: the Self-Driving Stack

To celebrate Autonomous Vehicle Day, we’re going back to basics and looking at the foundational technology that makes autonomous driving possible.

Mobileye develops a full range of technologies to enable autonomous driving on all road types, from highways to city streets.

In one of the most popular of our unedited self-driving videos, an autonomous vehicle (AV) takes on the task of driving through one of the most challenging urban driving environments for any driver—human or otherwise: Jerusalem.

Jerusalem is a 5,000-year-old labyrinth of unplanned ancient pathways that have transformed into modern roadways. Traffic can be unpredictable and intense, presenting a completely different driving experience from the planned organization of a modern city.

Autonomous Vehicle Day offers a perfect occasion to both appreciate and examine the enormity of the technological achievement involved in driving autonomously in such a difficult environment.

The “Brain” of a Self-Driving Car

“A self-driving car must work flawlessly and be able to navigate through obstacles and other road users. To do that, it needs a very smart brain,” explains Mobileye’s Chief Technology Officer, Prof. Shai Shalev-Shwartz.

That “smart brain” in an AV, consisting of software running on powerful microprocessors, is called the “self-driving stack”. This is what allows an AV to successfully complete such delicate maneuvers as making natural unprotected left turns and veering slightly to avoid an open door (to name just a couple).

Just as we can divide the human brain into various functional layers, we can also divide the brain of a self-driving car into the following functional layers:

- Sensing

- Perception

- Localization

- Planning

- Control

How a Self-Driving Car Senses

It Starts with a Camera

Self-driving cars are equipped with a variety of sensors that serve as the “eyes” of the vehicle and comprise the sensing layer. Not surprisingly, the main sensors that AVs use are the sensors that are most similar to the eyes of a human driver—cameras.

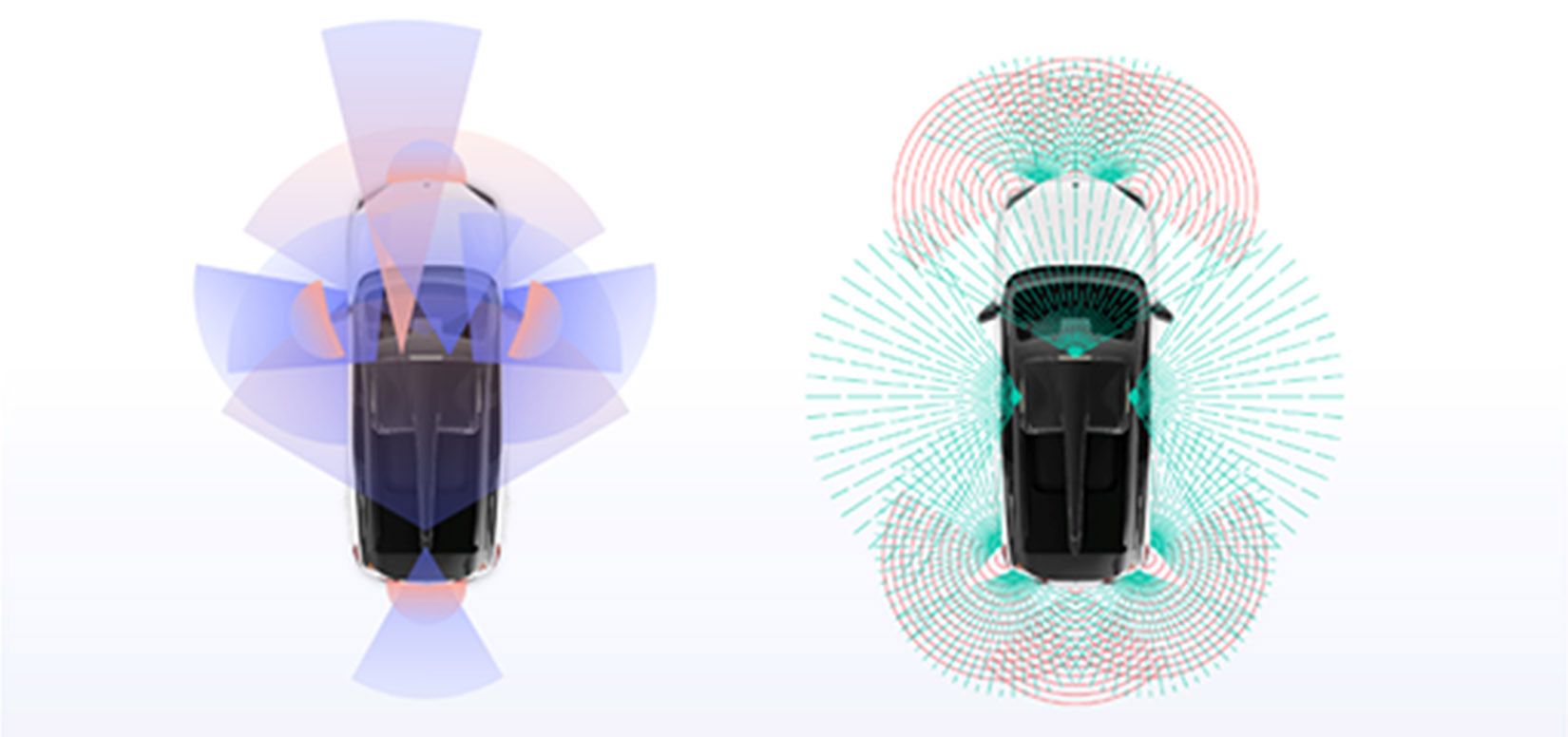

Cameras of various resolutions, sizes, and angles are typically mounted on the windshield, bumpers, and side mirrors. Working together, the cameras are able to capture a 360° surround view of the vehicle.

Cameras are superior to other sensors in detecting colors and shapes, so they are good at detecting lane markers, road signs, other vehicles, and various other objects in the driving environment.

Another Layer of Accuracy

Of course, cameras have their limitations—especially in poor lighting or weather conditions. That’s why the cameras in AVs are supplemented with inputs from other sensors, such as radar and lidar, to provide a more comprehensive view of the vehicle’s surroundings.

Unlike cameras, radar works reliably in low-visibility conditions such as rain, snow, and fog. However, radar is not good at modeling the precise shape of an object.

Lidar, an acronym for Light Detection and Ranging, is a technology that is similar to radar, but uses laser light pulses instead of radio waves to measure distance and create a 3D map of its environment.

Putting it All Together

The process of combining inputs from all sensors (cameras, radar, and lidar) to create an image of the world is called “sensor fusion”.

There are two main types of sensor fusion, early and late, named for the point at which data from the various sensors is combined. In early sensor fusion, raw data is fused first and object detection algorithms are then applied to the combined data. In late sensor fusion, object detection algorithms are applied to each sensor's data separately, and the resulting 3D maps are then fused.

While early sensor fusion is the industry standard, Mobileye’s self-driving system uses late sensor fusion. We also employ sensor redundancy to create independent models of the road environment that can each provide the perceptual information needed to drive the AV if one system fails.
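As a rough illustration of the late-fusion idea, the sketch below merges object lists that were detected independently for each sensor. It is a minimal example under simplifying assumptions, not Mobileye's implementation: the `Detection` type, the `late_fusion` function, the 1.5 m matching distance, and the confidence-weighted averaging are all hypothetical choices made for this example.

```python
from dataclasses import dataclass
from math import hypot


@dataclass
class Detection:
    sensor: str        # which sensor produced this detection
    label: str         # object class reported by that sensor's detector
    x: float           # metres ahead of the vehicle
    y: float           # metres to the left of the vehicle
    confidence: float  # detector confidence, assumed to be > 0


def late_fusion(per_sensor_detections, max_dist=1.5):
    """Fuse object lists that were produced independently for each sensor.

    Detections with the same label that lie within max_dist metres of a
    group's first detection are treated as the same physical object.
    """
    groups = []
    for detections in per_sensor_detections:
        for det in detections:
            for group in groups:
                ref = group[0]
                close = hypot(det.x - ref.x, det.y - ref.y) <= max_dist
                if det.label == ref.label and close:
                    group.append(det)
                    break
            else:
                # No existing group matched, so this is a new object.
                groups.append([det])

    fused = []
    for group in groups:
        weight = sum(d.confidence for d in group)
        fused.append({
            "label": group[0].label,
            # Confidence-weighted average of the reported positions.
            "x": sum(d.x * d.confidence for d in group) / weight,
            "y": sum(d.y * d.confidence for d in group) / weight,
            "sensors": sorted({d.sensor for d in group}),
        })
    return fused


camera = [Detection("camera", "car", 10.0, 0.0, 0.9)]
radar = [Detection("radar", "car", 10.4, 0.2, 0.6)]
lidar = [Detection("lidar", "pedestrian", 5.0, 3.0, 0.8)]
objects = late_fusion([camera, radar, lidar])
```

Here the camera and radar detections of the car end up in one fused object, while the pedestrian seen only by lidar stays separate. An early-fusion pipeline would instead combine the raw sensor data before running any detector.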

Making Sense of it All

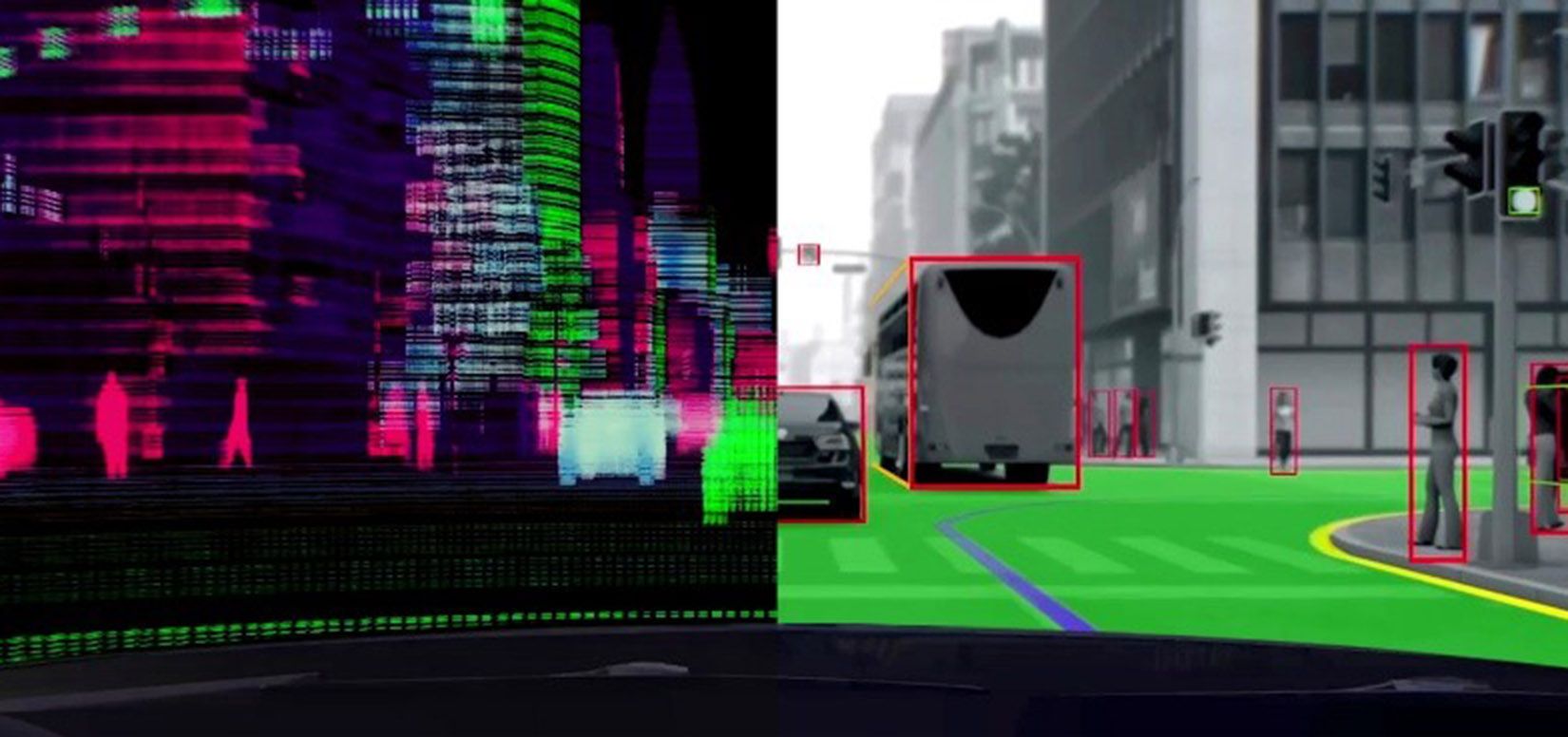

Once sensor data has been received, the car’s onboard computer needs to make sense of it. Is that a billboard or a truck? Where is the edge of the road?

A whole subfield of artificial intelligence, called computer vision, is dedicated to this task. This has been one of the main areas of focus at Mobileye for over two decades.

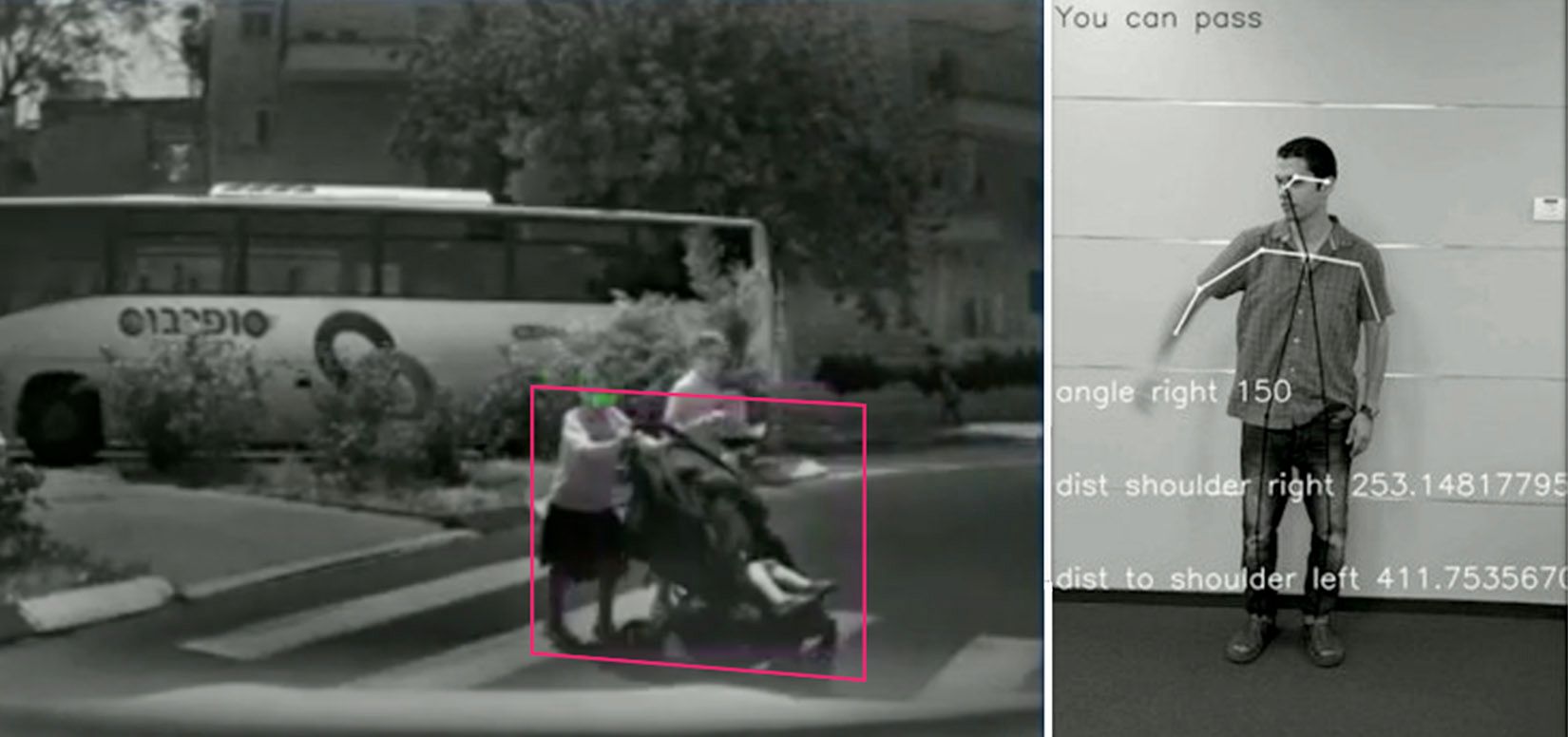

Computer vision algorithms—which include object recognition, pattern recognition, clustering algorithms, and more—are applied to the data, allowing the car to detect lane markings, street signs, traffic lights, and other objects in the environment.

Custom algorithms are even developed to detect such situations as open car doors and the hand signals of traffic police.

Precisely Locating Itself on a Map

Localization, another essential layer of the self-driving stack, is the process of determining a vehicle's precise location relative to its surroundings. Without it, the car cannot make informed driving decisions or plan a trip.

GPS is commonly used for initial localization, but its accuracy is limited, especially in urban environments where tall buildings can interfere with satellite signals. When safety is critical, localization has to be accurate to within centimeters, not meters, so inertial sensors, cameras, lidar, and radar are used to provide more precise location information.

The use of high-definition maps is another important step in the process of localization because they contain exact information about the location of static features of the road such as curbs and pedestrian crossings. This allows the vehicle to more accurately determine its position relative to the road and “fill in the gaps” where sensor data may be incomplete.

Once it obtains an accurate map of the road environment, the AV uses various algorithms to determine its exact location and orientation. The algorithms take into account factors such as the car's velocity, steering angle, and other sensor readings to calculate the vehicle's precise location.
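To make the role of velocity and steering angle concrete, here is a minimal dead-reckoning sketch based on the textbook kinematic bicycle model. It is only one ingredient a localization system might use and is not a description of Mobileye's algorithms. The function name `dead_reckon`, the 2.8 m wheelbase, and the time step are assumptions chosen for illustration.

```python
from math import cos, sin, tan


def dead_reckon(x, y, heading, speed, steering_angle, wheelbase, dt):
    """Advance a pose by one time step with a kinematic bicycle model.

    x, y            position in metres
    heading         orientation in radians (0 = along the x axis)
    speed           forward speed in m/s
    steering_angle  front-wheel angle in radians (positive = left)
    wheelbase       distance between the axles in metres
    dt              time step in seconds
    """
    new_x = x + speed * cos(heading) * dt
    new_y = y + speed * sin(heading) * dt
    new_heading = heading + speed / wheelbase * tan(steering_angle) * dt
    return new_x, new_y, new_heading


# Drive for one second at 10 m/s with a slight left steering input.
pose = (0.0, 0.0, 0.0)
for _ in range(10):
    pose = dead_reckon(*pose, speed=10.0, steering_angle=0.05,
                       wheelbase=2.8, dt=0.1)
```

Estimates like this drift over time, which is why they are typically corrected against map features and other sensor readings rather than used on their own.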

Mobileye’s approach to mapping, Road Experience Management™ (or REM™), does not rely on a static HD map. Instead, it uses anonymous data crowdsourced from around the world, resulting in dynamic, continuously updated maps.

The maps generated by Mobileye’s REM technology are rich in map semantics, which means that our mapping technology goes an important step further than most mapping technologies: it also captures how drivers use roads and the environment around them. For example, using REM, a vehicle is not only able to perceive a traffic light, but also “understands” which lane (or lanes) it applies to.

The Planning Layer of Autonomous Driving

If you are a self-driving car and you know what the world looks like and where you are exactly, you’ve come a long way. Now, you just need to make decisions based on this world model and create a plan to get to your destination. That’s where the next layer of the self-driving stack comes in.

Planning a trip starts with planning a route from point A to point B. In AVs, however, the planning layer consists of many other types of planning as well, including motion planning, decision-making, collision avoidance, and behavioral planning.

For instance, collision avoidance algorithms ensure the vehicle avoids moving and static objects on the roadway to keep everyone safe. Other algorithms that are part of the planning layer ensure that occupants perceive the trip as safe and comfortable (i.e., no sudden accelerations or braking) and that the car drives according to traffic laws.
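One simple way to express the "no sudden accelerations or braking" requirement is to bound the acceleration and jerk (the rate of change of acceleration) of a planned speed profile. The sketch below is a hypothetical check written for this article. The function name `is_comfortable` and the limits of 2.0 m/s² and 2.0 m/s³ are illustrative assumptions, not values taken from any production planner.

```python
def is_comfortable(speeds, dt, max_accel=2.0, max_jerk=2.0):
    """Return True if a planned speed profile stays within comfort limits.

    speeds     planned speeds in m/s, sampled every dt seconds
    max_accel  largest allowed |acceleration| in m/s^2 (assumed value)
    max_jerk   largest allowed |jerk| in m/s^3 (assumed value)
    """
    # Finite differences approximate acceleration and jerk.
    accels = [(b - a) / dt for a, b in zip(speeds, speeds[1:])]
    jerks = [(b - a) / dt for a, b in zip(accels, accels[1:])]
    return (all(abs(a) <= max_accel for a in accels)
            and all(abs(j) <= max_jerk for j in jerks))


gentle = is_comfortable([10.0, 10.1, 10.2, 10.3], dt=0.1)
harsh = is_comfortable([10.0, 9.0, 8.0], dt=0.1)
```

The first profile accelerates at roughly 1 m/s² and passes the check. The second brakes at 10 m/s² and fails it.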

Motion planning algorithms make decisions about such maneuvers as lane changes and merges. The AV also needs to take into account what objects in the roadway might do, which is referred to as behavioral planning.

Mobileye has added an extra layer of safety to the planning process with Responsibility-Sensitive Safety™ (or RSS™). Our open mathematical model for AV safety and the basis for our driving policy, RSS is based on transparent and verifiable rules for balancing cautious and assertive driving.
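One of the rules in the published RSS model is a minimum safe following distance between a rear vehicle and the vehicle in front of it. It assumes the rear vehicle may accelerate during its response time before braking, while the front vehicle may brake as hard as it can. The sketch below encodes that formula as we understand it from the public RSS paper. The function and parameter names are our own, and the numeric values in the usage example are illustrative assumptions rather than recommended settings.

```python
def rss_min_following_distance(v_rear, v_front, response_time,
                               a_max_accel, a_min_brake, a_max_brake):
    """Minimum safe longitudinal gap in metres, following the RSS rule.

    v_rear         speed of the rear (following) vehicle, m/s
    v_front        speed of the front vehicle, m/s
    response_time  rear vehicle's response time, s
    a_max_accel    max acceleration of the rear vehicle while responding, m/s^2
    a_min_brake    minimum braking the rear vehicle is assumed to apply, m/s^2
    a_max_brake    maximum braking the front vehicle could apply, m/s^2
    """
    # Speed of the rear vehicle at the end of its response time.
    v_rear_after = v_rear + response_time * a_max_accel
    distance = (v_rear * response_time
                + 0.5 * a_max_accel * response_time ** 2
                + v_rear_after ** 2 / (2 * a_min_brake)
                - v_front ** 2 / (2 * a_max_brake))
    # A negative result means any gap is safe, so clamp at zero.
    return max(distance, 0.0)


gap = rss_min_following_distance(v_rear=20.0, v_front=20.0,
                                 response_time=1.0, a_max_accel=2.0,
                                 a_min_brake=4.0, a_max_brake=8.0)
```

With these example values, two vehicles traveling at 20 m/s need a gap of 56.5 m. A planner can compare the actual gap against this value to decide whether a proper response, such as braking, is required.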

Controlling the Car

Once the car’s computer has determined how to move safely based on sensor information, perception, and planning, it needs to control the vehicle in the same way that a human driver would. Just as a human brain controls the movements of our limbs to allow us to navigate our world, an AV’s computer controls the brakes, steering wheel, accelerator, and other components.
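A classic building block for this kind of control is the PID (proportional-integral-derivative) controller, which turns the difference between a target value and a measured value into an actuator command. The sketch below uses one to track a target speed in a toy simulation. It is a generic textbook example, not a description of Mobileye's control layer. The class name, the gains, and the acceleration limits are all assumptions.

```python
class PIDController:
    """Textbook PID controller producing a command from a tracking error."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, target, measured, dt):
        error = target - measured
        self.integral += error * dt
        # No derivative term on the first call, since there is no history yet.
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate_speed(controller, start, target, dt=0.1, steps=300,
                   min_accel=-3.0, max_accel=2.0):
    """Toy model: the command is an acceleration, clamped to assumed limits."""
    speed = start
    for _ in range(steps):
        accel = controller.step(target, speed, dt)
        accel = max(min_accel, min(max_accel, accel))
        speed += accel * dt
    return speed


final_speed = simulate_speed(PIDController(kp=0.5, ki=0.0, kd=0.0),
                             start=10.0, target=15.0)
```

In this simulation the speed climbs from 10 m/s toward the 15 m/s target without exceeding the assumed acceleration limit. A real vehicle would map such commands onto throttle, brake, and steering actuators with far more detailed dynamics.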

In celebration of AV Day, we've delved into some of the inner workings of this life-changing technology. From sensors and algorithms to crowd-sourced mapping and behavioral planning, self-driving cars have come a long way.

Imagine a future where road accidents are a thing of the past and time spent driving is transformed into productivity, leisure, and family time.

At Mobileye, this vision is fast becoming reality, and we’re excited about what that means for the future of mobility.
