April 16, 2023

Our Camera-First Approach to Enhancing Mobility

For capability and cost, cameras can’t be beat. That’s why Mobileye embraces a camera-first approach towards sensing for both assisted and autonomous driving.

Mobileye pioneered the use of a single camera to enable advanced driver-assistance systems.

There are many things we take for granted, but when it comes to cars at least, things weren’t that way until someone made them so.

Cars today are a fact of life, for example, but they didn’t exist before Gottlieb Daimler and Carl Benz effectively invented them. By now we see cars everywhere, but they weren’t mass-produced at scale until Henry Ford introduced the moving assembly line. These days almost all cars come with some degree of advanced driver-assistance system, and most such systems are based on cameras, but that too wasn’t the case until Mobileye pioneered the concept. And that camera-first approach remains at the heart of everything we do.

Historical Vision

When Mobileye began nearly a quarter-century ago, driver-assistance technology was still in its infancy (and autonomous driving, by extension, was little more than a pipe dream). Some automakers were starting to introduce basic ADAS features, but the industry had yet to settle on what type of sensors to use for them.

One major manufacturer was working on a camera system for measuring the distance to the vehicle ahead, and invited a computer science professor named Amnon Shashua to provide his input. Shashua showed that he could do with just one camera what they were trying to do with two. That successful demonstration led to his founding Mobileye and paved the way for advanced driver-assistance systems that could (and indeed would) be deployed at a scale great enough to revolutionize automotive safety.

That automaker called upon Shashua because of the expertise for which he was already becoming known in the emerging field of computer vision. This form of artificial intelligence employs highly sophisticated algorithms to interpret the inputs from simple cameras and so understand the surroundings they capture.

To this day, that same core competence still drives us here at Mobileye, where teams of engineers and developers are constantly honing our computer vision algorithms to better identify a wider range of parameters in the driving environment, and developing the chips to put that technology into cars and out on the road.

So why do we still embrace a camera-first approach above all other types of sensors? For two main reasons, as outlined by our CTO, Prof. Shai Shalev-Shwartz.

Driving by Vision

As drivers, we make use of nearly all our senses when behind the wheel. We feel how the car interacts with the road, hear other cars coming and horns honking, and can even smell if something has gone wrong (like an engine overheating). But no sense is as essential to us as sight, and cameras are the sensors that function most like the human eye.

Cameras can “see” the parameters of the road surface and the various obstacles, hazards, and other road users around us, including other cars, trucks, bikes, and pedestrians. Cameras also provide rich semantic understanding of key details in the driving environment, such as identifying lane markings, recognizing colors on traffic lights, and even reading the text on two-dimensional traffic signs.

For their part, radar and lidar deliver certain key advantages over cameras, such as detecting objects beyond line of sight and in lower-lighting or bad weather conditions. But they cannot replace the essential functions that cameras perform.

Made to Scale

There’s a huge range in the prices of different types of sensors. Lidars, for example, are expensive, but cameras are relatively affordable. Building our technology around low-cost cameras gives us the rare opportunity to increase both the availability and the performance of our solutions at the same time.

For the mass market, the low cost of camera sensors enables integration of our driver-assistance technology into more vehicles (without disproportionately affecting their purchase prices). Indeed, to date, more than 135 million vehicles have been equipped with our technologies, and that number is growing at a quickening pace.

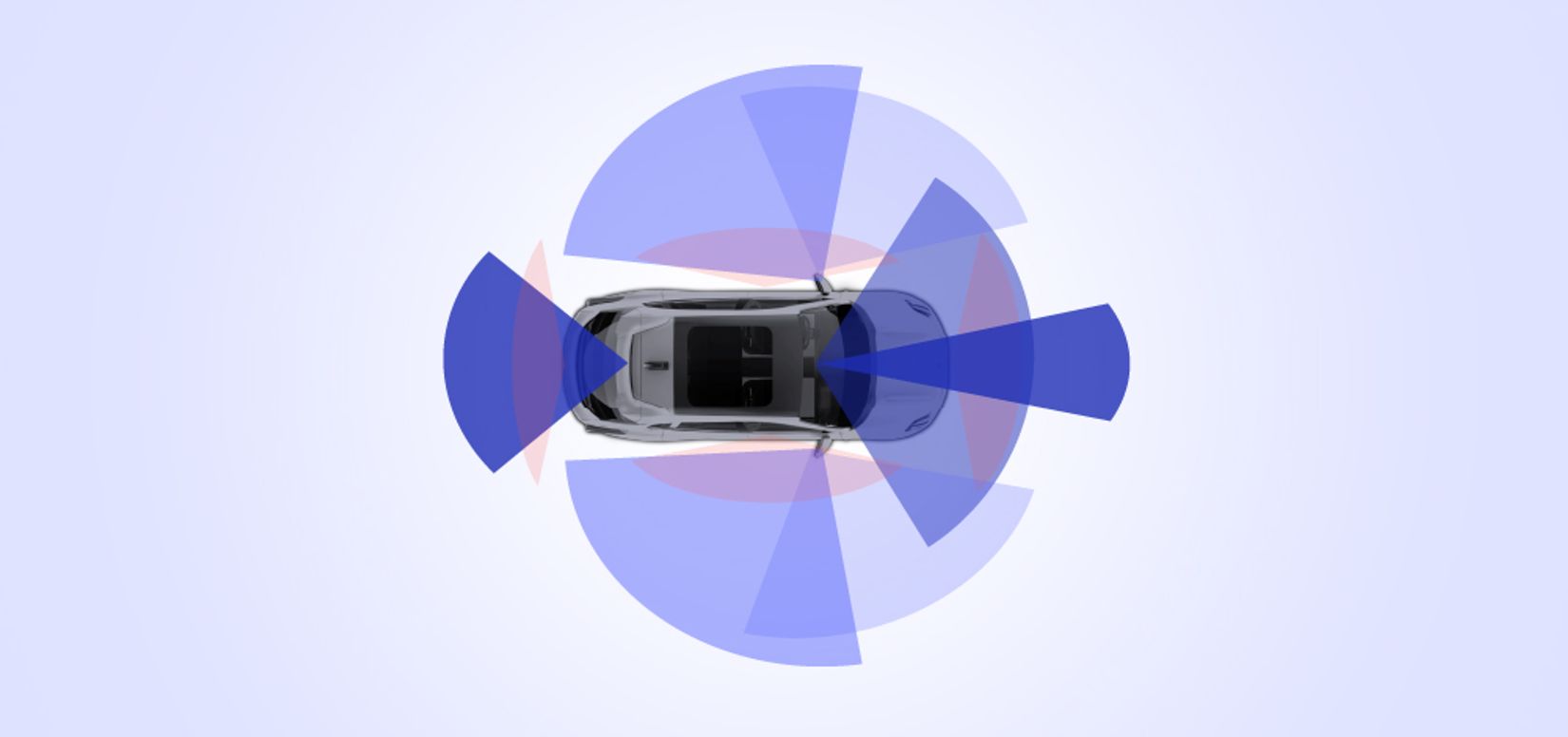

For higher-end vehicles, the low cost of camera sensors means that more of them can be cost-effectively integrated into advanced solutions. For example, Mobileye SuperVision™ (our eyes-on/hands-off solution) incorporates 11 cameras to provide 360-degree surround coverage.

Mobileye Chauffeur™ (our eyes-off/hands-off solution for consumer autonomous vehicles) and Mobileye Drive™ (our driverless solution for autonomous commercial vehicles) are similarly being developed under our camera-first approach. These solutions, however, also feature a secondary, independent radar/lidar suite to back up the cameras (under our True Redundancy™ approach to sensing).

Even our REM™ mapping technology owes its crowdsourcing capabilities to the proliferation of our camera-based solutions. And that mapping data is itself being implemented into a range of applications, from our turnkey systems for self-driving vehicles through to human-driven cars augmented by our Cloud-Enhanced Driver-Assistance™ solution.

Mobileye will, of course, continue developing additional inputs like the maps and active sensors mentioned above. And there’s no telling what novel developments might emerge in the future. But between their inherent capability and the scale afforded by their low cost, cameras have always been – and remain still – at the heart of everything we do.
