
June 14, 2021

The Next Generation of Active Sensors for Autonomous Driving

Mobileye revolutionized camera-based computer vision. Now we’re doing the same with the development of our own cutting-edge radar and LiDAR sensors.

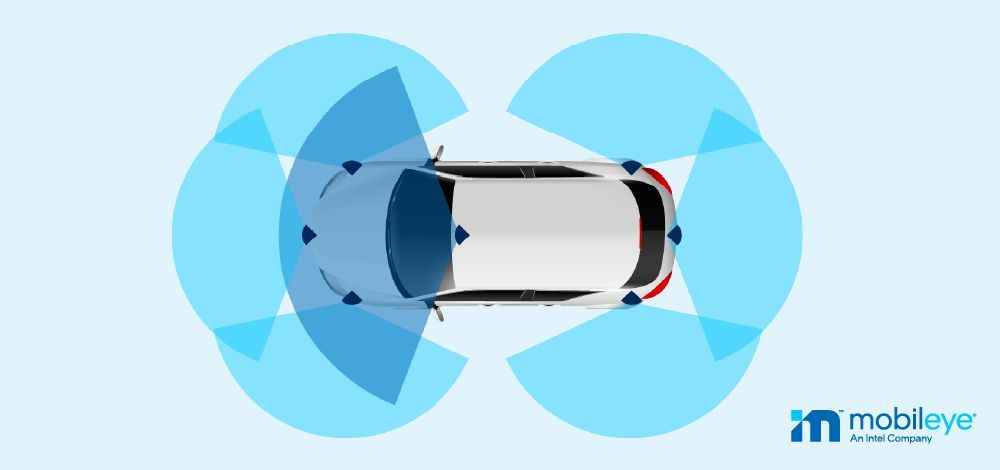

Radar and LiDAR coverage for Mobileye’s self-driving system

Mobileye was founded on computer-vision technology, and we’re proud of what we’ve achieved through the combination of simple cameras and advanced algorithms. But if autonomous vehicles are to take complete control from human drivers, they’ll require multiple types of sensors working in parallel. So, in addition to our camera-only developmental AV, we’ve also been testing another that uses only radar and LiDAR. We’re developing each system (camera, and radar/LiDAR) to be capable of driving the AV entirely on its own, independently of the other. But we soon found that the detection-and-ranging sensors currently on the market still leave much to be desired. That’s why we’re developing our own.

What are Radar and LiDAR?

Unlike cameras, which passively capture light from their environment, radar and LiDAR both work by actively emitting signals and measuring their returns. This allows them to detect objects and other road users and determine their range (relative distance). Radar does this with radio waves, while LiDAR uses infrared light. Both have been widely embraced across the autonomous-vehicle industry for their complementary capabilities, each covering the blind spots left by the other. Even at this basic level, however, Mobileye’s approach differs fundamentally from the one more commonly practiced across the industry.
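For a pulsed time-of-flight sensor, the simplest form of this principle, range follows directly from how long the echo takes to return: the signal travels to the target and back, so range = c·t/2. The minimal sketch below illustrates only that textbook relation. The function name is our own, and the sketch uses the speed of light in vacuum, ignoring the slight difference in air.

```python
# Speed of light in vacuum (m/s); the small difference in air is ignored here.
SPEED_OF_LIGHT = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Return the one-way distance to a reflector from the echo's round-trip time.

    The emitted signal covers the distance twice (out and back), so the
    range is half of (speed of light x elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT * round_trip_s / 2.0


if __name__ == "__main__":
    # An echo arriving 1 microsecond after emission comes from roughly 150 m away.
    print(f"{range_from_round_trip(1e-6):.1f} m")  # 149.9 m
```

The same halving applies to radar and LiDAR alike; only the wave (radio versus infrared) differs.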

True Redundancy™

Most companies pursuing autonomous-vehicle technology feed information from all three types of sensors (cameras, radars, and LiDARs) into one sensing system, which then produces a single model of the environment. This method is known as “sensor fusion.” Mobileye’s differentiated approach, True Redundancy, instead creates two parallel AV sub-systems that build two independent models of the driving environment, one from cameras and one from radar and LiDAR, each operating independently of the other. This method is both more robust and more streamlined, and it offers a key failsafe.
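One way to see why two independent sub-systems act as a failsafe: if the camera sub-system and the radar/LiDAR sub-system fail independently, the vehicle loses its perception only when both fail at the same moment, so their failure probabilities multiply. The sketch below assumes statistical independence (the premise of keeping the two pipelines separate), and the failure rates in the example are hypothetical numbers chosen for illustration, not Mobileye figures.

```python
def redundant_failure_probability(p_camera: float, p_radar_lidar: float) -> float:
    """Probability that BOTH independent sub-systems fail at the same time.

    Assumes the two failure events are statistically independent, so the
    joint probability is simply the product of the individual ones.
    """
    for p in (p_camera, p_radar_lidar):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_camera * p_radar_lidar


if __name__ == "__main__":
    # Hypothetical per-sub-system failure probabilities, for illustration only.
    p_both = redundant_failure_probability(1e-4, 1e-4)
    print(f"{p_both:.0e}")  # 1e-08
```

A single fused system has no such multiplication: a failure in the shared model is a failure of the whole perception stack.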

Mobileye’s innovation, however, goes beyond how we process radar and LiDAR data; it extends to the sensors themselves. Drawing on the expertise of our parent company, Intel, we’re developing new, more advanced versions of both types of sensors, in a more cost-effective setup designed specifically for self-driving cars.

Software-Defined Digital Imaging Radar

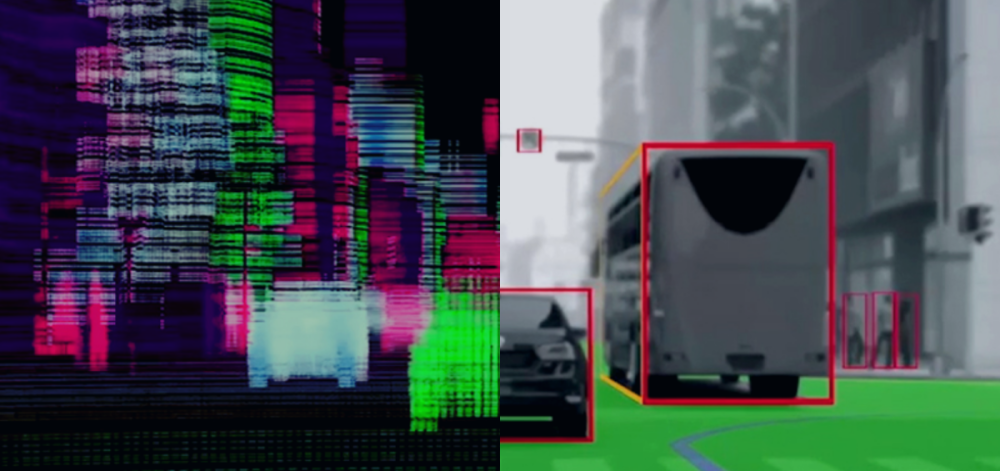

Moving radar sensors into the digital age, our software-defined digital imaging radar promises true 4D imaging (range, azimuth, elevation, and velocity), with much higher resolution, dynamic range, and accuracy than conventional analog radar. This architectural shift enables a leap in performance, increasing the probability of detection while reducing clutter from unwanted echoes. It also allows us to detect weaker targets at longer range, even in the presence of stronger targets that are closer or at the same range and Doppler velocity, while effectively handling interference.

Software definition also brings greater flexibility. Complex proprietary algorithms, running on a dedicated System-on-a-Chip (SoC), require far less processing power than a twelve-fold increase in resolution would otherwise demand. And because ours is a true imaging radar, we can process its output using methods adapted from our expertise in computer-vision technology.
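The post doesn’t disclose Mobileye’s radar waveform or its proprietary algorithms, but a conventional FMCW radar pipeline shows where two of the four imaging dimensions come from. A Fourier transform along each chirp resolves range, and a second transform across successive chirps resolves Doppler (radial velocity). Azimuth and elevation would come from a further transform across the antenna array, which is omitted here. This is a textbook stand-in, not Mobileye’s method: it uses a naive pure-Python DFT so it runs without dependencies, and the simulated targets, bin indices, and detection threshold are made-up values.

```python
import cmath
import math


def dft(seq):
    """Naive discrete Fourier transform, O(n^2); adequate for a tiny example."""
    n = len(seq)
    return [
        sum(seq[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def simulate_frame(targets, n_chirps=16, n_samples=16):
    """Synthesize beat-signal samples for ideal point targets.

    Each target is (range_bin, doppler_bin, amplitude). Rows are chirps
    (slow time); columns are samples within a chirp (fast time).
    """
    return [
        [
            sum(
                amp * cmath.exp(2j * math.pi * (r_bin * n / n_samples + d_bin * m / n_chirps))
                for r_bin, d_bin, amp in targets
            )
            for n in range(n_samples)
        ]
        for m in range(n_chirps)
    ]


def range_doppler_map(frame):
    """Return the magnitude range-Doppler map, indexed as rd[doppler_bin][range_bin]."""
    # Step 1: transform each chirp along fast time -> one range profile per chirp.
    range_profiles = [dft(chirp) for chirp in frame]
    n_chirps, n_range = len(range_profiles), len(range_profiles[0])
    rd = [[0.0] * n_range for _ in range(n_chirps)]
    # Step 2: for every range bin, transform across chirps (slow time) -> Doppler.
    for r in range(n_range):
        slow_time = [range_profiles[m][r] for m in range(n_chirps)]
        for d, value in enumerate(dft(slow_time)):
            rd[d][r] = abs(value)
    return rd


def detect(rd, threshold):
    """List (doppler_bin, range_bin) cells whose magnitude exceeds a fixed threshold."""
    return [
        (d, r)
        for d, row in enumerate(rd)
        for r, magnitude in enumerate(row)
        if magnitude > threshold
    ]


if __name__ == "__main__":
    # Hypothetical scene: a strong reflector and a second target 20x weaker.
    frame = simulate_frame([(3, 2, 1.0), (10, 12, 0.05)])
    rd = range_doppler_map(frame)
    print(detect(rd, threshold=5.0))  # [(2, 3), (12, 10)]
```

A production pipeline would use an FFT, windowing, and an adaptive detector such as CFAR rather than a fixed threshold.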

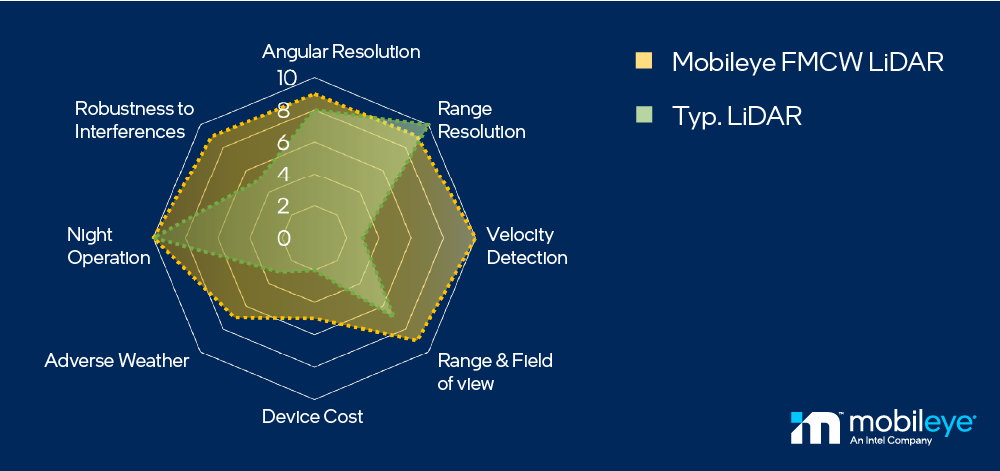

Silicon Photonics-based Frequency-Modulated Continuous Wave LiDAR

The next frontier in LiDAR technology, Frequency-Modulated Continuous Wave (FMCW) LiDAR offers a striking array of advantages over conventional time-of-flight (ToF) LiDAR systems. To the range, elevation, and azimuth (horizontal angle) that a LiDAR typically samples, FMCW adds velocity, turning LiDAR from a 3D into a 4D sensor. This allows small, fast-approaching targets (like motorcycles) to be identified quickly at greater distances, lets the headings of detected objects be measured more reliably, and enriches the AI algorithms with additional velocity information.
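How does FMCW recover velocity as well as range? In one common scheme, the laser’s frequency is swept up and then down (a triangular chirp), and the returning light is mixed with the outgoing light to produce a beat frequency. The range delay contributes equally to the up-chirp and down-chirp beat frequencies, while the Doppler shift of a moving target adds to one and subtracts from the other, so a single up/down pair yields both quantities. The sketch assumes that scheme, an approaching-is-positive sign convention, and a range term larger than the Doppler term; the 1550 nm wavelength and the chirp slope are illustrative values, not Mobileye specifications.

```python
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def beat_frequencies(range_m, radial_velocity_mps, slope_hz_per_s, wavelength_m):
    """Forward model: beat frequencies (Hz) on the up-chirp and the down-chirp.

    Assumed convention: positive velocity means the target is approaching,
    and the range term exceeds the Doppler term, so both beats stay positive.
    """
    f_range = 2.0 * range_m * slope_hz_per_s / SPEED_OF_LIGHT
    f_doppler = 2.0 * radial_velocity_mps / wavelength_m
    return f_range - f_doppler, f_range + f_doppler


def range_and_velocity(f_up, f_down, slope_hz_per_s, wavelength_m):
    """Invert the model: recover range (m) and radial velocity (m/s)."""
    f_range = (f_up + f_down) / 2.0    # common part -> range
    f_doppler = (f_down - f_up) / 2.0  # differential part -> velocity
    range_m = SPEED_OF_LIGHT * f_range / (2.0 * slope_hz_per_s)
    velocity_mps = wavelength_m * f_doppler / 2.0
    return range_m, velocity_mps


if __name__ == "__main__":
    SLOPE = 1e9 / 10e-6    # hypothetical: 1 GHz sweep over 10 microseconds
    WAVELENGTH = 1550e-9   # 1550 nm, a common FMCW LiDAR wavelength (assumed)
    f_up, f_down = beat_frequencies(150.0, 20.0, SLOPE, WAVELENGTH)
    r, v = range_and_velocity(f_up, f_down, SLOPE, WAVELENGTH)
    print(f"range={r:.1f} m, velocity={v:.1f} m/s")  # range=150.0 m, velocity=20.0 m/s
```

A pulsed ToF LiDAR measures only the echo delay, so it would need to compare positions across frames to estimate the same velocity.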

FMCW LiDAR is also less sensitive to interference from sunlight, reflections, and other LiDAR units. And because it emits a continuous wave of light rather than short pulses, FMCW LiDAR can operate at lower, safer power levels while achieving a longer detection range and a higher effective dynamic range, and it minimizes unwanted artifacts caused by retroreflectors (like traffic signs and license plates).

The Dream Team to Make It Happen

Mobileye and Intel’s combined competencies put us in a unique position to develop these complex, cutting-edge active sensors and bring them to market. Intel has a wealth of experience in developing the software-defined infrastructure that forms the basis of our new radar. It also has a rare silicon photonics fab that can integrate both active and passive components onto a single chip, which underpins our LiDAR. And Mobileye has proven expertise in both automotive applications and digital-image analysis to implement these highly advanced sensors for autonomous driving.

Savings Through Advancement

As we’ve long advocated, for self-driving vehicles to move from science experiment to broad adoption, their costs must come down to a practicable level. Unfortunately, LiDAR is inherently a relatively expensive autonomous-vehicle sensor, costing roughly ten times as much as radar, and we don’t expect that cost to come down significantly anytime soon.

Rather than trying to get the most out of the cheapest available sensors to reduce overall cost, we’re increasing the radar’s capabilities so that we can rely less on costly LiDAR. Accordingly, our road map aims for 360-degree coverage around the vehicle by both cameras and digital imaging radar, with only a single front-facing, very-high-resolution FMCW LiDAR to map the drivable space and detect small targets farther ahead.
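The savings argument comes down to simple arithmetic. Using the post’s rough ratio (one LiDAR costs about as much as ten radars), the sketch compares a suite that relies on several LiDARs for 360-degree coverage with one that uses a single front-facing LiDAR. The sensor counts are hypothetical, costs are expressed in radar-unit multiples rather than dollars, cameras are left out, and the sketch assumes an imaging radar costs the same as a conventional one, which the post does not state.

```python
from dataclasses import dataclass

# Relative unit costs: the post says LiDAR costs roughly ten times as much as radar.
# Treating the imaging radar as costing one radar unit is an assumption.
RADAR_UNIT_COST = 1.0
LIDAR_UNIT_COST = 10.0 * RADAR_UNIT_COST


@dataclass
class SensorSuite:
    name: str
    radars: int
    lidars: int

    def relative_cost(self) -> float:
        """Active-sensor cost in radar-unit multiples (cameras excluded)."""
        return self.radars * RADAR_UNIT_COST + self.lidars * LIDAR_UNIT_COST


if __name__ == "__main__":
    # Hypothetical sensor counts, for illustration only.
    suites = [
        SensorSuite("360-degree LiDAR ring + radars", radars=6, lidars=4),
        SensorSuite("360-degree radar + one front LiDAR", radars=6, lidars=1),
    ]
    for suite in suites:
        print(f"{suite.name}: {suite.relative_cost():.0f} radar-units")
```

With these made-up counts, the LiDAR-heavy suite costs 46 radar-units against 16 for the radar-centric one; the gap grows with every LiDAR the radar replaces.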

The Future of Autonomous Driving

Using the best radar and LiDAR sensors currently available, we remain focused on our timeline of bringing our autonomous-vehicle platform to market by the end of next year – first in on-demand self-driving Mobility-as-a-Service, which in turn will pave the way towards mass-produced consumer AVs. But with development of our next-generation radar and LiDAR sensors steaming ahead, we’re looking at the next step in the evolution of our AV sensing suite. Look for our software-defined digital imaging radar and Silicon Photonics-based FMCW LiDAR to further advance our AV platform in 2025.
