May 22, 2023

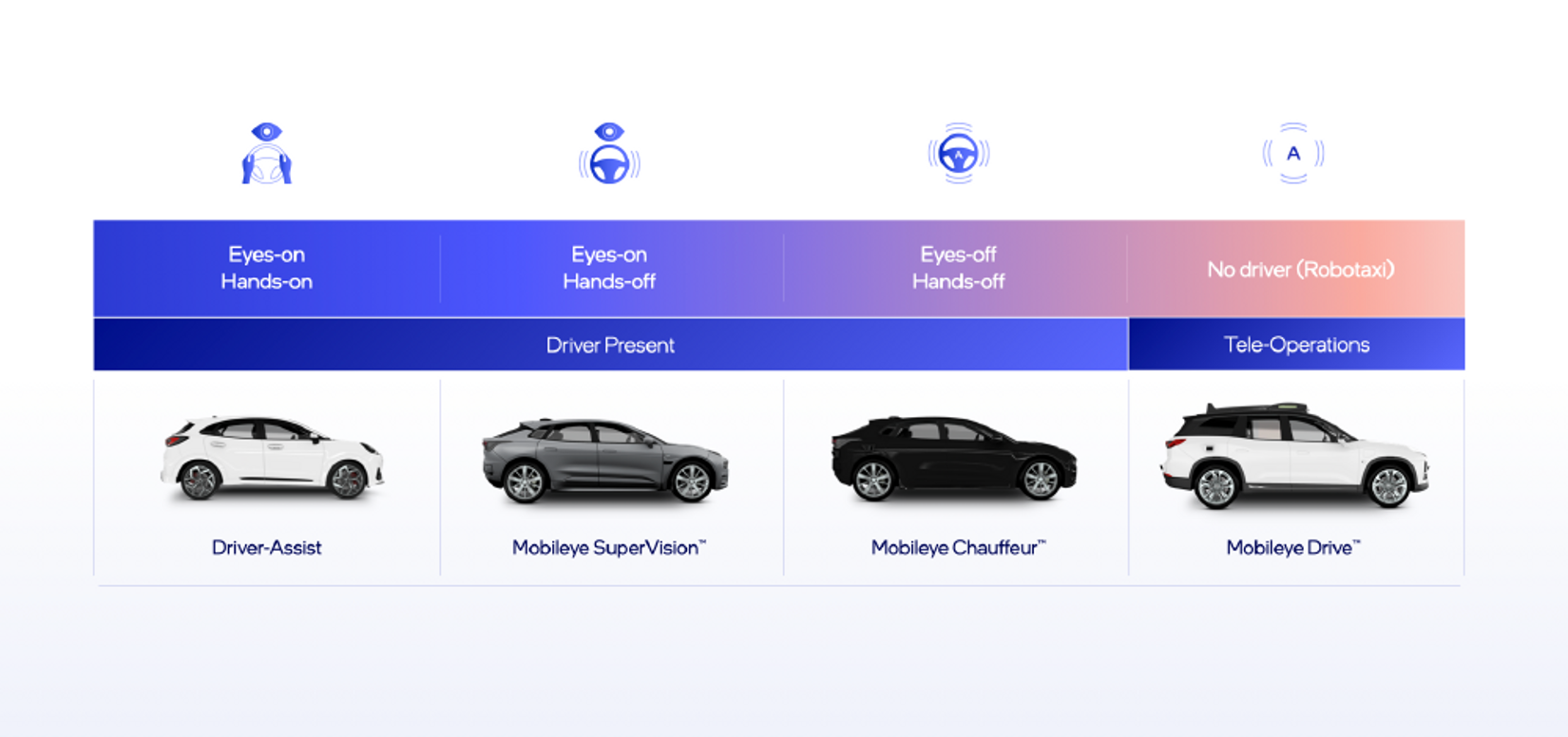

Autonomous Driving Levels: Hands Off, Eyes Off - A New Taxonomy

Instead of autonomous driving levels, our new taxonomy defines assisted and autonomous driving systems by degree of driver involvement.

Mobileye has formulated a new framework for assisted and autonomous driving that everyone can understand.

Language matters. Words have power. They help us to define things properly, to understand them, and to understand one another. So, when the existing lexicon leaves too much room for confusion, we need new terminology that clarifies and simplifies the subject matter. And that is precisely what we outlined at CES earlier this year: a new taxonomy for assisted and autonomous driving that’s both accurate and easy to understand.

“The discourse today is Level 2, Level 3, Level 4.... This taxonomy is good for engineers,” Mobileye CEO & founder Prof. Amnon Shashua said at CES 2023. But what we really need is “a product-oriented language. So, we created our own language which says that there is eyes-on/eyes-off, there is hands-on/hands-off, there is a driver or no driver. That’s it.”

Here’s the logic behind this new taxonomy, and how it applies to various types of driving systems.

Simplifying the Relationship Between Driver and Vehicle

Until now, the capabilities of assisted and autonomous driving technologies have been categorized into six levels of driving automation. That taxonomy was first defined in 2014 under SAE J3016, a standard issued by SAE International (formerly known as the Society of Automotive Engineers). At one end of the spectrum sits Level 0, with no significant form of driver assistance whatsoever. At the other end, Level 5 autonomous driving describes vehicles capable of operating autonomously anywhere and everywhere. Everything else falls into one of the levels in between.

The SAE levels of automation have been adopted widely across the industry, and arguably represent the most useful taxonomy we've had until now. But do these autonomous driving levels clearly and effectively convey a vehicle's capabilities? Will the layperson understand where their responsibilities as a driver end and where the vehicle's begin (without either a chart or an in-depth understanding of the technology)?

As the technologies have developed and evolved, the autonomous driving levels are no longer the most effective way of characterizing a vehicle's automation. This is particularly true given the emergence of L2+, the lack of clarity regarding the man/machine interaction under L3, and the way widespread mapping has diminished the practical differences between L4 and L5.

So instead of levels of automation defined by engineers for engineers, we set out to characterize the relationship between human and machine based on the simple questions that matter most to drivers (among others), namely:

1. Does the driver need to keep hands on the wheel?

2. Does the driver need to keep a watchful eye on the road?

3. Does the vehicle even need a driver at all?

The answers to these questions clearly define which responsibilities rest with the driver and which with the vehicle, and in what types of driving environments.
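To make the three questions concrete, here is a minimal Python sketch that answers them in order and returns a product-oriented label. The names `DriverRole` and `classify` are hypothetical, and treating a hands-on/eyes-off combination as invalid is our own assumption; this illustrates the logic of the taxonomy and is not an official Mobileye specification.

```python
from enum import Enum


class DriverRole(Enum):
    """Hypothetical product-oriented categories built from the three questions."""
    HANDS_ON_EYES_ON = "hands-on/eyes-on"
    HANDS_OFF_EYES_ON = "hands-off/eyes-on"
    HANDS_OFF_EYES_OFF = "hands-off/eyes-off"
    NO_DRIVER = "no driver"


def classify(hands_on_required: bool, eyes_on_required: bool,
             driver_required: bool) -> DriverRole:
    """Answer the three questions and return the matching category (sketch only)."""
    if not driver_required:
        # Question 3: with no driver at all, the hands and eyes questions no longer apply.
        return DriverRole.NO_DRIVER
    if hands_on_required and not eyes_on_required:
        # Assumption: hands on the wheel with eyes off the road is not a
        # category described in the taxonomy, so this sketch rejects it.
        raise ValueError("hands-on/eyes-off is not a category in this sketch")
    if hands_on_required:
        # Question 1: hands must stay on the wheel (and therefore eyes on the road).
        return DriverRole.HANDS_ON_EYES_ON
    if eyes_on_required:
        # Question 2: hands may come off, but the driver must still watch the road.
        return DriverRole.HANDS_OFF_EYES_ON
    # A driver is present but needs neither hands on the wheel nor eyes on the road.
    return DriverRole.HANDS_OFF_EYES_OFF


if __name__ == "__main__":
    print(classify(hands_on_required=False, eyes_on_required=True,
                   driver_required=True).value)
    # prints "hands-off/eyes-on"
```

Checking the driver question first reflects the fact that a driverless vehicle makes the other two questions moot. The example call describes a system that lets the driver take hands off the wheel while still supervising the road, which is how Mobileye SuperVision is characterized.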

Terminology in Action

The baseline assumption governing automobile operation over most of its history has been that a human driver is solely responsible for controlling the vehicle and watching the road at all times – hands-on, eyes-on. But that’s begun to change with the advancement of driver-assistance systems and the development of autonomous vehicles.

With a solution like Mobileye SuperVision™, for example, the driver can take hands off the wheel and let the vehicle operate itself on all regular road types. But responsibility and overall control still rest with the driver, who must supervise the vehicle’s operation at all times. Mobileye SuperVision, then, is a hands-off/eyes-on system.

For Mobileye Chauffeur™, we add active sensors (such as radar and lidar) to the computer vision, specialized crowdsourced maps, and lean driving policy that go into Mobileye SuperVision. These redundant active sensors will allow drivers to take not only their hands off the wheel but also their eyes off the road, within specific driving environments, or what engineers call Operational Design Domains. (Just as a vacuum cleaner may be designed to work indoors and a lawnmower outdoors, a system might initially be restricted to driving autonomously on certain road types, with its Operational Design Domain expanding over time.)
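The Operational Design Domain idea can be illustrated with a small Python sketch: eyes-off operation applies only on road types inside the ODD, and the ODD can grow over time. The class `EyesOffSystem`, its methods, and the road-type strings are all hypothetical, and the sketch deliberately does not model what a system does outside its ODD.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EyesOffSystem:
    """Hypothetical hands-off/eyes-off system restricted to an ODD of road types."""
    name: str
    odd_road_types: frozenset[str]  # road types where eyes-off operation is allowed

    def eyes_off_allowed(self, road_type: str) -> bool:
        # Eyes-off driving is permitted only inside the Operational Design Domain.
        return road_type in self.odd_road_types

    def expand_odd(self, new_road_types: set[str]) -> "EyesOffSystem":
        # Expanding the ODD returns a new, immutable system covering more road types.
        return EyesOffSystem(self.name, self.odd_road_types | frozenset(new_road_types))


if __name__ == "__main__":
    system = EyesOffSystem("example", frozenset({"highway"}))
    print(system.eyes_off_allowed("highway"))  # prints True
    print(system.eyes_off_allowed("urban"))    # prints False
    expanded = system.expand_odd({"urban"})
    print(expanded.eyes_off_allowed("urban"))  # prints True
```

Making the class immutable keeps each ODD expansion explicit: the original system is unchanged, and the expanded one is a separate value. This mirrors the vacuum-cleaner analogy, where a capability is defined by the environments it was designed for.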

Mobileye Drive™, meanwhile, further builds upon the capabilities of Mobileye Chauffeur with the addition of a tele-operation system. That added capability is incorporated to handle the few remaining rare cases where human involvement may be required, allowing for the removal of the driver from the equation entirely.

Sounds simple enough, right? We certainly hope so. Because while the technologies that go into these systems are highly complex, we believe their capabilities need to be expressed as simply and clearly as possible – not just for the benefit of those developing the technologies, but for the general public who will be using them as well.
