Opinion | February 06, 2023

Defining a New Taxonomy for Consumer Autonomous Vehicles

Mobileye’s CEO and CTO detail the new taxonomy revealed at CES 2023 for deploying eyes-off/hands-off self-driving consumer vehicles.

Prof. Amnon Shashua and Prof. Shai Shalev-Shwartz

Mobileye's new taxonomy for autonomous vehicles is based on defining the interaction between man and machine.

Tech and auto companies had a turbulent 2022 in almost every respect. In spite of the turmoil, the automobile industry has shifted gears in its drive to adopt and deploy consumer-level autonomy in the near future. As a result, a number of industry conventions around autonomous driving have again become unclear and ambiguous. The confusion threatens to obscure the real benefits of autonomy in terms of safety, convenience, and efficiency.

We see a growing need for a new way of talking and thinking about consumer AVs (CAVs) that recognizes how they will work in the real world and ensures the usefulness, safety, and scalability of consumer-level AVs. To achieve that, Mobileye has laid down a new taxonomy alongside a set of basic requirements for CAVs, which we presented in our annual address at CES 2023.

The Need for Clarity

Autonomous driving is viewed mainly through the prism of the SAE Levels of autonomy, also known as J3016, which is widely accepted as the industry standard. When introduced in 2014, SAE J3016 provided a very good reference for AV development and regulation while everybody was still wrapping their minds around the question of “what is autonomous driving, exactly?” However, as we move forward with the productization of autonomous and highly automated systems, it is evident that the current Level 1-5 taxonomy cannot form a basis for a product-oriented description that is clear for both the engineer and the end customer.

When looking at the current industry discourse, we see two issues that need to be addressed. The first is that the definitions are vague and unclear from an end-user perspective. The second, and more important, is the unnecessary distinction between Level 3 and Level 4. According to J3016, Level 3 and Level 4 differ in the Minimum Risk Maneuver (MRM) requirements and the vigilance level of the human driver. This may lead to “failures by design” of the autonomous system, as demonstrated in an opinion paper we published in 2021.

Simpler Language

To deal with the deficiencies described above, we propose a simplified language that defines the levels of autonomy based on four axes: (i) Eyes-on/Eyes-off, (ii) Hands-on/Hands-off, (iii) Driver versus No-driver, and (iv) MRM requirement. As seen in the chart below, this creates four product categories covering the entire spectrum of automated driving.

1) Eyes-on/Hands-on: this category covers all the basic driver-assist functions, such as Autonomous Emergency Braking (AEB) and Lane Keep Assist (LKA). The driver is still responsible for the entire driving task while the system monitors the human driver (Level 1-2 according to SAE).

2) Eyes-on/Hands-off: this is a driver-assistance function where the driver’s hands can be off the steering wheel while the system controls the driving and the driver supervises the system (hence, Eyes-on) within a specified Operational Design Domain (ODD). With a proper driver monitoring system (DMS), one can create a very useful synergy between human and machine (analogous to pilots supervising the autopilot in an aircraft) and increase the overall safety of driving. This is usually referred to as Level 2+, a term that was first coined by Mobileye and is not formally defined by SAE. Because the Eyes-on/Hands-off category is absent from the SAE taxonomy, it is usually wrongly classified as Level 3-4. It is important to emphasize that the role of the “supervisor” changes from (1) to (2). In an Eyes-on/Hands-on system, it is the system that supervises the driver and intervenes (rarely) to avoid an accident, for example by applying the brakes to avoid a collision. Such interventions should be rare and limited to emergency situations rather than a continuous interference with the human driver. In an Eyes-on/Hands-off system, it is the human driver who supervises the system. To be effective, interventions should again be rare, and a proper DMS should be in place to keep the human driver vigilant. An Eyes-on/Hands-off setting increases safety because the failure modes of the human and the system are very different: human failures stem mostly from lack of attention and distraction, which can happen in good weather and mundane road conditions, whereas system failures mostly occur in challenging environments (weather, road types, and traffic maneuvers).

3) Eyes-off/Hands-off: the system controls the driving function within a specified ODD (say, highways with on/off ramp transitions) without the human driver needing to supervise the driving (hence, Eyes-off). Once the ODD comes to an end, and if the driver does not take back control, the system is able to conduct a full MRM and stop safely on the shoulder of the road. This category can be classified as either Level 3 or Level 4 according to SAE J3016. It is worth noting that J3016 exempts the Level 3 system from performing a full MRM by allowing it to stop in-lane, but we argue that a stop-in-lane emergency maneuver is not safe. In addition, the Eyes-off/Hands-off system still requires a qualified driver in the driver’s seat who can take control in non-safety-related situations that arise at zero velocity, so as not to jeopardize the flow of traffic (e.g., deadlocks, a police officer directing traffic, etc.).

4) No Driver: when there is no human driver present, say in a Robotaxi, the role of the human driver is replaced by a teleoperator who can intervene to resolve non-safety situations like those mentioned above.

In the simplified language we propose above, the requirements of the driver are well defined, so there are no ambiguities from the end-user perspective. The human driver is either supervised by the system, supervising the system, or allowed to disengage entirely without being bothered by the system. The Eyes-off category translates to a full MRM capability of safely stopping on the shoulder of the road without blocking traffic. The value proposition of an Eyes-off setting is time, i.e., the human in the driver’s seat can legally attend to non-driving matters, within the prescribed ODD, without the need to supervise the system.
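As a rough illustration, the four categories can be written as a small lookup over the first three axes. This is only a sketch: the names `Category`, `classify`, and `SUPERVISION` are hypothetical labels chosen for this example and do not come from Mobileye or SAE material. The supervision roles are paraphrased from the category descriptions above.

```python
from enum import Enum


class Category(Enum):
    EYES_ON_HANDS_ON = "Eyes-on/Hands-on"
    EYES_ON_HANDS_OFF = "Eyes-on/Hands-off"
    EYES_OFF_HANDS_OFF = "Eyes-off/Hands-off"
    NO_DRIVER = "No Driver"


def classify(eyes_on: bool, hands_on: bool, driver_present: bool) -> Category:
    """Map the first three axes to one of the four product categories."""
    if not driver_present:
        return Category.NO_DRIVER
    if eyes_on:
        return Category.EYES_ON_HANDS_ON if hands_on else Category.EYES_ON_HANDS_OFF
    if hands_on:
        # Eyes-off with hands on the wheel is not one of the four categories.
        raise ValueError("Eyes-off/Hands-on is not a category in this taxonomy")
    return Category.EYES_OFF_HANDS_OFF


# Who supervises whom, paraphrased from the category descriptions.
SUPERVISION = {
    Category.EYES_ON_HANDS_ON: "system supervises the driver",
    Category.EYES_ON_HANDS_OFF: "driver supervises the system",
    Category.EYES_OFF_HANDS_OFF: "no supervision required inside the ODD",
    Category.NO_DRIVER: "teleoperator resolves non-safety situations",
}

category = classify(eyes_on=True, hands_on=False, driver_present=True)
print(category.value, "->", SUPERVISION[category])
```

The fourth axis, the MRM requirement, is deliberately left out of the lookup: the text ties a full MRM to the Eyes-off category and does not spell it out for the others.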

Usefulness, Safety, Scalability

We contend that an Eyes-off system should be governed by three principles: (i) usefulness, (ii) safety, and (iii) scalability.

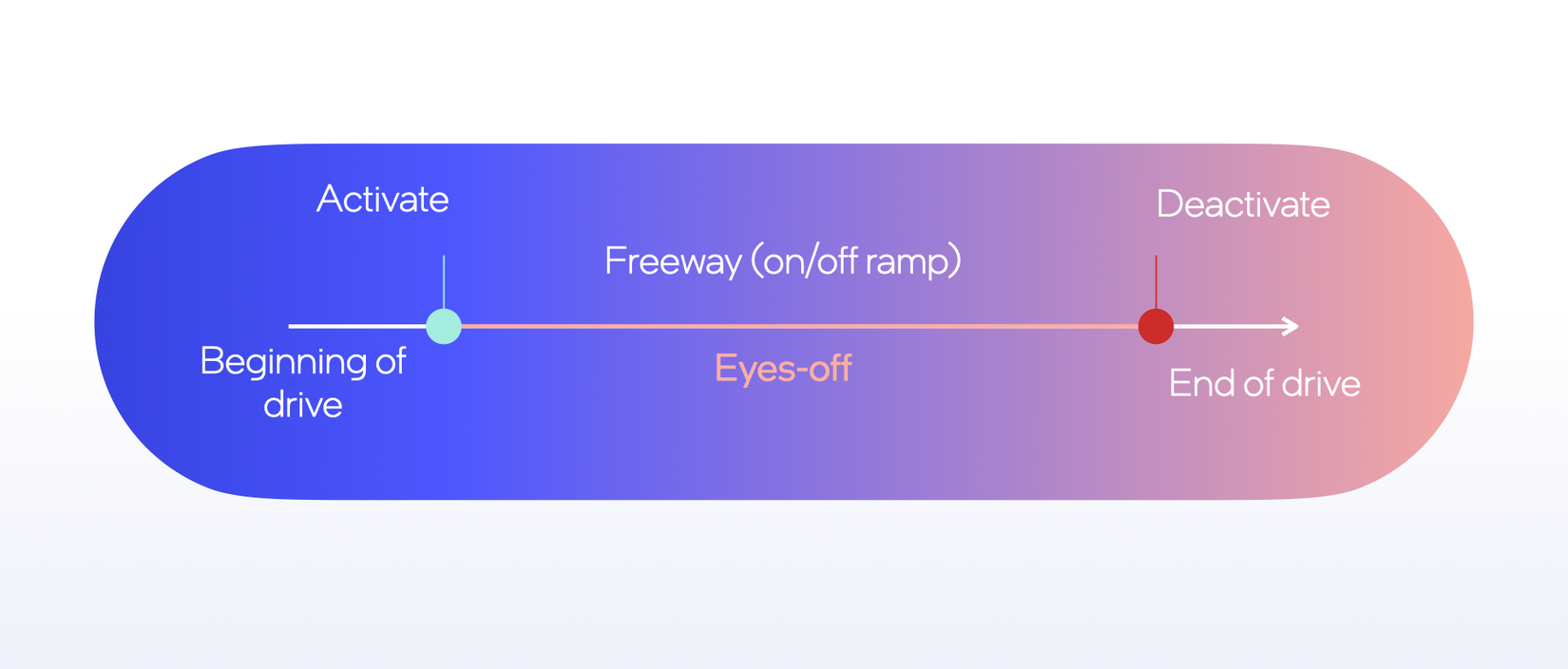

Usefulness: A good Eyes-off system should operate in an ODD that enables prolonged and continuous periods of use, such that entering and leaving the ODD does not happen frequently. As depicted in the figure below, if we look at the average person’s commute, entering and leaving the ODD should happen only once. According to this requirement, we set the minimum useful ODD threshold to be freeways up to 80 mph, including the ability to navigate on-ramps and off-ramps. Anything below that is considered not useful. For example, a system with an ODD of freeways up to 40 mph would require the driver to take control every time the lead vehicle exceeds 40 mph. This also poses a safety risk as it increases the friction between the human and the machine.
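The usefulness threshold can be expressed as a simple check. The sketch below assumes a deliberately simplified ODD description. The `CustomerODD` class and its fields are hypothetical, and a real ODD specification would carry far more detail.

```python
from dataclasses import dataclass

# Threshold stated in the text: freeways up to 80 mph, including ramps.
MIN_USEFUL_SPEED_MPH = 80


@dataclass(frozen=True)
class CustomerODD:
    """Hypothetical, simplified description of a customer-facing ODD."""
    covers_freeways: bool
    max_speed_mph: int
    handles_ramps: bool


def is_useful(odd: CustomerODD) -> bool:
    """True if the ODD meets the minimum usefulness threshold from the text."""
    return (
        odd.covers_freeways
        and odd.max_speed_mph >= MIN_USEFUL_SPEED_MPH
        and odd.handles_ramps
    )


low_speed = CustomerODD(covers_freeways=True, max_speed_mph=40, handles_ramps=False)
full_freeway = CustomerODD(covers_freeways=True, max_speed_mph=80, handles_ramps=True)

print(is_useful(low_speed))     # False
print(is_useful(full_freeway))  # True
```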

Safety: A safe Eyes-off solution should have no systematic errors, i.e., errors that can be reproduced in a certain emergency situation that is within the system’s ODD. Trying to hide behind statistics showing that a particular scenario will only happen rarely leads to unacceptable compromises in system design.

To better articulate the point, let's look at the ODD of current Level 3 systems coming to market: freeways only, up to 40 mph, with no lane changes. Because of the low operating speed, the system should allegedly be able to cope with any in-lane emergency braking. However, out-of-lane emergency maneuvers (responding to dangerous cut-ins, for example) are considered rare and are not supported by the sensor configuration, the sensing state, and the driving policy algorithms. For example, a sensor configuration that does not include high-resolution 360-degree coverage cannot support a lane change under all traffic densities. A system that cannot perform such a maneuver when the situation demands it has a “reproducible error.”

The result of our requirement for no reproducible errors is that the system ODD should be much larger than the customer ODD. In other words, the ODD offered to the customer can be limited so that lane changes are not allowed in regular operation, but the system must still be able to change lanes in an emergency maneuver. This notion should translate directly into the system’s design: high-resolution surround sensors, driving policy, high-definition maps, controllability, and so forth.
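One way to picture the gap between system ODD and customer ODD is as a set difference. The sketch below is illustrative only: the maneuver names and the `reproducible_gaps` helper are hypothetical labels, not an official list of required capabilities.

```python
# Maneuver names are illustrative labels, not an official list.
CUSTOMER_ODD = {"lane_keeping", "in_lane_braking"}
EMERGENCY_MANEUVERS = {"in_lane_braking", "emergency_lane_change"}


def reproducible_gaps(system_capabilities: set[str]) -> set[str]:
    """Return maneuvers the system may need inside its ODD but cannot perform."""
    required = CUSTOMER_ODD | EMERGENCY_MANEUVERS
    return required - system_capabilities


in_lane_only = {"lane_keeping", "in_lane_braking"}
with_surround_sensing = in_lane_only | {"emergency_lane_change"}

print(reproducible_gaps(in_lane_only))           # {'emergency_lane_change'}
print(reproducible_gaps(with_surround_sensing))  # set()
```

A non-empty result corresponds to a reproducible error in the sense used above: a situation inside the ODD that the system is not equipped to handle, however rarely it occurs.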

Scalability: We believe the operational domain of a full Eyes-off/Hands-off vehicle can best be thought of as a stack of ODDs – starting from highways, then adding arterial roads, signaled intersections, unprotected turns and so forth, that eventually add up to autonomy everywhere. We call those ODDs “autonomous blades,” and through this, we have built an evolutionary path of incremental steps in our products from Eyes-on/Hands-off systems to full AVs.

Without this approach, AV development simply doesn’t scale. Every blade of operation requires a “moonshot” of validation and engineering, and if that moonshot is successful, it doesn’t guarantee success in the next blade. Just because a prototype AV can manage city streets in daylight hours doesn’t mean it can easily adapt to multilane highways at 80 mph at night, and vice versa. Instead, we have focused our “moonshot” efforts on the Eyes-on/Hands-off system with full ODD as a baseline for eyes-off blades.

The Bridge to Consumer AVs

Our Mobileye SuperVision™ camera-only Eyes-on/Hands-off system already contains the entire technological backbone needed to enable hands-off driving, such that the transition to eyes-off blades only adds active sensors as redundant components to the perception system. All the heavy lifting, including detailed sensing, the driving policy required to maneuver the car in any traffic scenario, and HD maps covering all types of roads, is done in the SuperVision system. The redundancies to the perception system then become the only incremental work needed to make the leap from eyes-on to eyes-off.

Today more than ever, we believe in the potential for autonomous technology to transform the world and how we travel daily. We at Mobileye know many share that belief, and as we put technologies like SuperVision on the road, we will see those benefits come to life, especially if our industry can clearly communicate what that future looks like and resolve as many ambiguities as it can.
