January 12, 2021

CES 2021: Under the Hood with Prof. Amnon Shashua (Replay & Live Blog)

Prof. Amnon Shashua takes a deeper and more technical dive into the company’s latest progress on automated driving technologies.

This news content was originally published on the Intel Corporation Newsroom.

On the heels of Monday’s Intel news conference, Prof. Amnon Shashua takes a deeper and more technical dive into the company’s latest progress on automated driving technologies.

He gets under the hood of Mobileye Roadbook™ and how Mobileye is building a scalable, autonomous vehicle map that differs greatly from traditional high-definition maps, from how the data is harvested to the state-of-the-art algorithms that automate the map creation. He also discusses the company’s lidar ambitions, its RSS progress and the recent announcement of SuperVision™, Mobileye’s most advanced hands-free driving system.

Live Blog: Follow along below for real-time updates of this virtual event.

10:00 a.m.: Hello and welcome! This is Jeremy Schultz, communications manager at Intel, and thank you for tuning in for Prof. Amnon Shashua’s one-hour master class in Mobileye’s unique and fascinating approach to bringing autonomous vehicles (AVs) to the world.

In person or virtual, Amnon’s CES presentation has been one of my favorite hours at the big show the last few years running. Let’s ride into 2021!

Coming to us from Mobileye HQ in Jerusalem, it’s Mobileye CEO and Intel Senior VP Amnon Shashua taking the podium.

Amnon shared some big news during yesterday’s news conference, and today Amnon promises “a topic or two to deep dive.”

But first a quick business update.

10:01 a.m.: Mobileye’s business is based on three intermingling pillars:

- Driving assist, “which goes from a simple front-facing camera up to a 360, multi-camera setting with very advanced functions”

- “The data we collect from crowdsourcing” — now millions of miles per day — powering not only “our maps but also creating a new data business”

- The full-stack self-driving system: computing, algorithms, perception, driving policy, safety, hardware on up to mobility as a service.

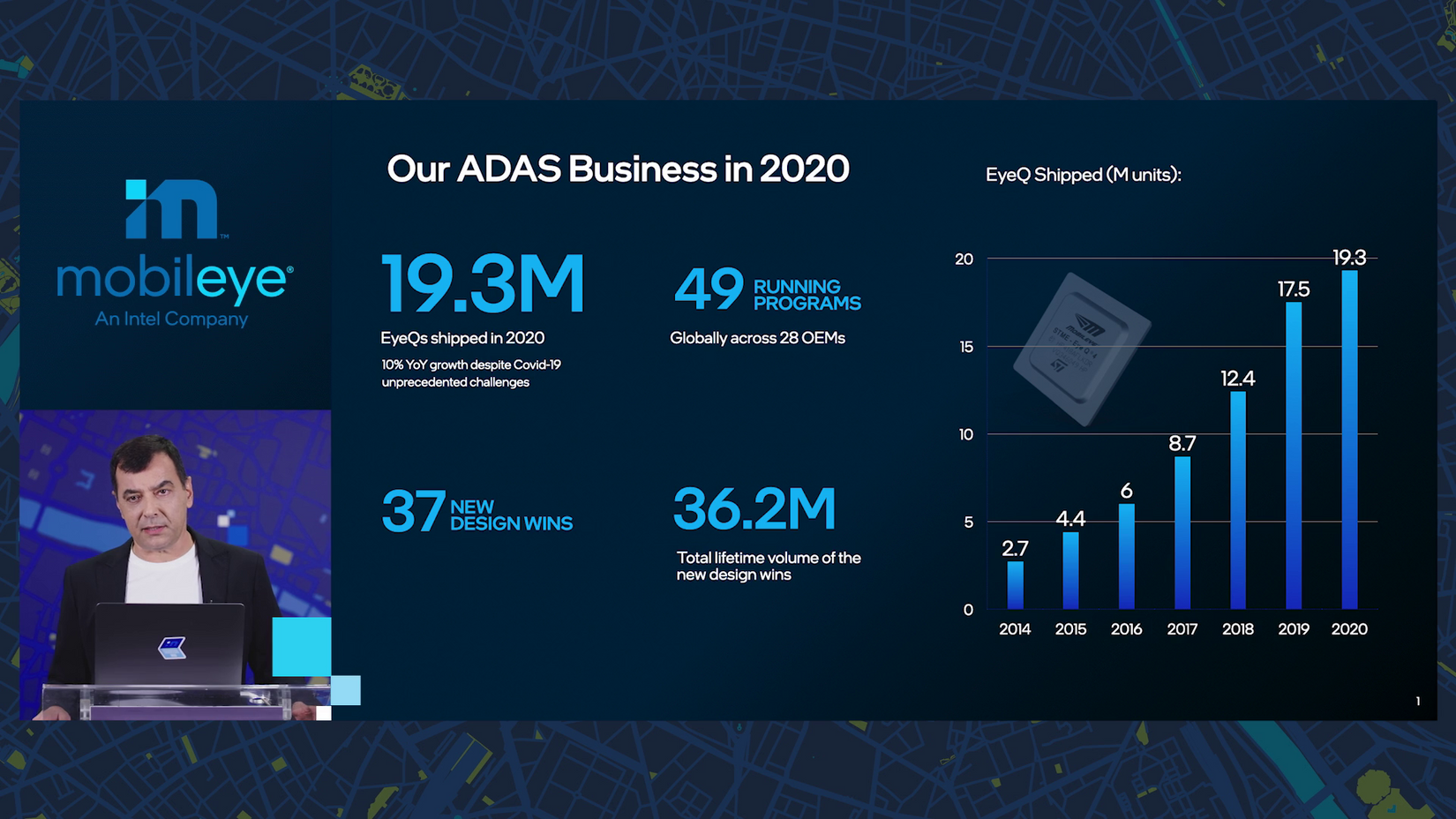

10:02 a.m.: ‘Twas a stop-and-go 2020: “we finished 2020 with 10% year on year growth in shipping EyeQ chips,” Amnon says, “very impressive in my mind, given that three months we had a shutdown of auto production facilities.”

Mobileye earned 37 new design wins — to account for an additional 36 million lifetime units — joining 49 running production programs.

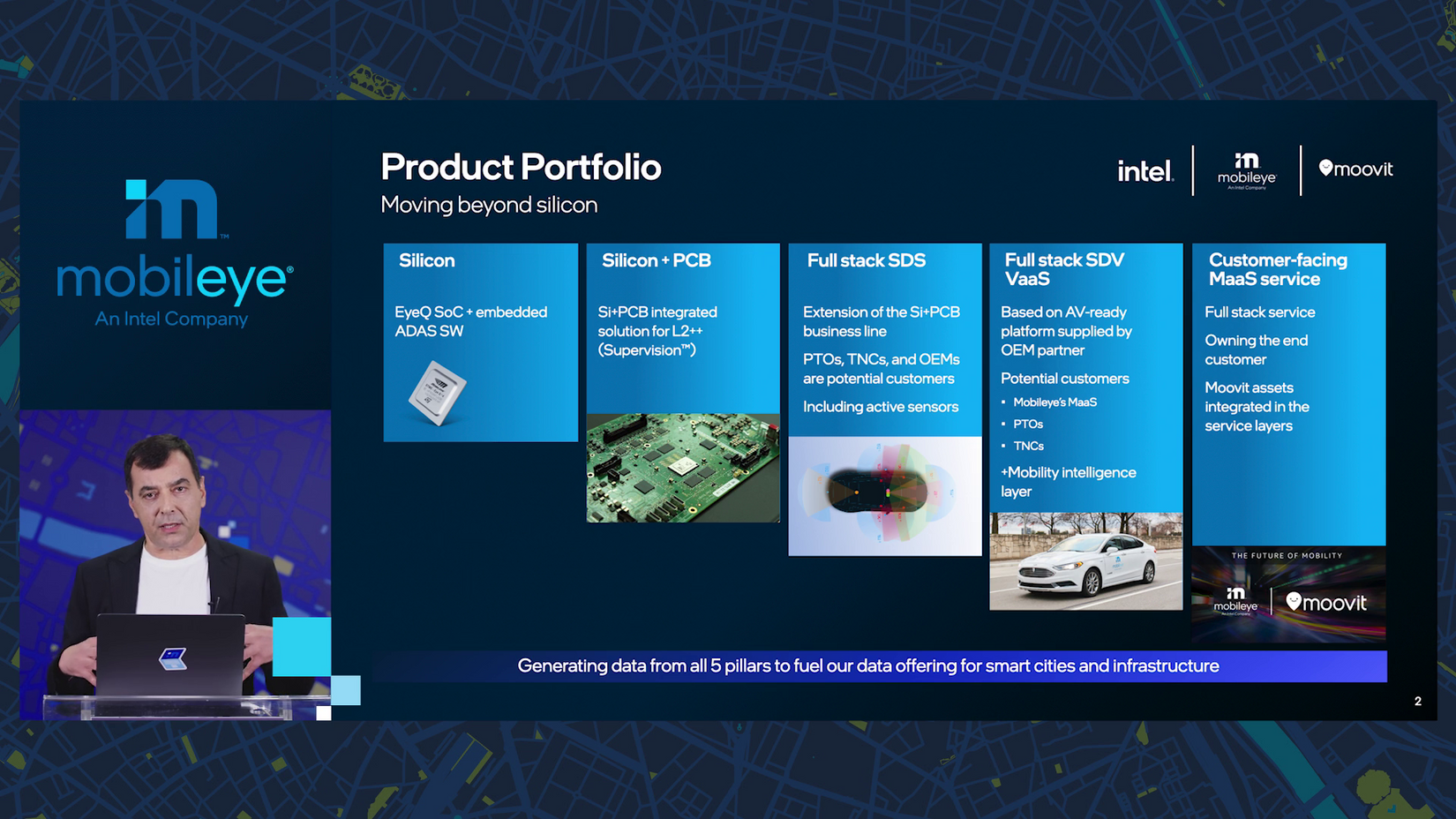

10:04 a.m.: Mobileye’s product portfolio has expanded, Amnon explains, including: EyeQ chips and associated software; a new domain controller; a full high-end driving assist board (PCB); the full-stack self-driving system with hardware and sensors; and finally, with Moovit, which Mobileye acquired in 2020, “the customer-facing portion of mobility as a service.”

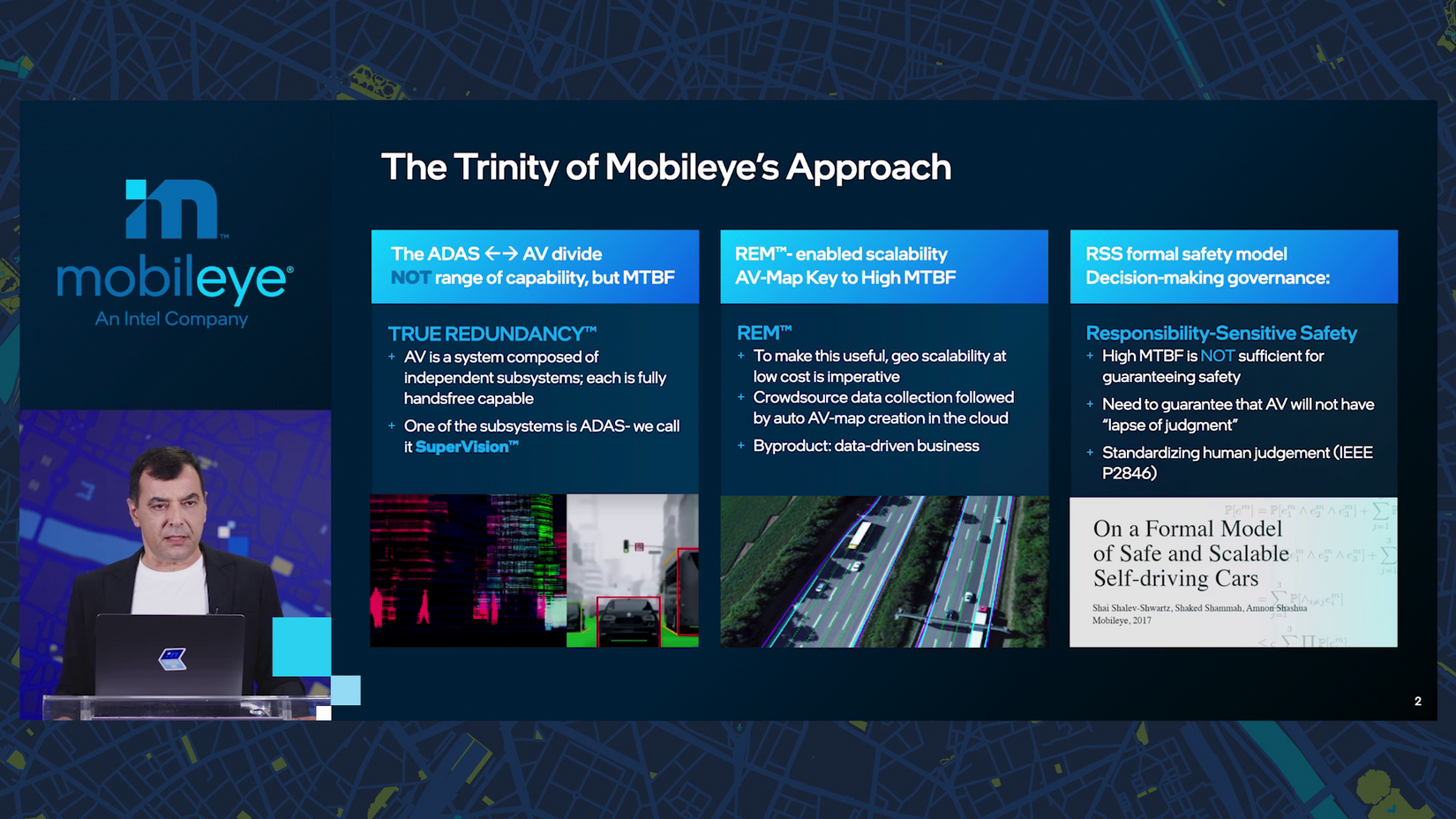

10:05 a.m.: “This slide, I think is one of the most critical slides in this presentation.”

“We call this the trinity of our approach, the three components that are very unique to how Mobileye sees this world.” Ooh, love a clever double meaning.

Firstly, Mobileye doesn’t see the difference between driving assist and autonomous driving in terms of capability or performance, but rather in terms of mean time between failures.

The same system can serve both functions, but “if you remove the driver from the experience, the mean time between failure should be astronomically higher than the mean time between failure when a driver is there.”

10:06 a.m.: “In order to reach those astronomical levels of mean time between failure, we build redundancies.”

This is achieved by having separate camera and lidar-radar subsystems, each capable of end-to-end autonomous driving alone, and each with its own internal redundancies. (Imagine if you could run as many alternative and parallel algorithms on the raw sensor input from your eyes as you wanted, like a Predator. Mobileye’s computers can and do.)
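
To see why redundancy moves the needle so much, here is a back-of-the-envelope sketch (mine, not from the talk). It assumes the two subsystems fail independently and that the car only gets into trouble when both fail in the same hour. The per-hour failure rates are hypothetical placeholders, not Mobileye figures.

```python
import math

def joint_failure_rate(p_camera: float, p_lidar_radar: float) -> float:
    """Chance per hour that BOTH subsystems fail, assuming independent failures."""
    return p_camera * p_lidar_radar

def mtbf_hours(failure_rate_per_hour: float) -> float:
    """Mean time between failures is the inverse of the per-hour failure rate."""
    return 1.0 / failure_rate_per_hour

# Hypothetical rates: each subsystem alone fails about once per 10,000 hours.
p_cam = 1e-4
p_lidar_radar = 1e-4

single = mtbf_hours(p_cam)
combined = mtbf_hours(joint_failure_rate(p_cam, p_lidar_radar))
print(f"one subsystem: {single:.0e} h, both together: {combined:.0e} h")
```

Real-world failures are rarely fully independent, so the product should be read as an optimistic bound rather than a prediction.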

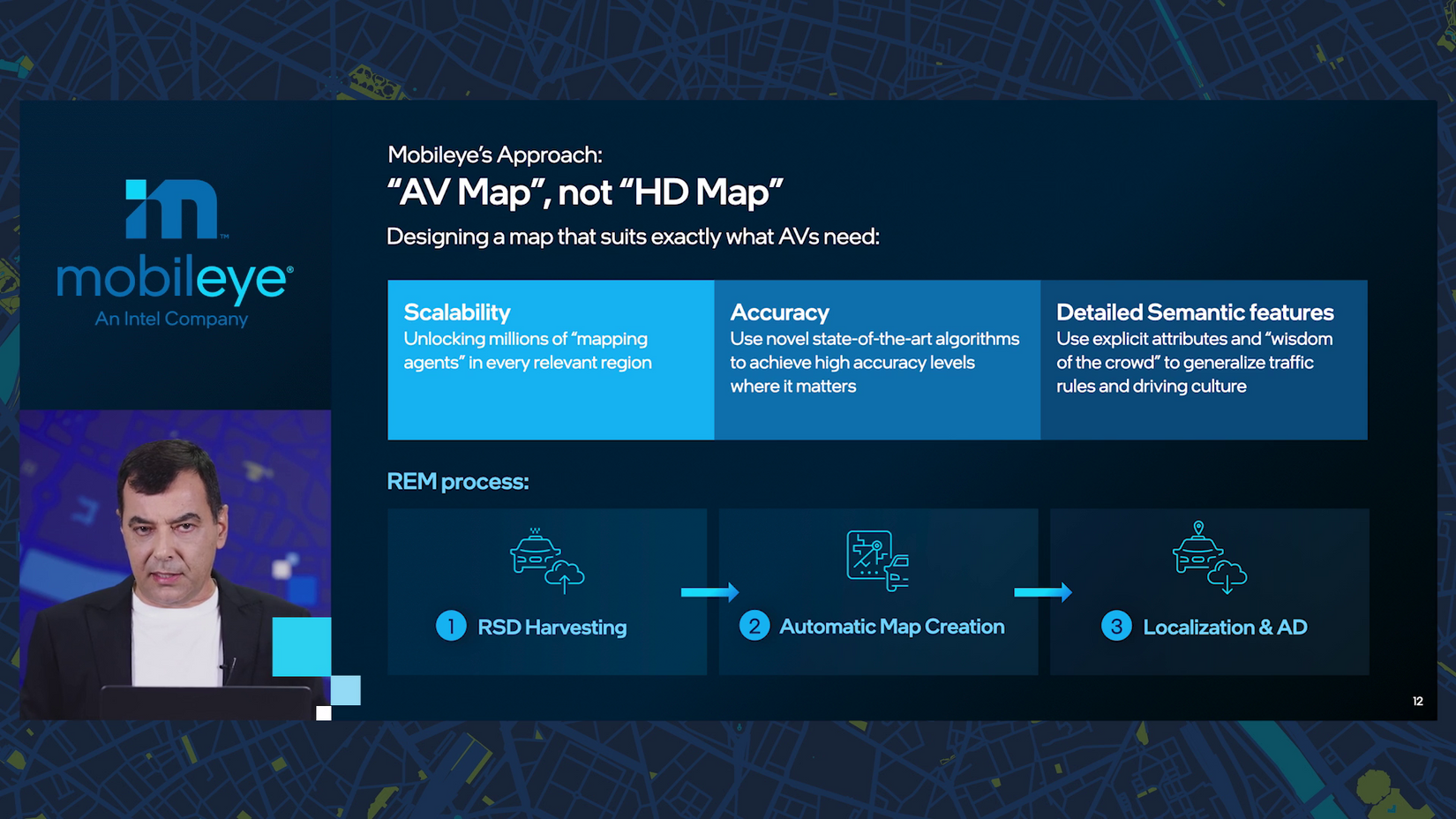

10:07 a.m.: Trinity component two: “the high-definition maps, which we call AV maps.” While other companies drive specialized vehicles to collect data, Mobileye crowdsources data from current cars on the road. “It is a very, very difficult task,” Amnon says.

“Today we passed a threshold in which all that development is becoming very useful to our business.” Mark that note.

10:08 a.m.: Trinity component three: safety.

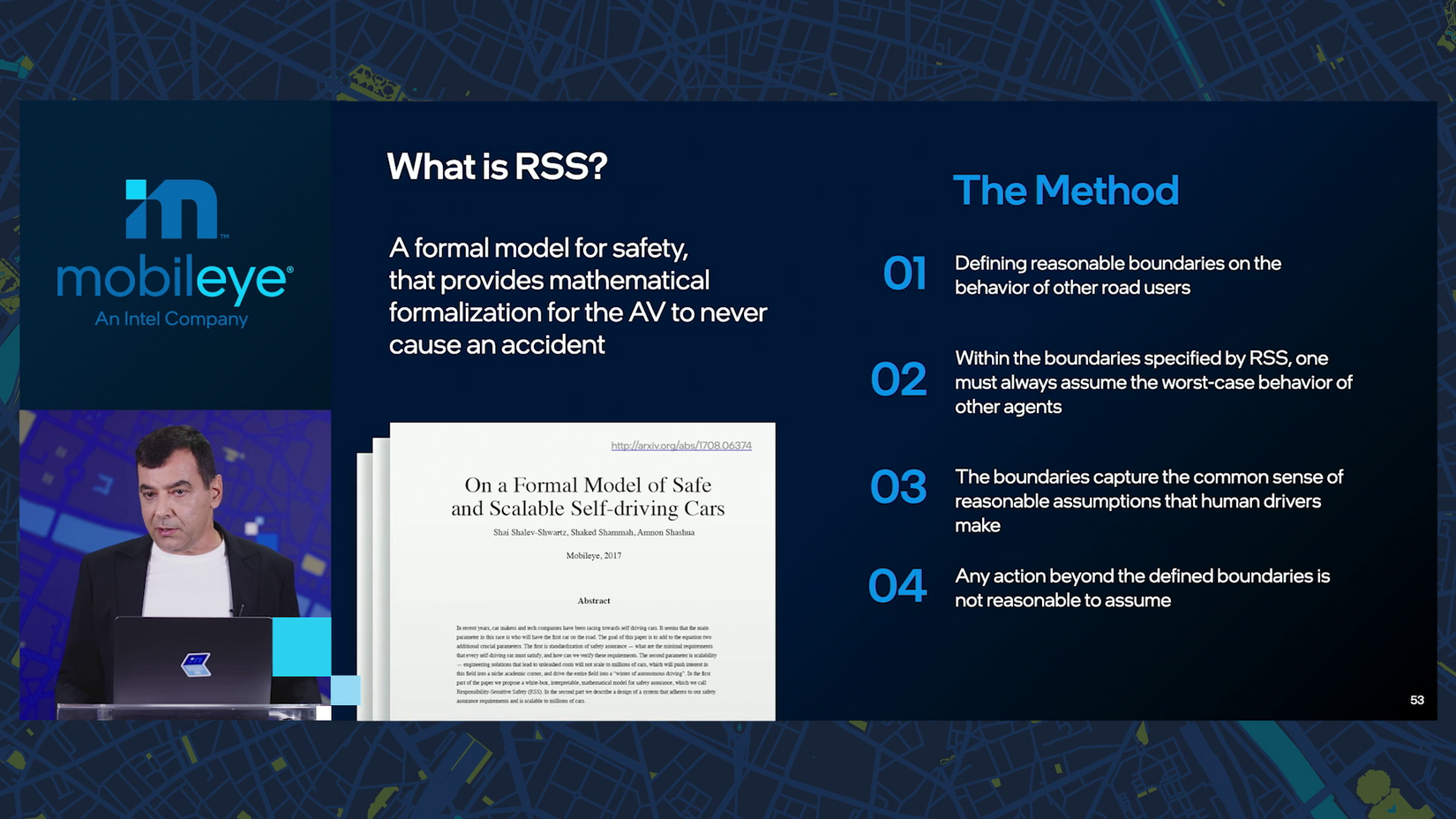

The car’s “driving policy” governs its decisions. “How do we define mathematically what it means to drive carefully?” Mobileye has an answer in RSS, and “we’re evangelizing it through regulatory bodies, industry players around the world with quite great success.”
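
The talk does not spell out the math, but the published RSS paper (Shalev-Shwartz, Shammah and Shashua, 2017) gives a formula for the minimum safe following distance between two cars traveling in the same direction. Below is a minimal sketch of that one rule. The parameter values in the example are illustrative assumptions, not calibrated numbers.

```python
import math

def rss_min_safe_distance(v_rear: float, v_front: float, response_time: float,
                          a_max_accel: float, b_min_brake: float,
                          b_max_brake: float) -> float:
    """Minimum safe longitudinal gap in meters (speeds in m/s, accelerations in m/s^2).

    Worst case assumed by the rule: the front car brakes as hard as it can
    (b_max_brake) while the rear car keeps accelerating (a_max_accel) during
    its response time and only then brakes gently (b_min_brake).
    """
    v_rear_after = v_rear + response_time * a_max_accel
    gap = (v_rear * response_time
           + 0.5 * a_max_accel * response_time ** 2
           + v_rear_after ** 2 / (2.0 * b_min_brake)
           - v_front ** 2 / (2.0 * b_max_brake))
    return max(0.0, gap)

# Illustrative values only: both cars at 20 m/s (72 km/h), 1 s response time.
gap = rss_min_safe_distance(v_rear=20.0, v_front=20.0, response_time=1.0,
                            a_max_accel=2.0, b_min_brake=4.0, b_max_brake=8.0)
print(f"{gap:.1f} m")  # 56.5 m
```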

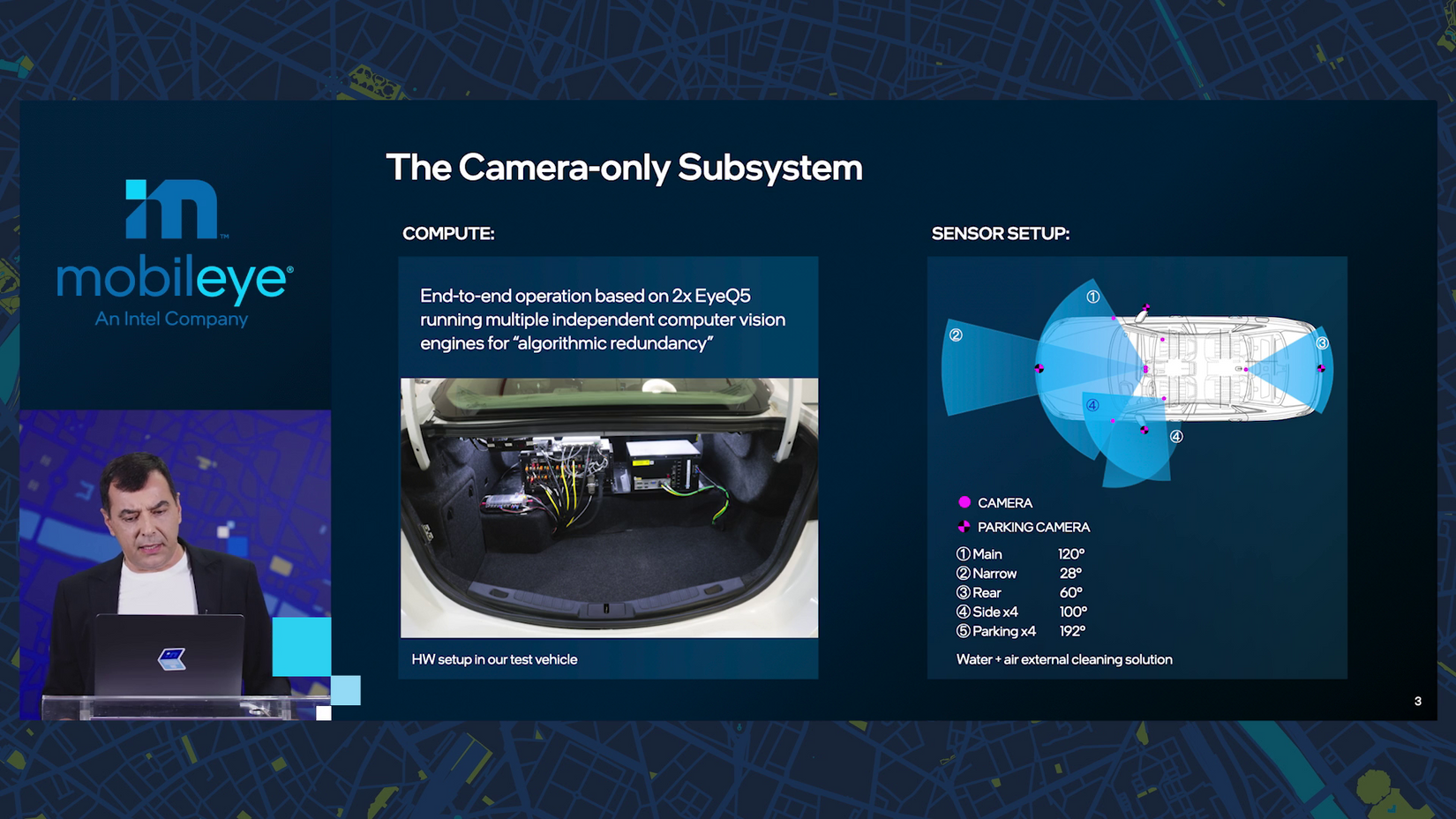

10:09 a.m.: So, how do you build an AV with nothing but a few common 8-megapixel cameras? (Tiny camera trivia: the first iPhone camera to reach 8MP was way back in 2011 with the 4S.)

A rare look inside.

Start with two EyeQ5 chips and add seven long range cameras and four parking cameras, all with varying fields of view. This “gives us both long-range and short-range vision perception, no radars, no lidars.”

In our first rides of the hour, Amnon shows clips of autonomous testing in Jerusalem, Munich and Detroit. Mobileye’s in the motor city!

“What you see here is complex driving in deep urban settings, and it’s all done by the camera subsystem.”

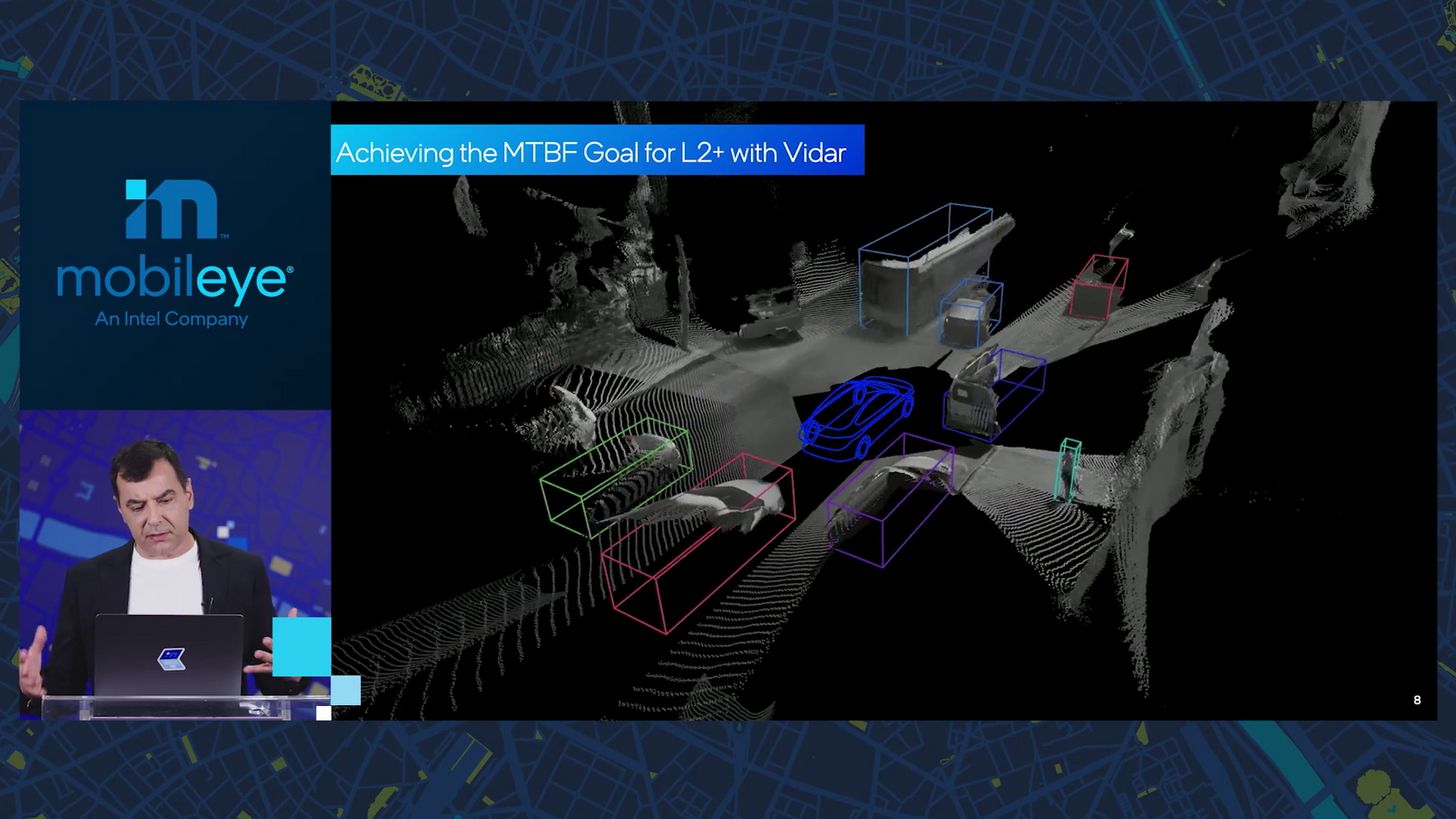

10:10 a.m.: Mobileye calls this multi-camera system “vidar” for visual lidar. It builds an instantaneous 3D map and then uses lidar-like algorithms to detect road users.

Mobileye then duplicates this stream to perform different algorithmic functions in parallel — an example of “internal redundancies” in the system.

10:12 a.m.: This camera-only self-driving system isn’t just going into future AVs — Mobileye is making it available now for driving assist as SuperVision. “It can do much more than simple lane-keeping assist.”

“The first productization is going to be with Geely,” Amnon says, launching in Q4 of 2021. “We’re not talking about something really futuristic — it’s really around the corner.”

10:14 a.m.: When it comes to testing and expanding to new countries, “The pandemic actually allowed us to be much more efficient.” Wuzzat?

In Munich, two non-engineer field support employees got things up and running with remote help in just a couple of weeks (a job normally performed by a couple dozen engineers). “It gave us a lot of confidence that we can scale much, much faster.”

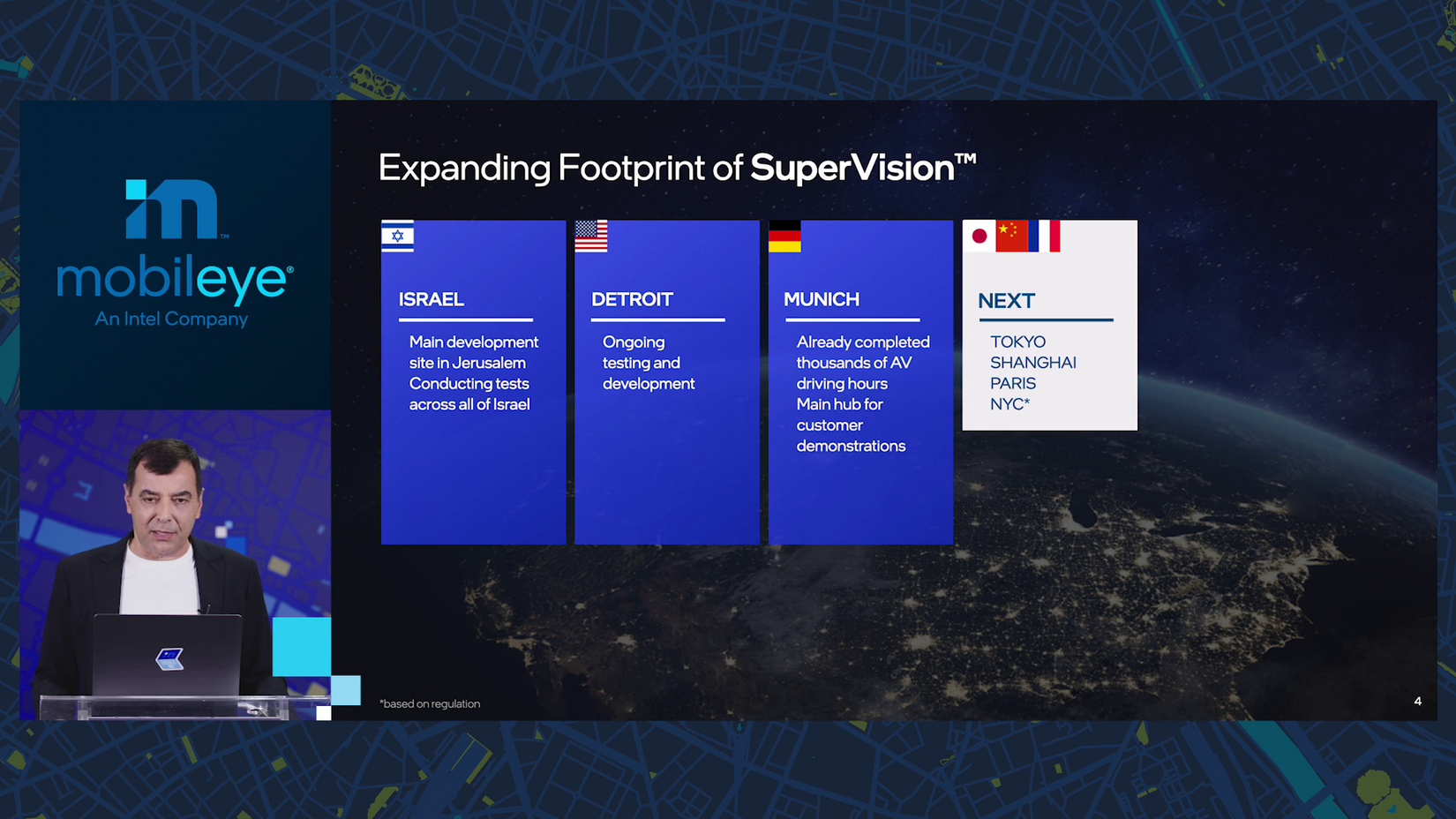

Mobileye customers have since taken more than 300 test drives (well, rides) in Munich, and now Mobileye is expanding testing to Detroit, Tokyo, Shanghai, Paris and, as soon as local regulations allow it, New York City.

“New York City is a very, very interesting geography, driving culture, complexity to test,” Amnon says. “We want to test in more difficult places.”

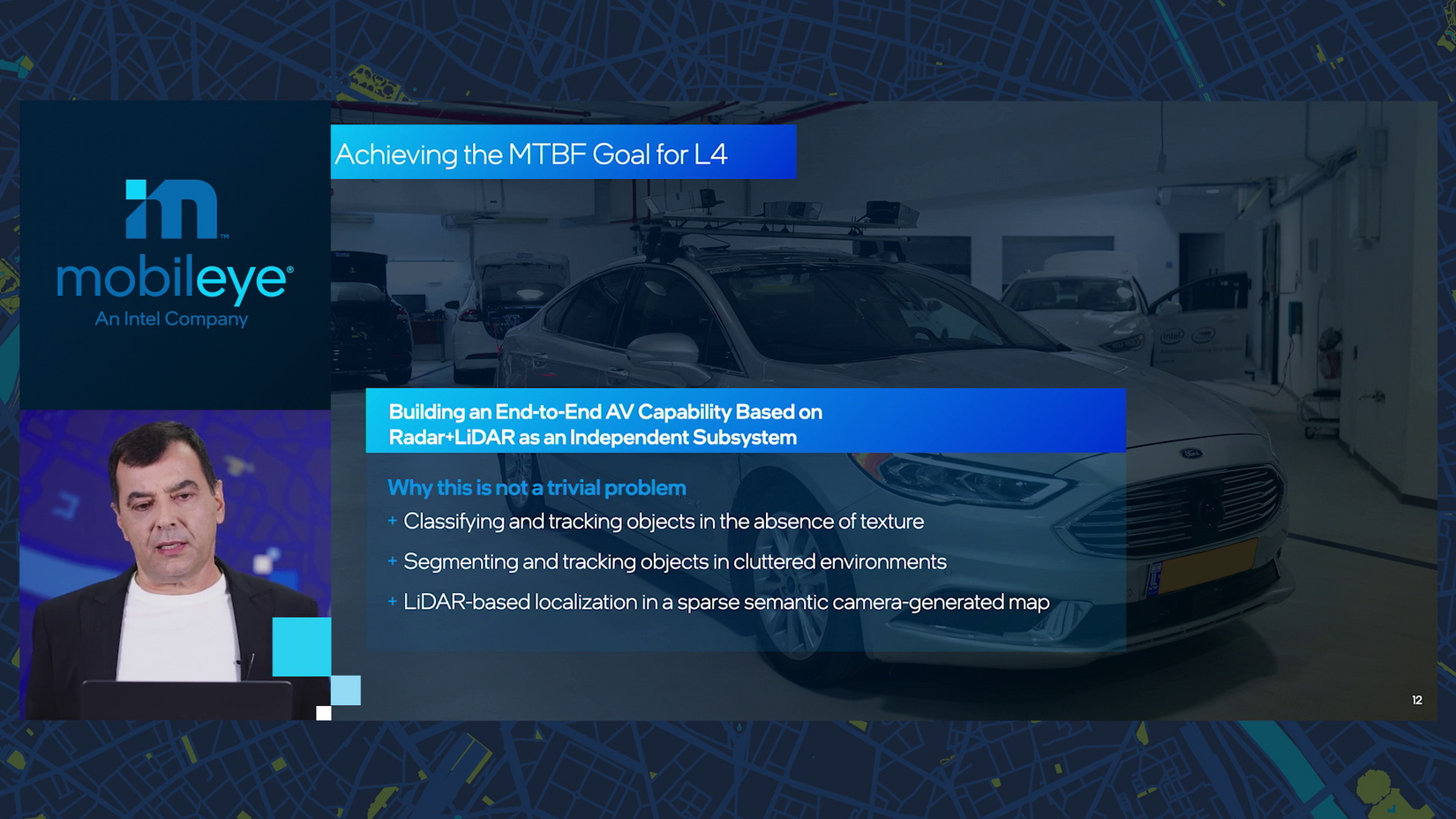

10:16 a.m.: Sub-system two (hat-tip to Daniel Kahneman, another famous Israeli professor) uses only lidar and radar.

While most other AV companies rely on the combination of cameras and lidar, “we excluded cameras from this subsystem.” This “makes life a bit more difficult.”

But the result is that much higher mean time between failures needed to remove the driver. “Everything is done at the same performance level as the camera subsystem that we have been showing, and here there are no cameras at all.”

10:18 a.m.: Time for the first deep dive: maps.

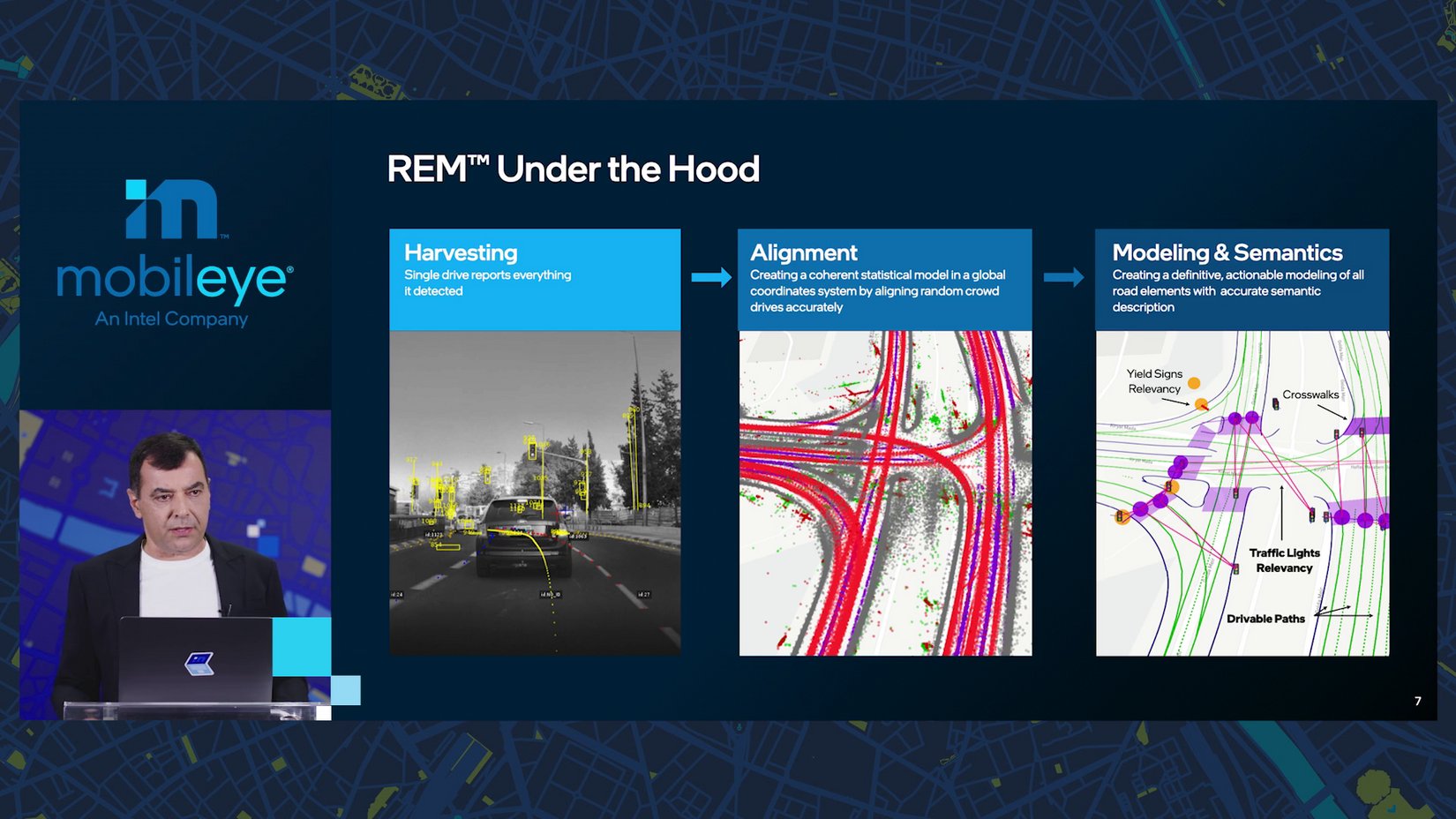

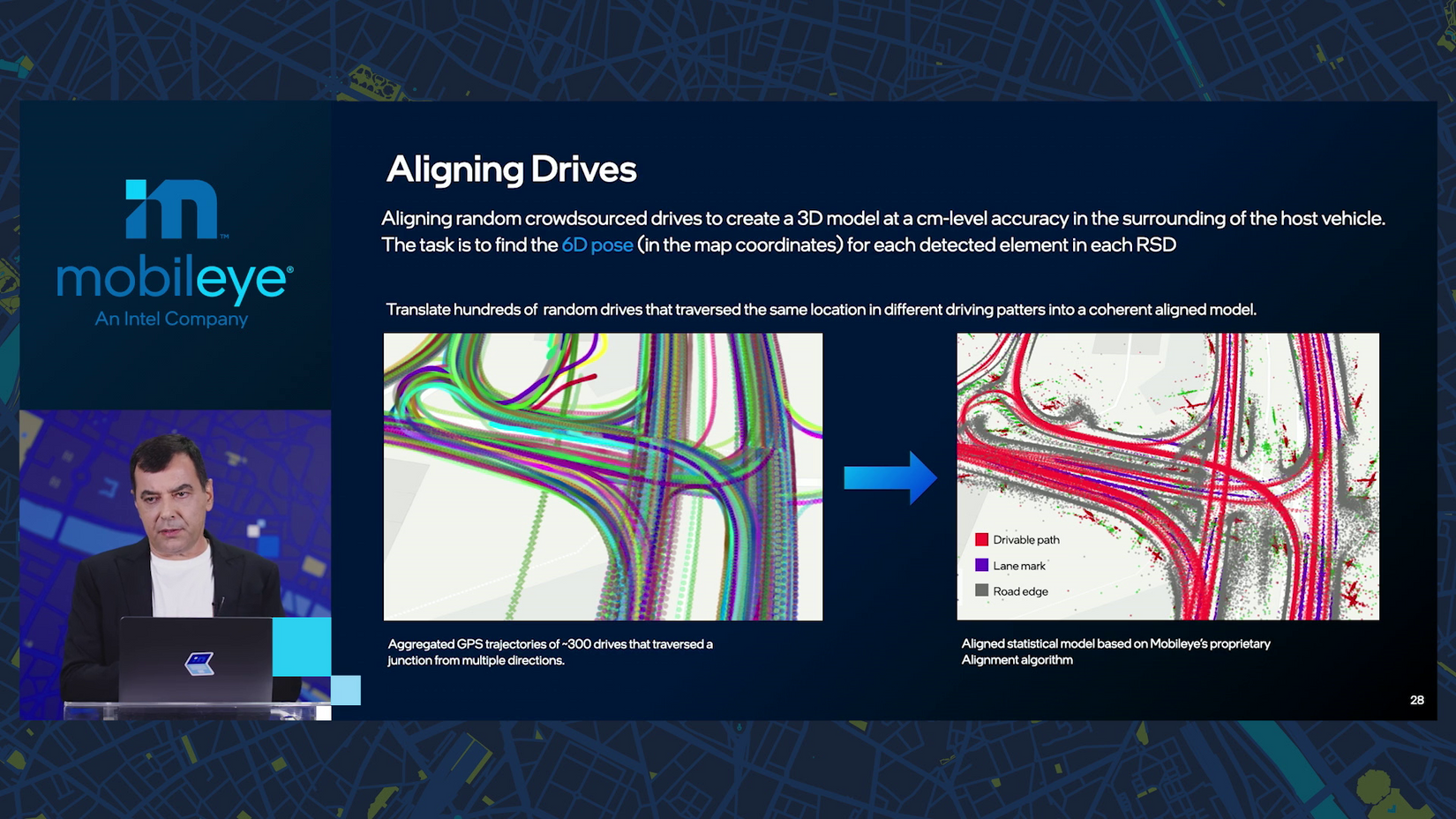

A quick history: in 2015, Mobileye announced crowdsourced mapping. In 2018, cars from BMW, Nissan and Volkswagen started sending data — not images but privacy-preserving “snippets, lanes, landmarks” that only add up to 10 kilobytes per kilometer.

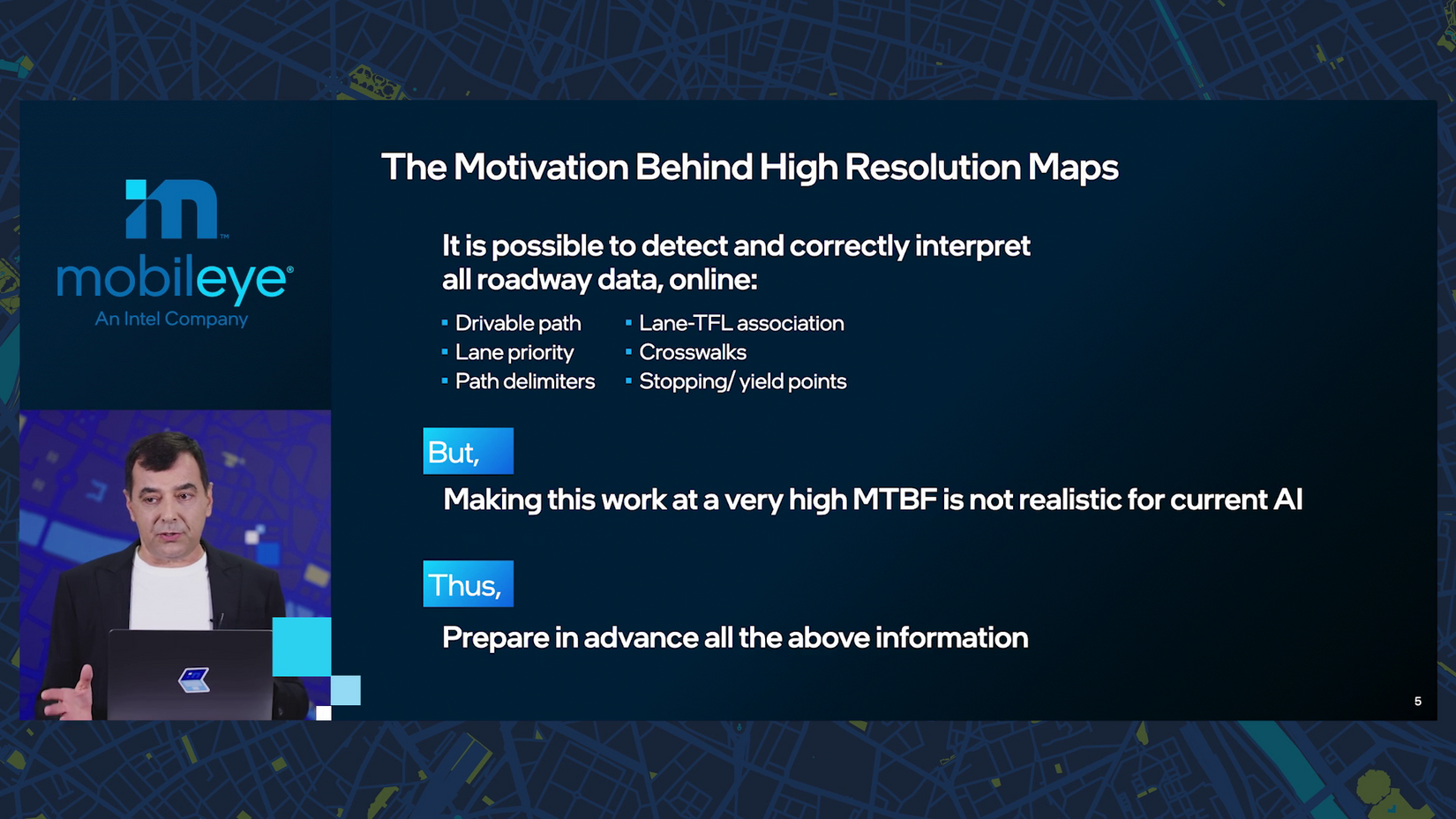

10:20 a.m.: Why are these ultra-detailed maps needed? “The current state of AI can detect road users at fidelity approaching human perception,” Amnon says. But doing that while also understanding the many complexities of the driving environment (lanes, crosswalks, signals, signs, curves, right-of-way, turns)? “Right now it’s not realistic.”

The big challenge with building AV maps is scale. Covering a city or two, “that’s fine,” but supporting millions of cars with driving assist means “they need to drive everywhere.”

10:22 a.m.: This will be on the test: Amnon predicts it’ll be “2025, in which a self-driving system can reach the performance and the cost level for consumer cars.” Adjust your calendar accordingly.

10:24 a.m.: Maps also need to be fresh — today Mobileye’s maps are updated monthly but the eventual target is “a matter of minutes.”

And maps need “centimeter-level accuracy.”

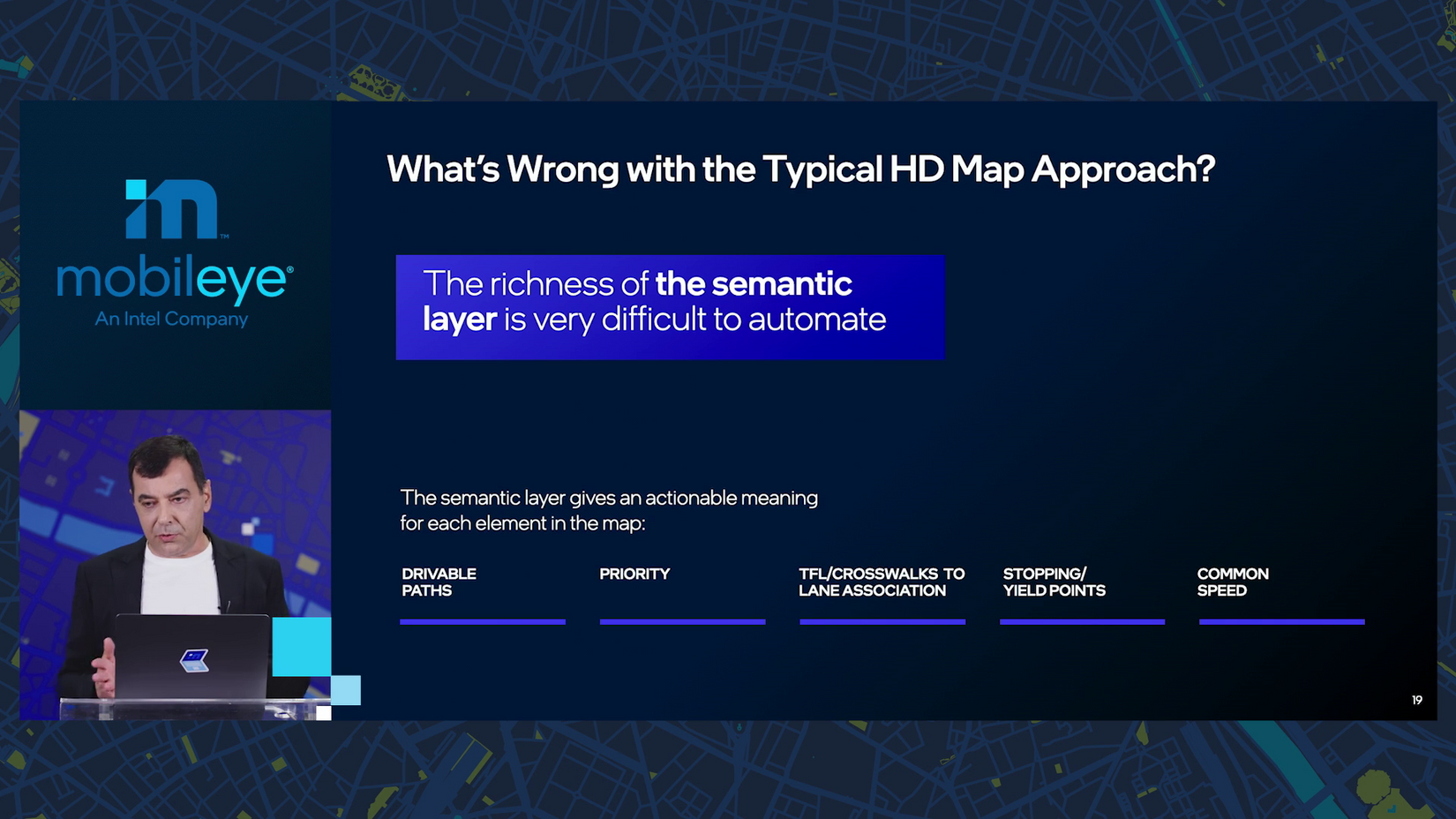

10:25 a.m.: The problem with maps built by driving specialized cars with huge 360-degree lidar sensors and cameras? The precision is overkill and the semantics is absent.

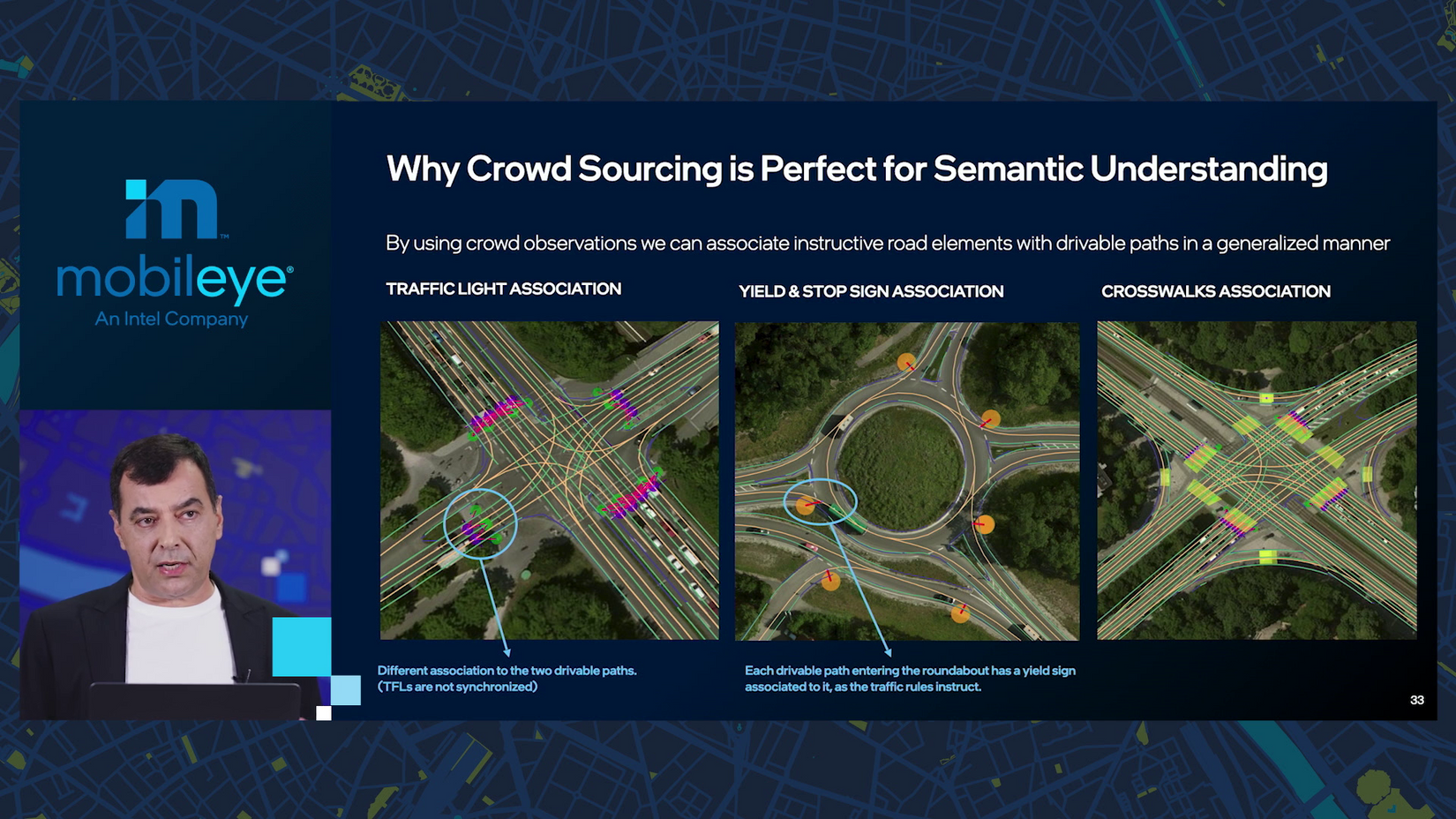

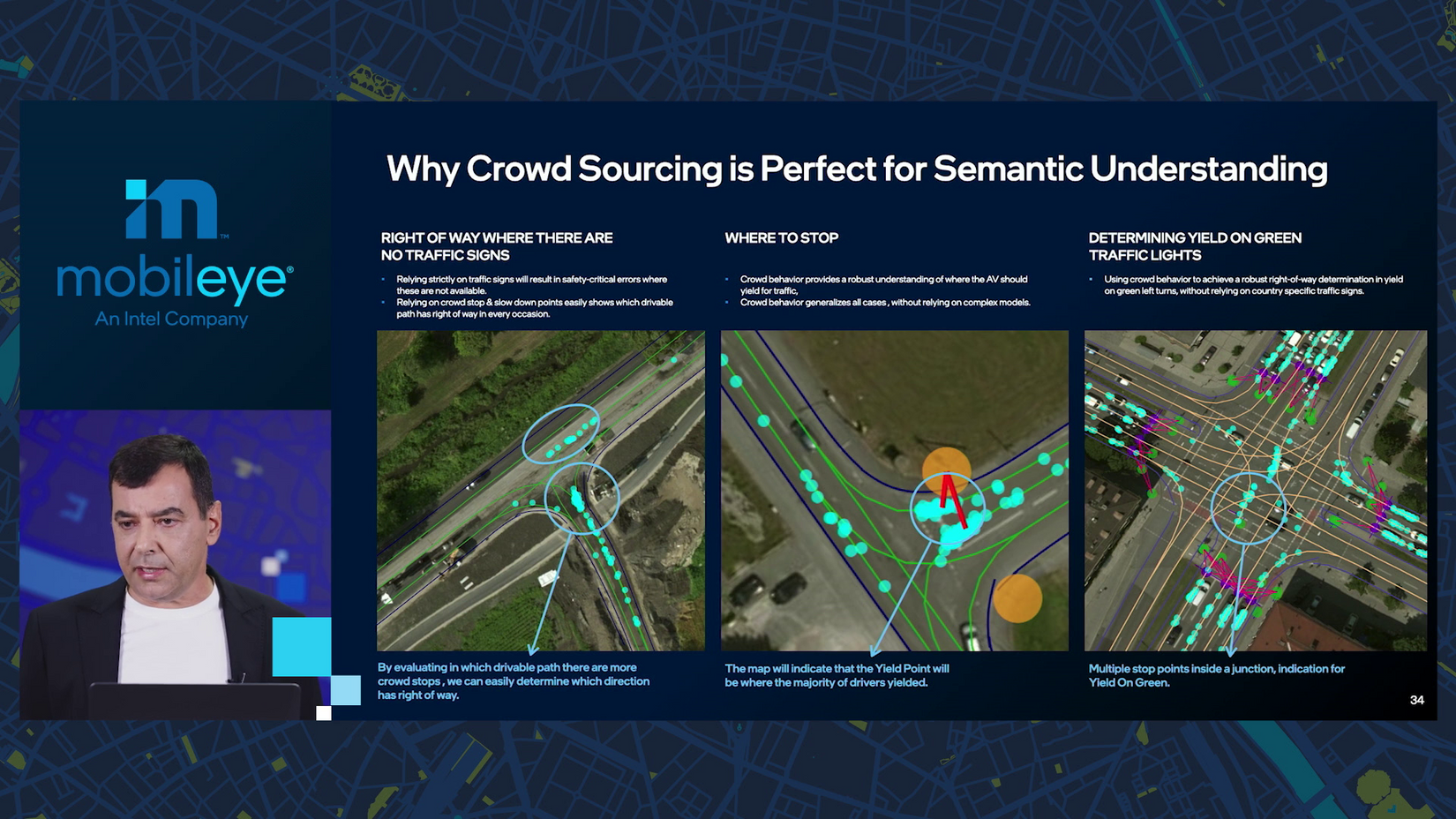

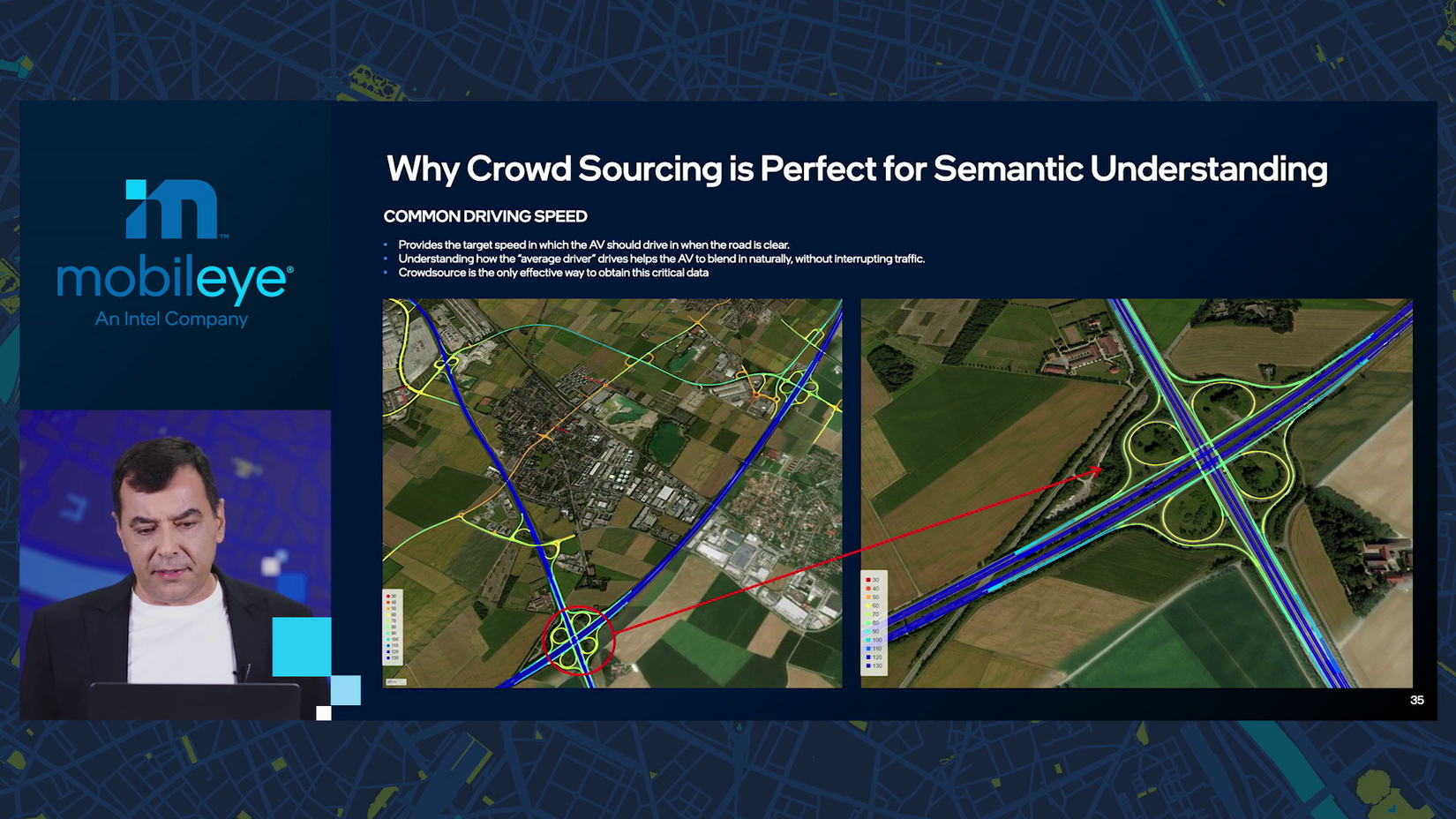

Semantics? “We divide semantics into these five layers: drivable paths, lane priority, association between traffic light and crosswalks to lane association, stopping and yield points and common speed.”

Common speed is how fast all the humans are going, “in order to drive in a way that doesn’t obstruct traffic.” Or drive people crazy.

10:26 a.m.: “Now these semantic layers are very, very difficult to automate. This is where the bulk of the non-scalability of building high-definition maps comes into.”

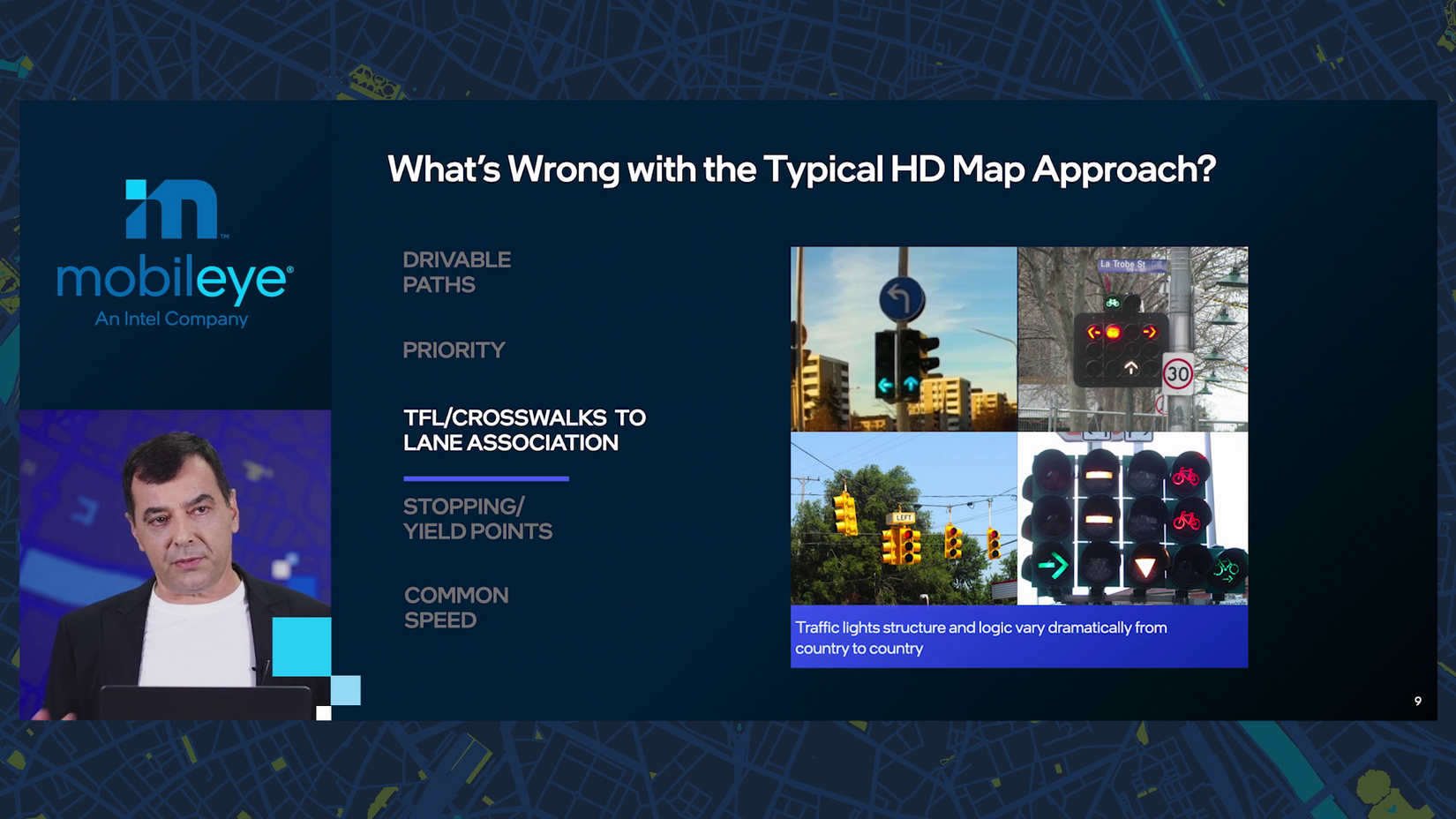

10:27 a.m.: In short: “it’s a zoo out there.”

10:29 a.m.: And that’s why you need not a “high definition map,” but rather an “AV map” — with local accuracy and detailed semantic features.

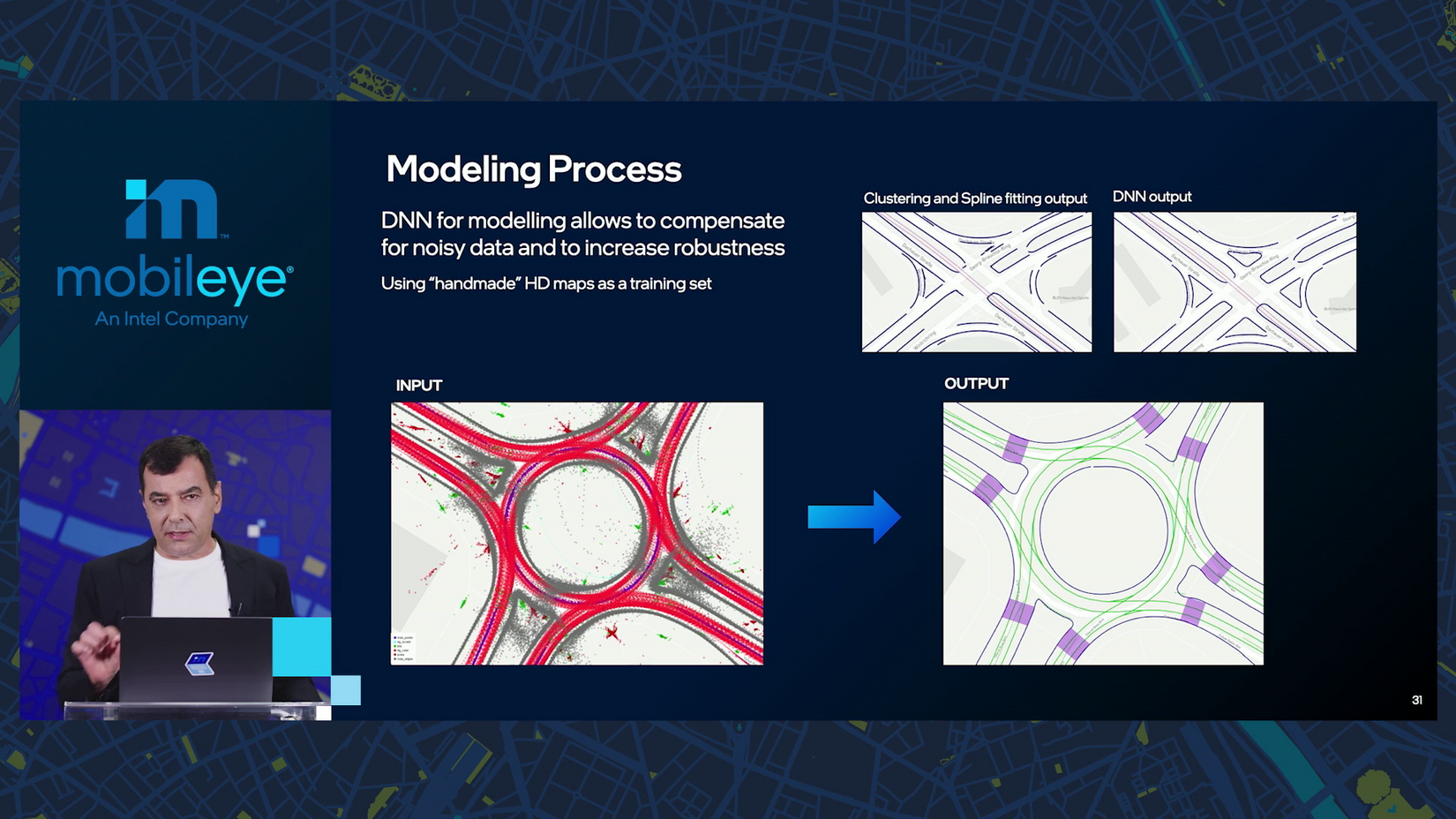

10:30 a.m.: The steps to build an AV map begin with “harvesting” what Mobileye calls “road segmented data.” It’s sent to the cloud for the “automatic map creation that we have been working on for the past five years.” (This is the milestone Amnon started the drumroll for earlier.)

And finally there’s localization: where is the car, right now, on the map?

10:31 a.m.: Amnon walks through how data is combined from hundreds or thousands of cars to identify drivable paths, signs and landmarks.

10:33 a.m.: What if there are no lane markings? The crowdsourced data reveals details like multi-path unmarked lanes and, critically, the road edge.

10:34 a.m.: A big busy roundabout with several pedestrian crossings? It makes me sweat but with enough data, Mobileye gets “very close to perfection,” Amnon assures.

10:35 a.m.: Which light goes with which lane?! Still not a problem.

10:37 a.m.: Amnon is cruising through a number of scenarios where expected driving behavior is set not by signs or markings but rather “can be inferred from the crowd.” It’s accurate and my goodness, must’ve saved years of Mobileye engineers’ time.

10:38 a.m.: I’ve joked that AVs should have passenger-chosen driving modes, ranging possibly from “Driving Miss Daisy” to “Bullitt.” What’s great about Mobileye’s maps, though, is they include how fast people normally go on every stretch of local road, “very important in order to create a good and smooth driving experience.”
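
How might a “common speed” fall out of crowdsourced data? The talk doesn’t say, so here is one guess at the simplest possible version: group the speeds that cars report by road segment and take the median, which shrugs off the occasional speed demon. The segment IDs, the sample readings and the choice of the median are all my assumptions.

```python
from collections import defaultdict
from statistics import median

def common_speed_by_segment(observations):
    """Map each road segment to the median of the speeds observed on it.

    observations: iterable of (segment_id, speed_kph) pairs.
    """
    speeds_by_segment = defaultdict(list)
    for segment_id, speed_kph in observations:
        speeds_by_segment[segment_id].append(speed_kph)
    return {seg: median(speeds) for seg, speeds in speeds_by_segment.items()}

# Hypothetical readings; the 95 km/h outlier does not drag the median up.
sample = [("seg-a", 48), ("seg-a", 52), ("seg-a", 95),
          ("seg-b", 30), ("seg-b", 34)]
common = common_speed_by_segment(sample)
print(common)
```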

10:39 a.m.: The numbers are bonkers: “we have about eight million kilometers of road being sent every day.”

“In 2024, it’s going to be one billion kilometers of roads being sent daily, so we are really on our way to map the entire planet.”
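
Quick napkin math on what that means in bytes, using the roughly 10 kilobytes per kilometer quoted earlier. This assumes that figure holds at both volumes and uses decimal units (1 kB = 1,000 bytes); neither assumption comes from the talk.

```python
BYTES_PER_KM = 10_000  # about 10 kB per km, as quoted earlier; decimal units assumed

def daily_upload_bytes(km_per_day: int) -> int:
    """Total bytes harvested per day for a given daily distance."""
    return km_per_day * BYTES_PER_KM

today = daily_upload_bytes(8_000_000)            # about eight million km per day
projected = daily_upload_bytes(1_000_000_000)    # one billion km per day

print(f"{today / 1e9:.0f} GB/day")       # 80 GB/day
print(f"{projected / 1e12:.0f} TB/day")  # 10 TB/day
```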

10:40 a.m.: Deep dive part 2! Radar and lidar.

“Why do we think that we need to get into development of radars and lidars? First let me explain that.”

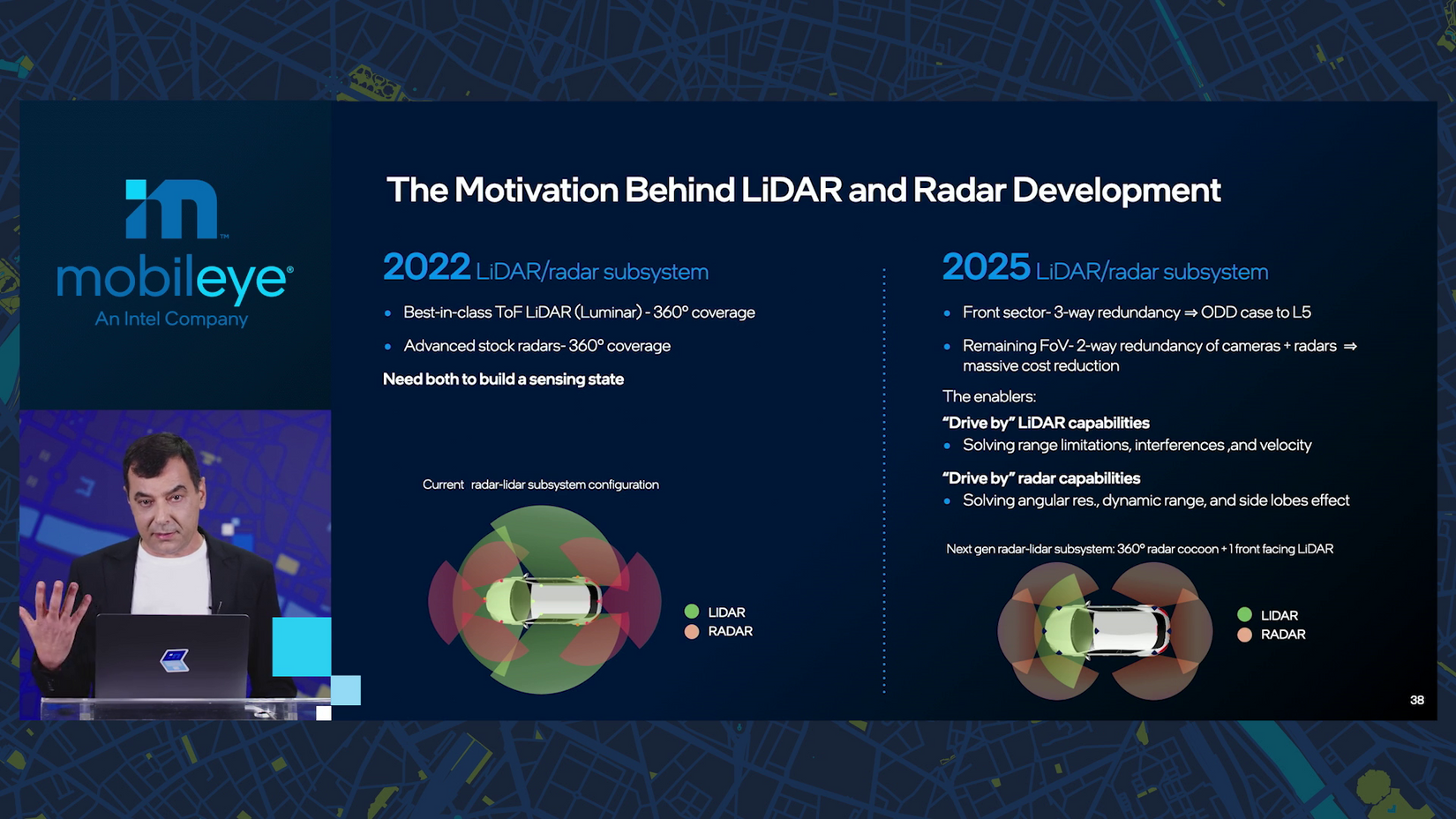

For 2022, “we are all set,” Amnon says, to use Luminar lidar and “stock radars” that are sufficient for end-to-end driving with a high MTBF (speaking of MTB, Amnon does enjoy mountain bikes and motorcycles — he’s a fan of wheels of all shapes and sizes).

10:41 a.m.: For 2025, though, Mobileye wants lower costs, to reach “this level of consumer AV,” and more capability, “closer to level 5” on the six-level SAE driving automation scale. “It’s contradictory.”

The goal is not two-way redundancy but three, where radar and lidar can each function as standalone systems like cameras. But “radar today doesn’t have the resolution or the dynamic range.”

10:42 a.m.: We believe radars need to evolve to “imaging radar” that could stand alone, Amnon says. “This is very very bold thinking.”

10:43 a.m.: Radar is 10 times cheaper than lidar. (Point of reference: the first iPhone with a lidar sensor is the 12 Pro just introduced in Q4 of 2020.)

So, we want to drastically reduce the cost for lidars and “push the envelope much further with radars.” Conveniently, “Intel has the know-how to build cutting-edge radars and lidars.”

10:44 a.m.: Time for a quick seminar on radar. I studied engineering but it was still handy for me to dig up a reminder: radar and lidar are both used to identify objects. Radar uses radio waves to do so, lidar uses infrared light from lasers. Bats and dolphins use sound — that’s sonar.

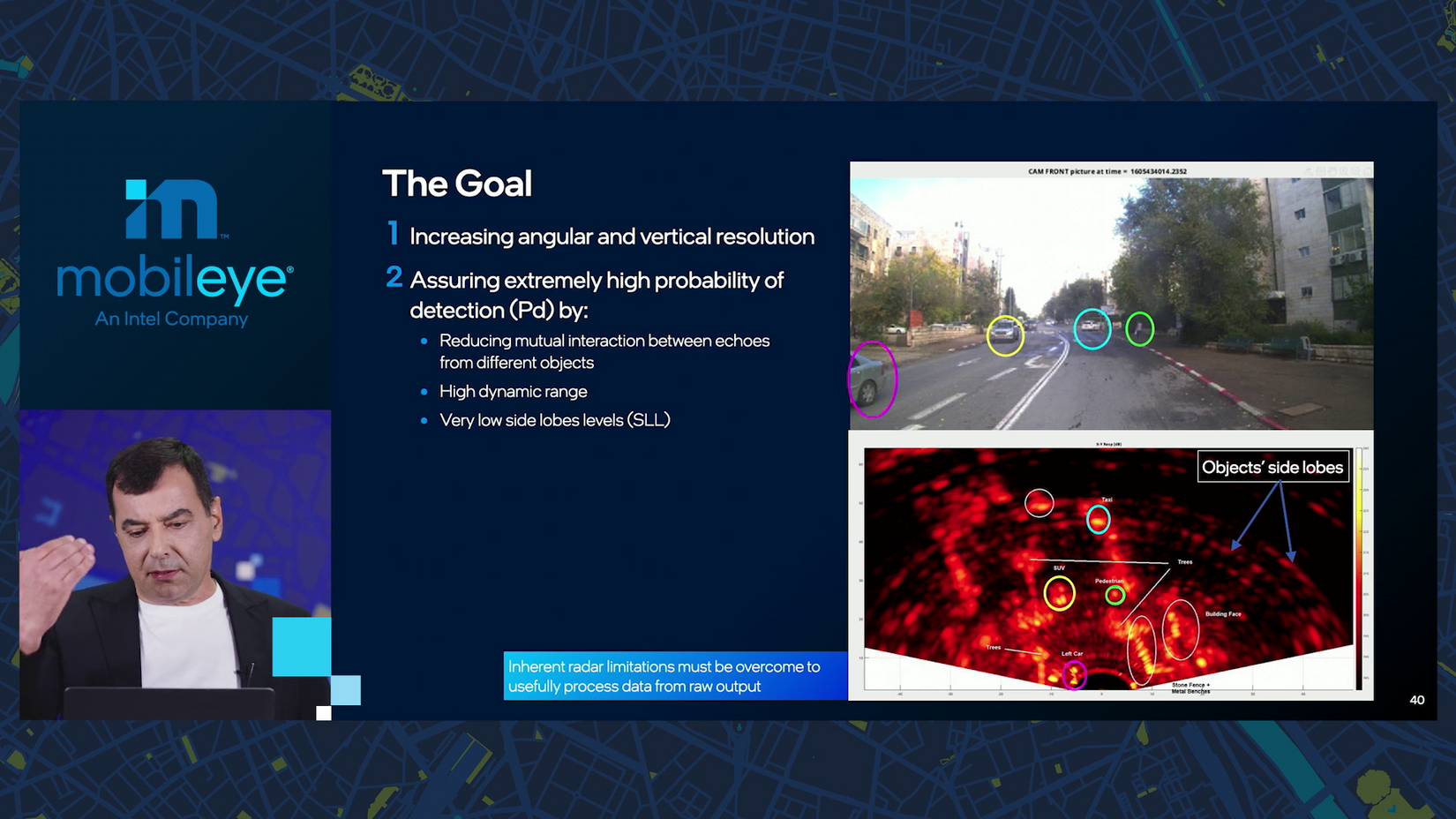

What the cars use is “software-defined imaging radar.” The signal it receives from an object is not a set of hard points as from a camera or lidar but rather “all over the place.” Separating noise from the actual object “is very, very tricky.”

The echoes, or signals reflected back, contain noise called “side lobes.” A better radar should have both more resolution (able to detect small objects) and more dynamic range through higher “side lobe level” (more accuracy, through increased “probability of detection”). And thus, it should produce an image as useful as a lidar or camera.

Yep, also on the test — stay frosty!

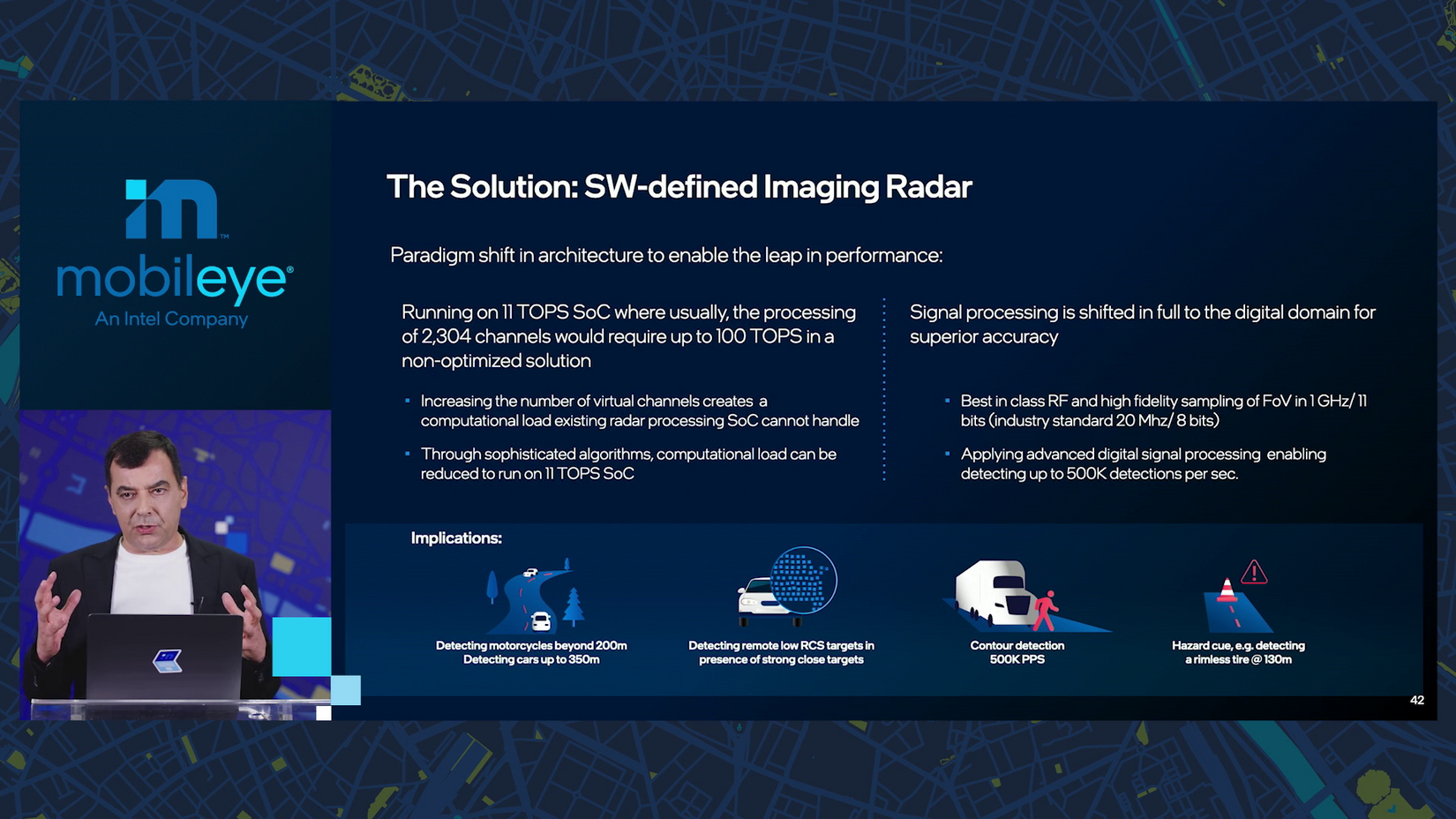

10:45 a.m.: Current radars have 192 virtual channels thanks to 12 by 16 transmitters and receivers. The goal is “much more massive”: 2,304 virtual channels based on 48 by 48 transmitters and receivers. This brings “significant challenges…computational complexity increases a lot.”
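
The channel counts check out if you treat each transmitter-receiver pair as one virtual channel, which is the usual way of counting for MIMO radar. A tiny sketch of the arithmetic (the function name is mine):

```python
def virtual_channels(transmitters: int, receivers: int) -> int:
    """Each transmitter/receiver pair contributes one virtual channel."""
    return transmitters * receivers

current = virtual_channels(12, 16)
target = virtual_channels(48, 48)
print(current, target, target / current)  # 192 2304 12.0
```

That is a twelvefold jump in channels to process.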

10:46 a.m.: For the dynamic range, side lobe levels should rise from 25 dBc to 40. The scale is “logarithmic, so it’s night and day, basically,” to make that jump.
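
To put a number on “night and day”: a difference in decibels converts to a power ratio as 10^(dB/10). Here is a minimal sketch, assuming the 25 and 40 dBc figures are power ratios relative to the main signal, which is the usual meaning of dBc.

```python
def db_to_power_ratio(db: float) -> float:
    """Convert a decibel difference into a linear power ratio."""
    return 10 ** (db / 10)

improvement_db = 40 - 25
ratio = db_to_power_ratio(improvement_db)
print(f"{improvement_db} dB is about {ratio:.1f}x in power")  # 15 dB is about 31.6x in power
```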

10:47 a.m.: What can better radars do? Let’s see what they can see.

As a motorcycle rider myself, this example puts a smile under my helmet.

“We want our radar to be able to pick up this motorcycle, even though there are many, many more powerful targets that have a much higher RCS signal.” RCS is “radar cross section.”

10:48 a.m.: The higher sensitivity means a lot more data to process, not suitable for “a brute force, naïve way.” Advanced digital filters, however, can be “more accurate and powerful than what you can do in an analog domain.”

10:49 a.m.: In another example, the better radar can identify pedestrians in a scene where “visually, you can hardly see them.”

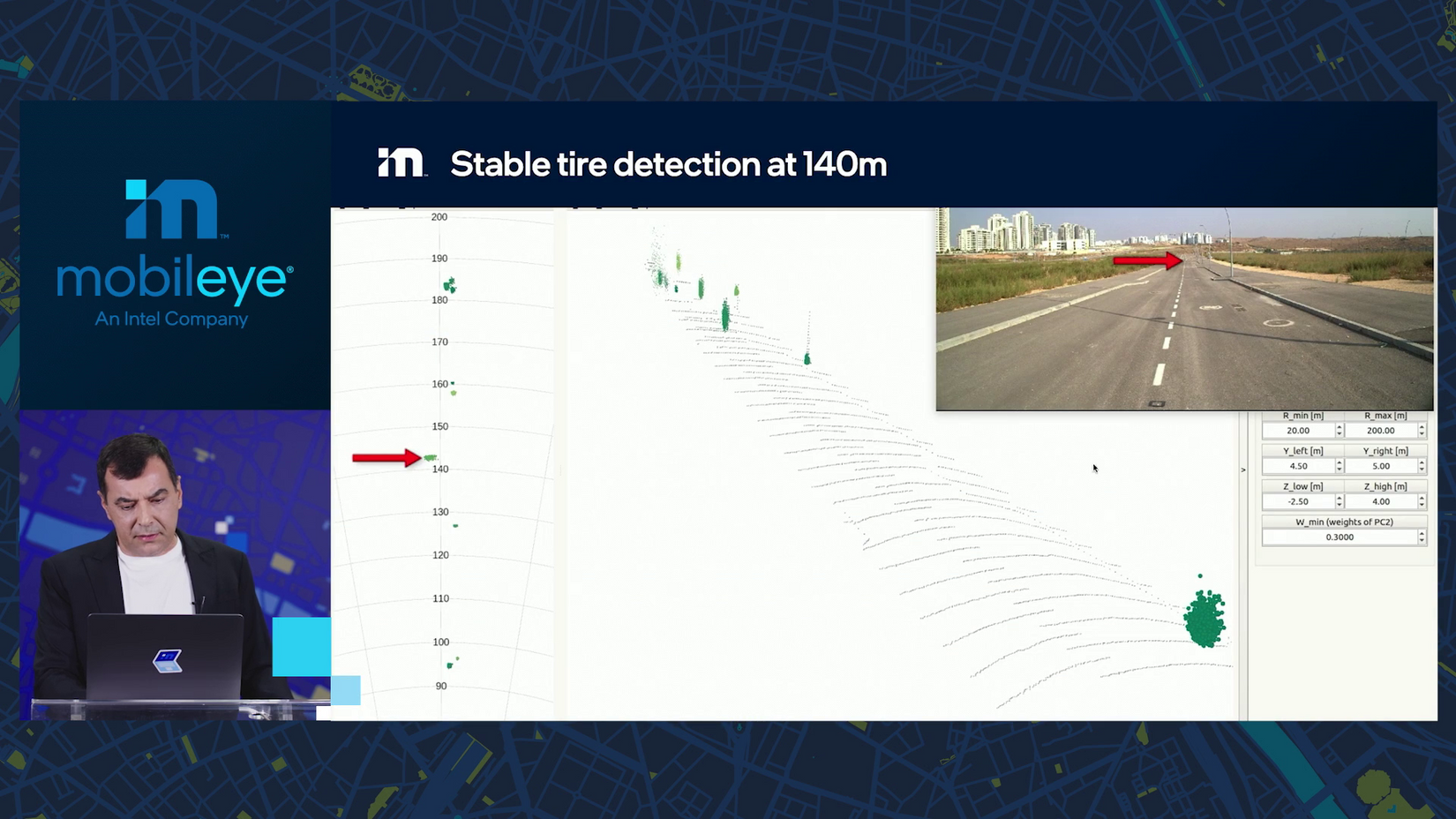

10:50 a.m.: Here the radar detects an old tire on the road, 140 meters away. “We want the radars also to be able to detect hazards, and hazards could be low and small and far away.”

10:51 a.m.: The timeline for these radars? “2024, 2025 in terms of standard production.”

10:52 a.m.: Sensor seminar number two: lidar.

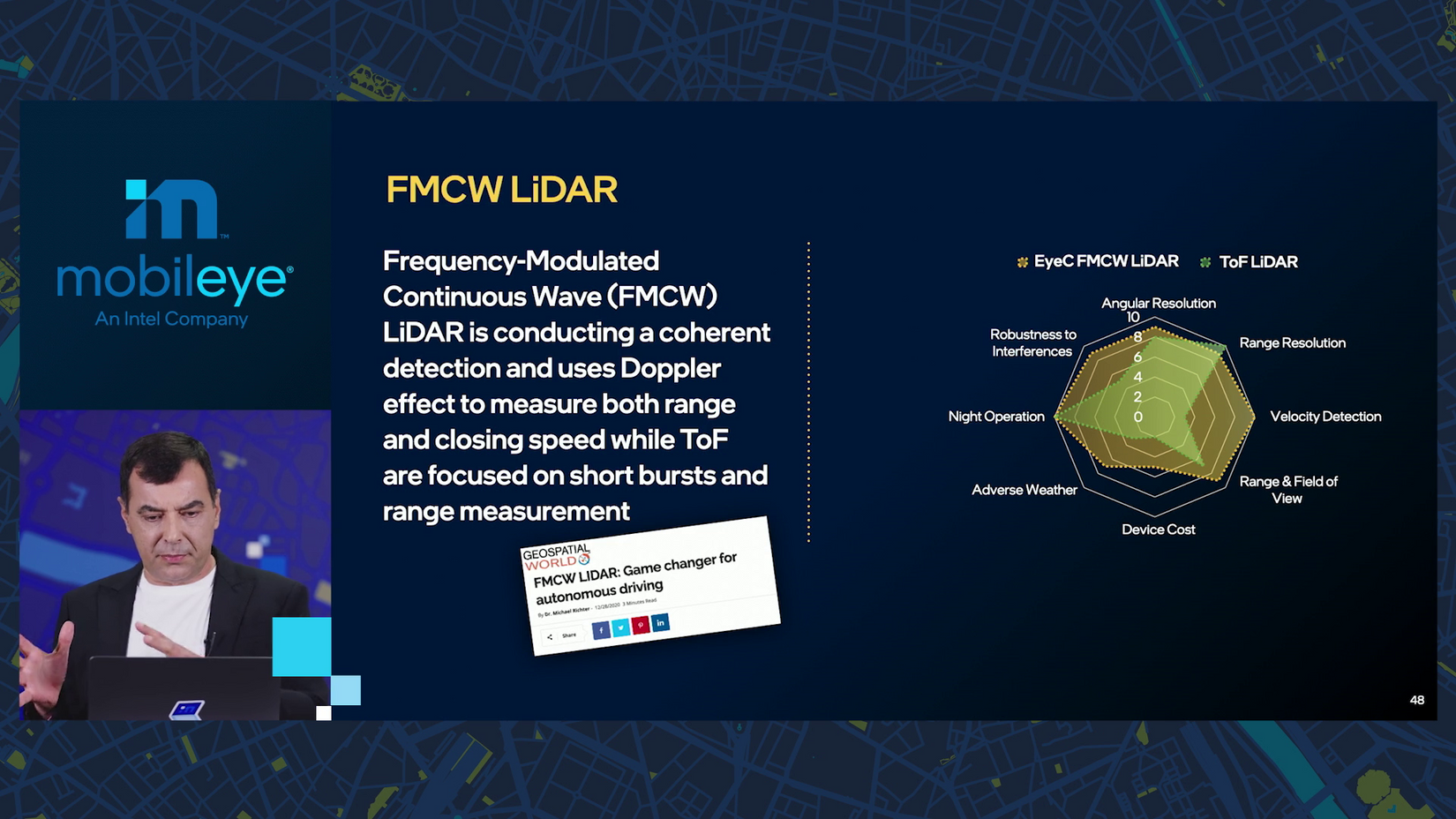

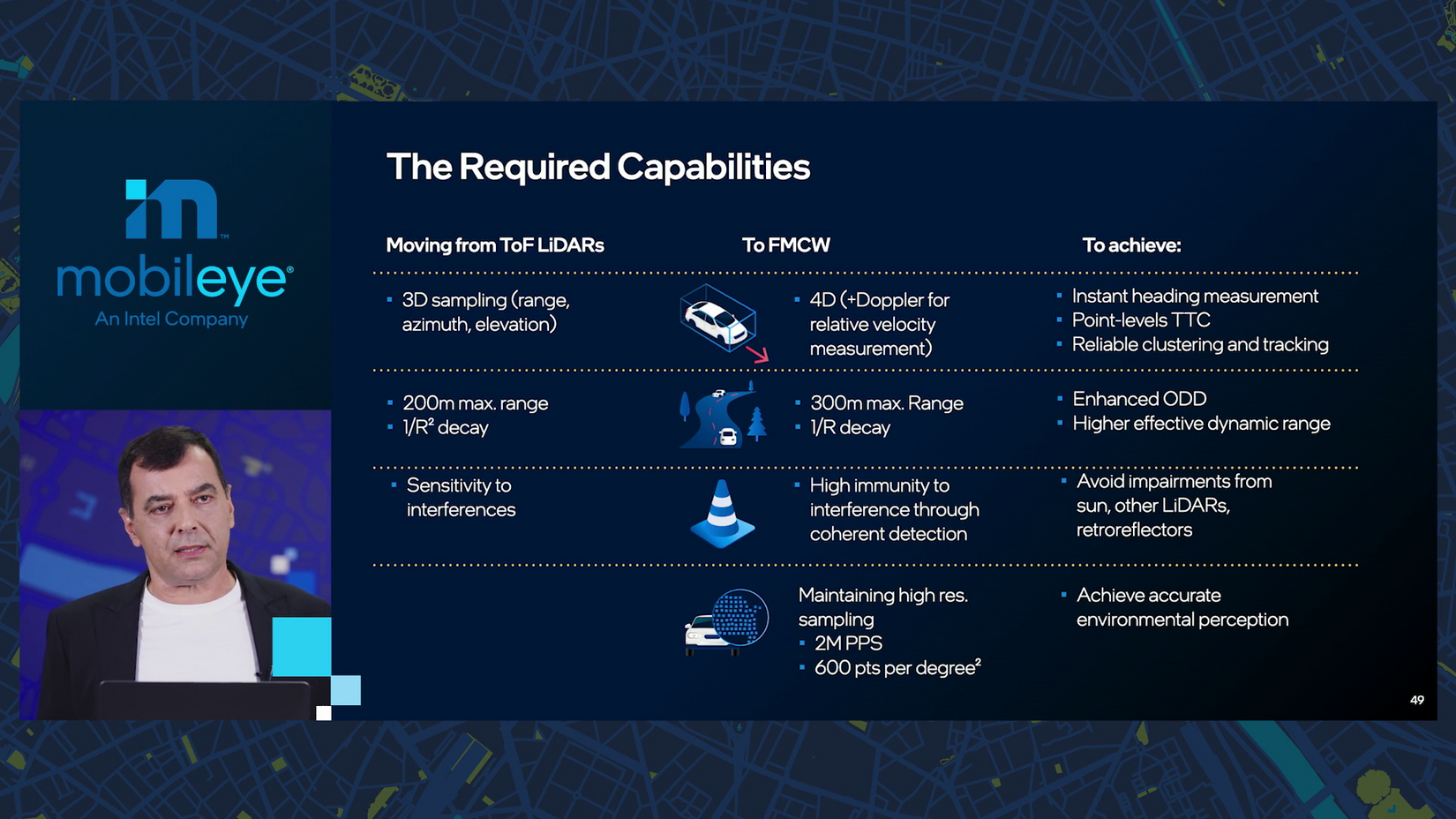

Current lidars use a method called “time of flight,” providing 3D data: objects’ size, shape and distance.
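
The time-of-flight idea fits in one line: the pulse travels out and back, so the distance is the speed of light times the round-trip time, divided by two. Here is a minimal sketch; the one-microsecond example is made up for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the meter

def time_of_flight_distance_m(round_trip_s: float) -> float:
    """Distance to the target given the round-trip time of a laser pulse."""
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0

# Hypothetical echo arriving one microsecond after the pulse left.
distance = time_of_flight_distance_m(1e-6)
print(f"{distance:.1f} m")  # 149.9 m
```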

But a new kind of lidar, frequency-modulated continuous wave (FMCW), is “the next frontier.”

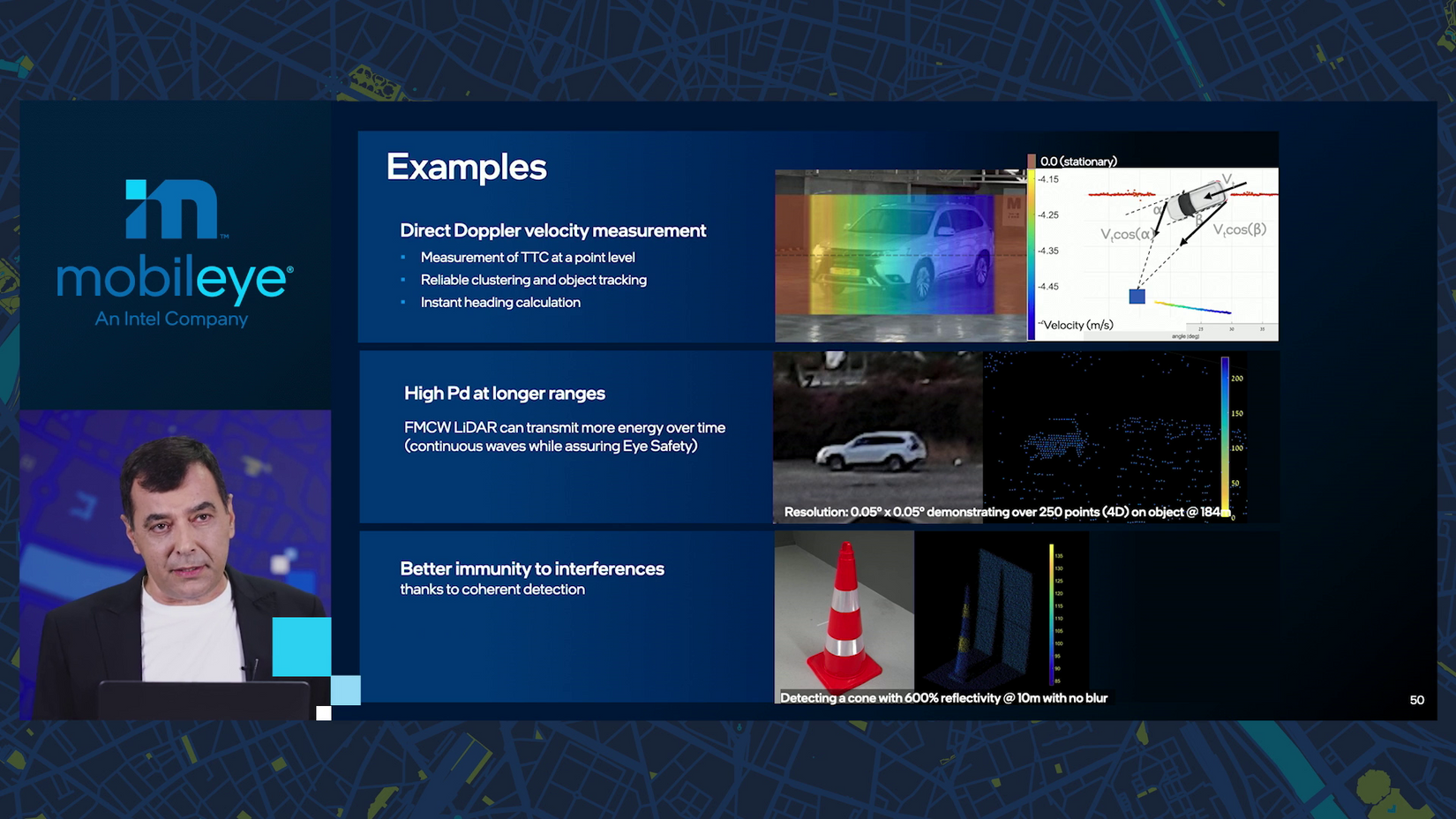

10:53 a.m.: To compact a lot of detail, FMCW lidar has many advantages and it’s also 4D — it can capture the velocity of objects.

10:54 a.m.: Amnon shows how FMCW lidar captures velocity, has longer range and is more resilient.
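
How does a lidar measure velocity? The physics behind it is the Doppler effect: light bounced off a target moving along the beam comes back shifted in frequency by twice the radial speed divided by the wavelength. Here is a minimal sketch of that relation. The 1550 nm wavelength is my assumption, since the talk does not state one, and the 30 m/s target is made up.

```python
import math

def doppler_shift_hz(radial_velocity_mps: float, wavelength_m: float) -> float:
    """Frequency shift of light reflected off a target moving along the beam."""
    return 2.0 * radial_velocity_mps / wavelength_m

def radial_velocity_mps(doppler_hz: float, wavelength_m: float) -> float:
    """Invert the Doppler relation to recover the target's radial speed."""
    return doppler_hz * wavelength_m / 2.0

WAVELENGTH_M = 1550e-9  # assumed laser wavelength, not from the talk

shift = doppler_shift_hz(30.0, WAVELENGTH_M)  # target closing at 30 m/s
print(f"{shift / 1e6:.1f} MHz")  # 38.7 MHz
recovered = radial_velocity_mps(shift, WAVELENGTH_M)
```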

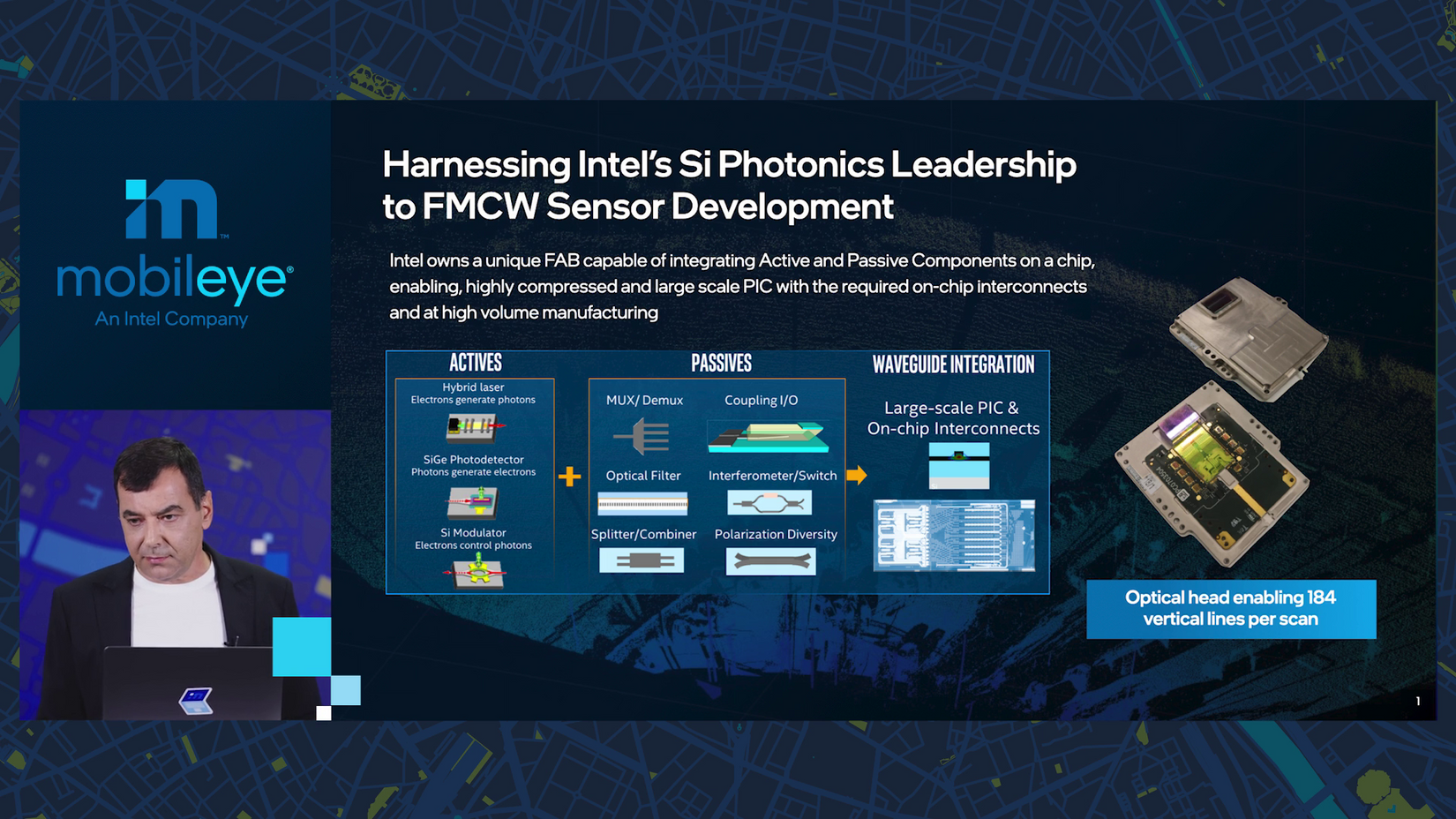

10:55 a.m.: What does Intel bring to lidar? Silicon photonics. Intel is “able to put active and passive laser elements on a chip, and this is really game-changing.”

Amnon is showing a photonic integrated circuit with “184 vertical lines, and then those vertical lines are moved through optics.”

The ability to fabricate this kind of chip is “very rare,” Amnon says. “This gives Intel a significant advantage in building these lidars.”

10:56 a.m.: And that’s that for deep dives. “I’m now going back to update mode.”

10:57 a.m.: The RSS safety model is gaining momentum worldwide. It’s being applied to and influencing standards with IEEE, ISO, the U.S. Dept. of Transportation and the U.K. Law Commission.

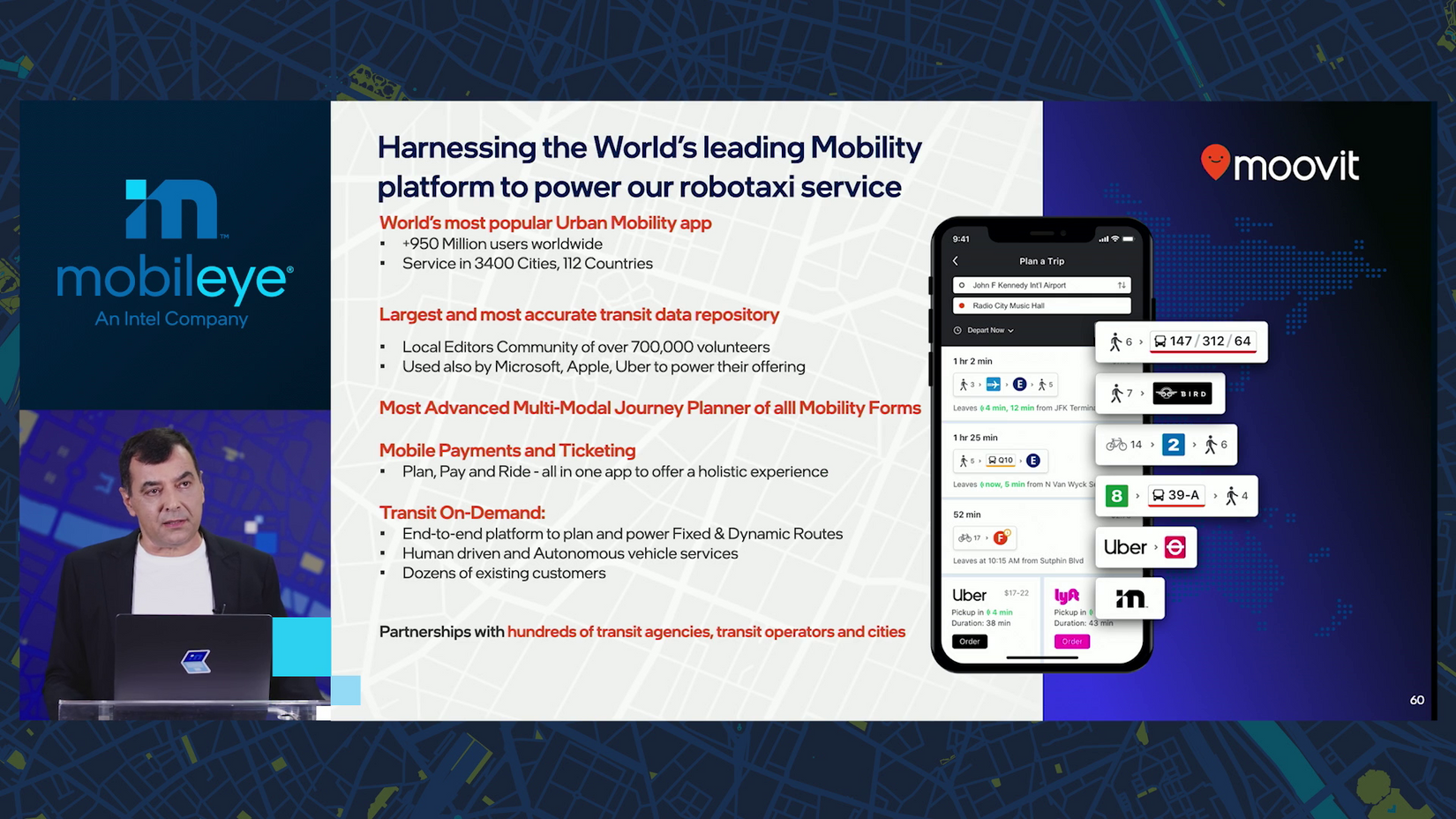

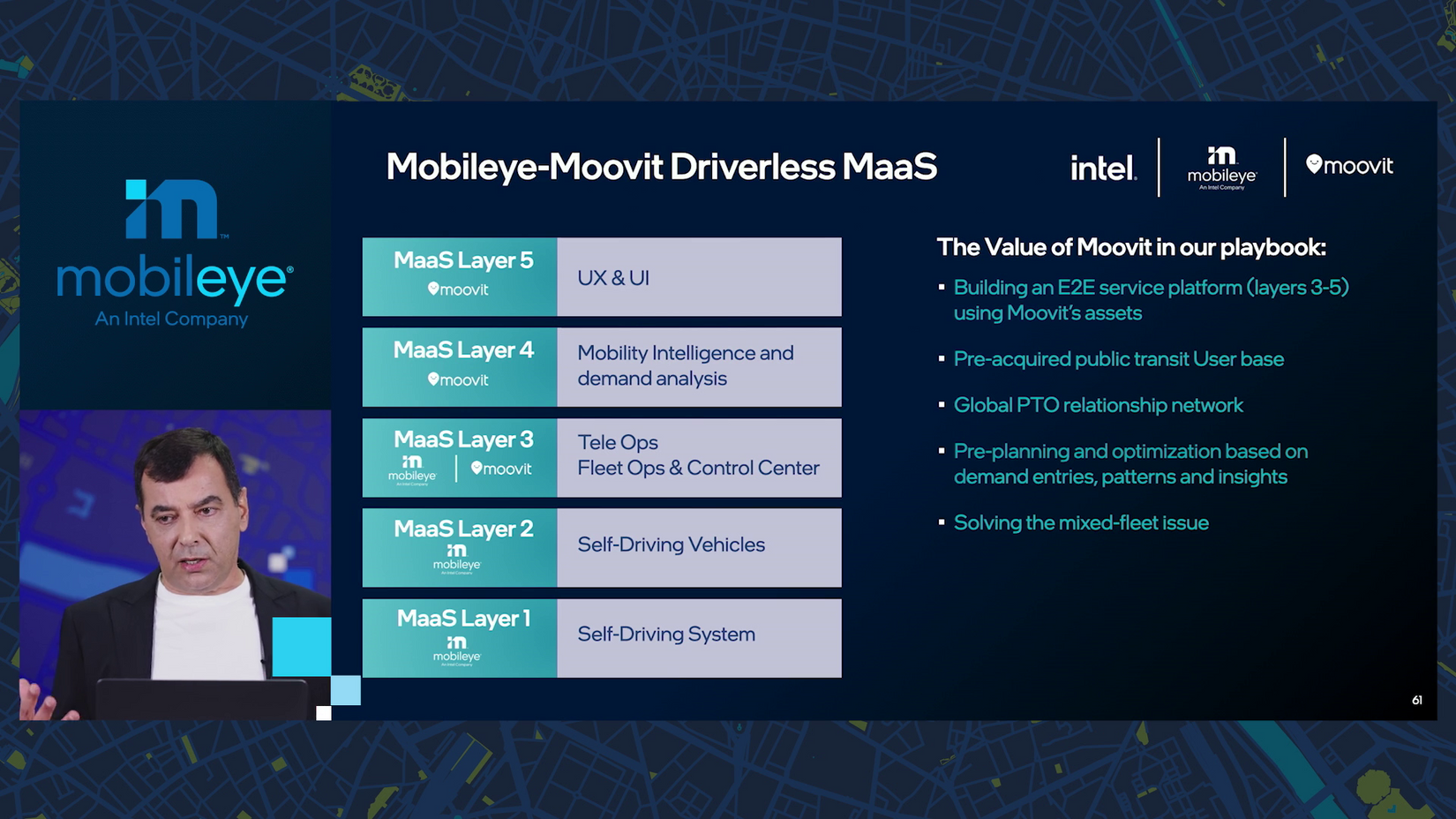

10:58 a.m.: Mobileye is building not only vehicle- and ride-as-a-service, but mobility-as-a-service (MAAS), too. That’s where Moovit comes in.

10:59 a.m.: Moovit is “the biggest trip planner,” with 950 million users active in 3,400 cities and 112 countries. It enables MAAS by adding tele ops, fleet optimization, central control, mobility intelligence and then the user experience and payment.

11:00 a.m.: Almost there, don’t pack your stuff yet!

The first deployment will be in Israel in 2022. Following close will be France, where testing is starting next month. In Daegu City, South Korea, testing begins mid-year. And in collaboration with the Willer Group in Japan, the goal is a 2023 launch in Osaka.

“And we will expand more.”

11:01 a.m.: “I think this is all what I had to say.”

Amnon’s last word: “We would like 2025 to be the year in which we can start giving the experience of people buying a car and sitting in the back seat whenever they want and have the car drive everywhere — not just in one particular location.”

11:02 a.m.: Class dismissed! Thank you for following along — I hope you learned as much as I did.
