July 31, 2025

Compound AI: The framework powering scalable autonomy

We’re in the midst of an AI revolution, and its impact on driving is profound. As these systems grow more capable, the technology that underpins them becomes the focus of intense competition.

When it comes to AI, Mobileye takes a different approach, grounded in system design, real-world validation, and true scalability. This is embodied in our Compound AI architecture: a blend of flexible end-to-end learning and purpose-built algorithms, designed to support safe and scalable deployment. It is more than an architecture; it is a guiding framework that we believe powers our advanced ADAS and AV solutions today.

In this blog, we will break down what Compound AI at Mobileye means and how it serves as the foundation for scalable autonomy.

What is Compound AI for autonomous driving?

Compound AI refers to the integration of multiple specialized, purpose-built AI models working together to solve complex tasks that may be too challenging or inefficient for a single model to handle alone. Instead of relying on a monolithic, general-purpose AI, Compound AI takes a modular and layered approach. Each component is optimized for a specific sub-task, and the system coordinates their outputs to produce a cohesive, intelligent result.

Many autonomous driving systems today still rely on a single, end-to-end model, treating autonomy as one massive learning task. In this approach, the system attempts to learn everything from visual inputs to build a driving policy, or “photons to control.”

However, the most advanced AI results increasingly come from compound systems. These architectures are made up of multiple specialized components, each optimized for a specific function. As Berkeley AI Research (BAIR) notes, “state-of-the-art AI results are increasingly obtained by compound systems with multiple components.”

In the case of Mobileye, Compound AI follows the same principle. It breaks autonomy into clearly defined components such as sensing, planning, and acting, each corresponding to a dedicated AI model (or models).
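To make the decomposition concrete, here is a minimal Python sketch of a sensing, planning, and acting pipeline in which each stage sits behind its own interface and can be swapped independently. Every name in it (`Scene`, `Plan`, `TimeGapPlanner`, `drive_step`, the 2-second time gap) is a hypothetical stand-in for illustration and does not describe Mobileye's actual software.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Scene:
    """Hypothetical output of the sensing stage."""
    lead_gap_m: float      # distance to the vehicle ahead, in metres
    ego_speed_mps: float   # ego speed, in metres per second


@dataclass(frozen=True)
class Plan:
    """Hypothetical output of the planning stage."""
    target_accel_mps2: float


class Sensing(Protocol):
    def perceive(self, raw: dict) -> Scene: ...


class Planning(Protocol):
    def plan(self, scene: Scene) -> Plan: ...


class Acting(Protocol):
    def act(self, plan: Plan) -> dict: ...


class SimpleSensing:
    """Toy sensing stage: copies pre-measured values into a Scene."""
    def perceive(self, raw: dict) -> Scene:
        return Scene(lead_gap_m=raw["gap_m"], ego_speed_mps=raw["speed_mps"])


class TimeGapPlanner:
    """Toy planner: brake when the time gap to the lead car is too small."""
    def __init__(self, min_time_gap_s: float = 2.0) -> None:
        self.min_time_gap_s = min_time_gap_s

    def plan(self, scene: Scene) -> Plan:
        if scene.ego_speed_mps <= 0:
            return Plan(target_accel_mps2=0.0)
        time_gap_s = scene.lead_gap_m / scene.ego_speed_mps
        brake = time_gap_s < self.min_time_gap_s
        return Plan(target_accel_mps2=-2.0 if brake else 0.0)


class SimpleActing:
    """Toy acting stage: splits an acceleration target into throttle/brake."""
    def act(self, plan: Plan) -> dict:
        a = plan.target_accel_mps2
        return {"throttle": max(a, 0.0), "brake": max(-a, 0.0)}


def drive_step(raw: dict, sensing: Sensing, planning: Planning, acting: Acting) -> dict:
    """Run one cycle: each stage only sees the previous stage's output."""
    return acting.act(planning.plan(sensing.perceive(raw)))


command = drive_step(
    {"gap_m": 20.0, "speed_mps": 20.0},
    SimpleSensing(), TimeGapPlanner(), SimpleActing(),
)
```

Because `drive_step` depends only on the three interfaces, a stage can be replaced (for example, a learned planner in place of `TimeGapPlanner`) without touching the others, which is the property the modular approach is after.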

How does Mobileye do Compound AI?

As outlined in Mobileye’s “A Safety Architecture for Self-Driving Systems,” achieving the safety and reliability needed for scalable autonomy requires a strong technical foundation. At Mobileye, that foundation is built on modular design, multiple independent sensing modalities, and layered redundancy.

This is Mobileye’s strategic approach to developing real-world AI systems using a blend of technologies and expertise. Three principles underpin it and, together, support scalable, real-world deployment. Each plays a critical role in how Compound AI is applied to decision making:

- Modularity: Each layer of the autonomy stack (perception, planning, and actuation) is developed and refined independently. This allows engineers to focus on specific driving functions, enabling flexibility and specialization.

- Redundancy: To ensure performance in unpredictable environments, Mobileye integrates multiple sensing modalities (camera, radar, lidar), REM crowdsourced driving intelligence, diverse AI methods, and overlapping algorithmic layers. These independent paths reinforce one another and provide resilience, not only in standard driving conditions, but also in edge cases and complex scenarios.

- Abstraction: Mobileye incorporates structured logic where it matters most. The RSS™ model encodes core safety principles that don’t need to be learned. REM™ adds a crowdsourced map and driving intelligence layer that enhances real-time perception. These abstractions reduce variance while keeping systems stable and interpretable.
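As an illustration of a safety principle that is encoded rather than learned, the sketch below computes the minimum safe longitudinal gap between a rear and a front vehicle, following the formula in the published RSS paper ("On a Formal Model of Safe and Scalable Self-driving Cars"). The function names and all default parameter values (response time, acceleration and braking limits) are assumptions chosen for illustration, not Mobileye's calibrated values.

```python
def rss_min_safe_gap(
    v_rear: float,
    v_front: float,
    response_time: float = 1.0,   # assumed response time of the rear car, s
    a_max_accel: float = 2.0,     # assumed max acceleration during response, m/s^2
    b_min_brake: float = 4.0,     # assumed min braking the rear car will apply, m/s^2
    b_max_brake: float = 8.0,     # assumed max braking of the front car, m/s^2
) -> float:
    """Minimum safe longitudinal gap in metres (speeds in m/s).

    Worst case: the front car brakes as hard as possible while the rear
    car accelerates during its response time and then brakes gently.
    """
    v_rear_after = v_rear + response_time * a_max_accel
    rear_travel = (
        v_rear * response_time
        + 0.5 * a_max_accel * response_time ** 2
        + v_rear_after ** 2 / (2 * b_min_brake)
    )
    front_travel = v_front ** 2 / (2 * b_max_brake)
    # The gap can never be negative: clamp at zero.
    return max(0.0, rear_travel - front_travel)


def is_longitudinally_safe(gap_m: float, v_rear: float, v_front: float) -> bool:
    """True if the current gap is at least the minimum safe gap."""
    return gap_m >= rss_min_safe_gap(v_rear, v_front)


# Both cars at 20 m/s (72 km/h) with the illustrative defaults above.
required_gap = rss_min_safe_gap(v_rear=20.0, v_front=20.0)  # 56.5 m
```

The check is a closed-form rule with a handful of explicit parameters, so its behavior can be inspected and tested directly instead of being inferred from training data.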

The software-hardware integration

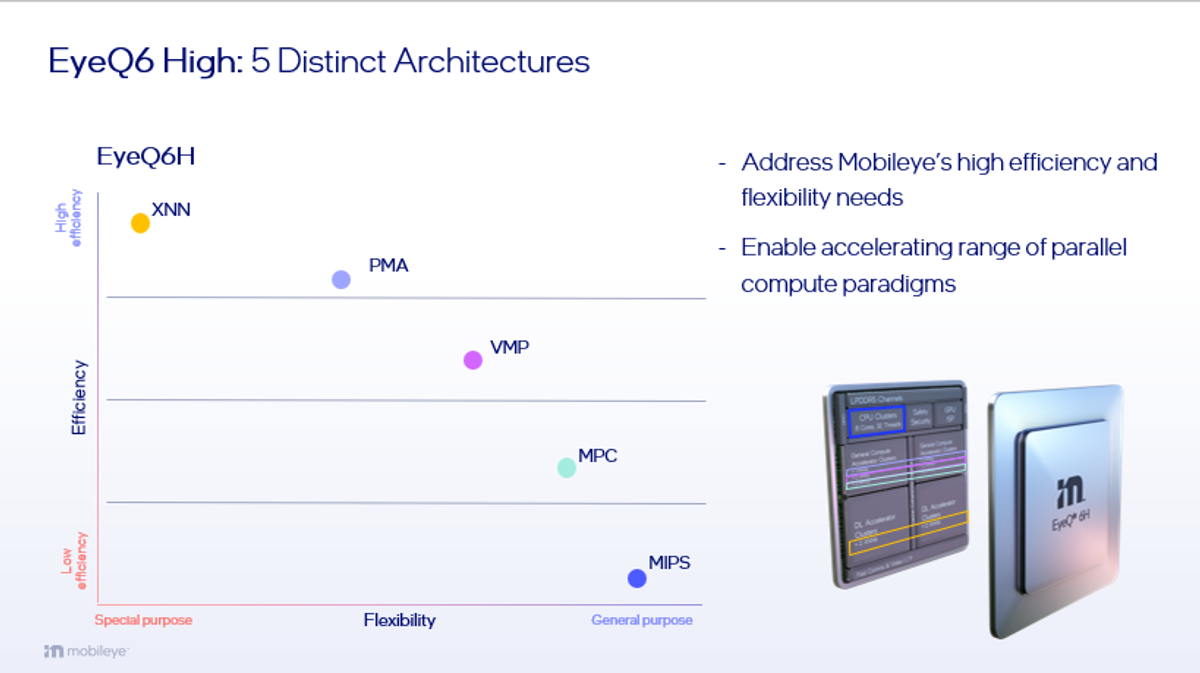

The internal design of Mobileye’s Compound AI approach reflects a careful balance between flexibility and efficiency. Mobileye’s EyeQ™6 High chip, designed for advanced automation and autonomous driving, is built with five specialized components, each striking a different balance between flexibility and specialization. Two CPUs (MPC and MIPS) provide flexible processing power, while the XNN accelerator delivers highly efficient, targeted performance. Two additional accelerators (VMP and PMA) bridge the gap between flexibility and specialization, allowing the system to adapt dynamically to different operational needs.

The EyeQ6 High is rated at 34 TOPS (INT8)—but TOPS alone aren’t enough; context and efficiency matter more. In real-world driving workloads, the chip delivers over 1,000 frames per second on pixel-labeling neural networks, demonstrating what smart architecture can achieve in practice.

Built for the road

Compound AI is both an architecture and a reflection of our ongoing mission to build solutions that prioritize safety, efficiency, and scalability. It serves as the foundation for a spectrum of autonomous systems, ranging from advanced driver assistance to fully autonomous platforms like Mobileye Chauffeur™ and Mobileye Drive™, designed for eyes-off and fully driverless mobility, respectively.

As AI continues to reshape mobility, the question is no longer whether autonomy is possible. The focus is on how to make it scalable, safe, and real. Compound AI represents Mobileye’s answer—a layered, modular, and disciplined approach grounded in real-world performance.
