January 10, 2026

Prof. Amnon Shashua at CES 2026: Robotaxi updates, breakthroughs in AI, and robotics

Major milestones, meaningful collaborations, advances in robotaxis, novel training approaches, and humanoid robotics: Mobileye’s CEO revealed several key updates as the company looks ahead to 2026 and beyond.

Mobileye kicked off CES 2026 with its annual address by Prof. Amnon Shashua, President and CEO of Mobileye.

Taking to the stage, Mobileye’s CEO revealed several key updates as the company looks ahead to 2026 and beyond. The address spanned the full ADAS-to-AV product spectrum and major milestones, advances in robotaxis with Volkswagen and MOIA, progress in vision-language models and novel training approaches, and finally Mobileye’s big news of the day: expansion into humanoid robotics through the acquisition of Mentee Robotics Ltd.

Together, these announcements mark a new phase for the company, Mobileye 3.0, in which its leadership in Physical AI is expanded across two compelling frontiers.

Company performance and solutions deployment

Prof. Amnon Shashua opened with an overview of Mobileye’s market position, expected revenue, and deployment numbers, highlighting several areas of significant growth.

Entering 2026, Mobileye has an estimated $24.5 billion revenue pipeline over the next eight years, approximately 42% higher than the $17.3 billion revenue pipeline projected in 2023. During 2025, the company secured design wins with two new OEMs that Mobileye had not partnered with in the past decade and saw nominated volumes for EyeQ™6L grow 3.5x compared to 2024.
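The growth figure follows from the two pipeline values quoted above. As a quick check, using only those two numbers (the variable names are illustrative):

```python
# Revenue pipeline figures quoted in the text, in billions of USD.
pipeline_2023 = 17.3
pipeline_2026 = 24.5

# Relative growth between the two projections.
growth = (pipeline_2026 - pipeline_2023) / pipeline_2023
print(f"{growth:.1%}")  # prints 41.6%, i.e. approximately 42%
```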

As of the end of Q3 2025, Mobileye technology was deployed in more than 230 million vehicles worldwide.

Surround ADAS is shaping the evolution of driver assist for mass market vehicle production

The keynote highlighted a significant design win, with a major U.S.-based OEM selecting the Mobileye Surround ADAS™ platform for upcoming mass-market vehicle production. This win reinforces a trend we see: basic ADAS evolving toward Surround ADAS.

Surround ADAS extends perception and assistance around the entire vehicle using multiple cameras and radars, all processed on a single EyeQ™6H system-on-chip within one ECU. This centralized architecture is designed to enable advanced driver assistance, integrated parking, and hands-off, eyes-on driving capabilities within defined highway operational domains and conditions, while reducing system complexity and cost for OEMs.

MOIA plans to deploy more than 100,000 AVs globally by 2033

Bringing robotaxis safely onto public roads requires an end-to-end ecosystem that supports continuous operation, fleet management, and real-world readiness. Highlighting the ID. Buzz program, Volkswagen Autonomous Mobility CEO Christian Senger joined Prof. Shashua on stage to discuss how large-scale deployment comes together in practice.

Volkswagen brings industry-scale vehicle production, Mobileye delivers Level 4 autonomous driving through Mobileye Drive™, and MOIA provides the fleet operations and service layer, together forming a complete operational ecosystem around the ID. Buzz platform. Initial U.S. deployments are planned for 2026, followed by broader rollouts in Europe.

Together, the partners bring significant scale to the robotaxi program, with testing in multiple locations and under differing weather and climate conditions, from sunshine to rain to snow. According to Christian Senger, MOIA aims to deploy over 100,000 self-driving vehicles based on Mobileye's self-driving system by 2033.

How AI is being integrated into autonomy

While collaborations bring business to life, the innovation itself continues to accelerate, and AI is very much at the center of how autonomy is evolving. New and more powerful models are emerging, not just for digital-only applications, but for physical systems operating in the real world. But even with extremely powerful models, core AI challenges do not disappear, including known obstacles such as hallucinations, achieving safety assurances, and the need to train at a massive scale.

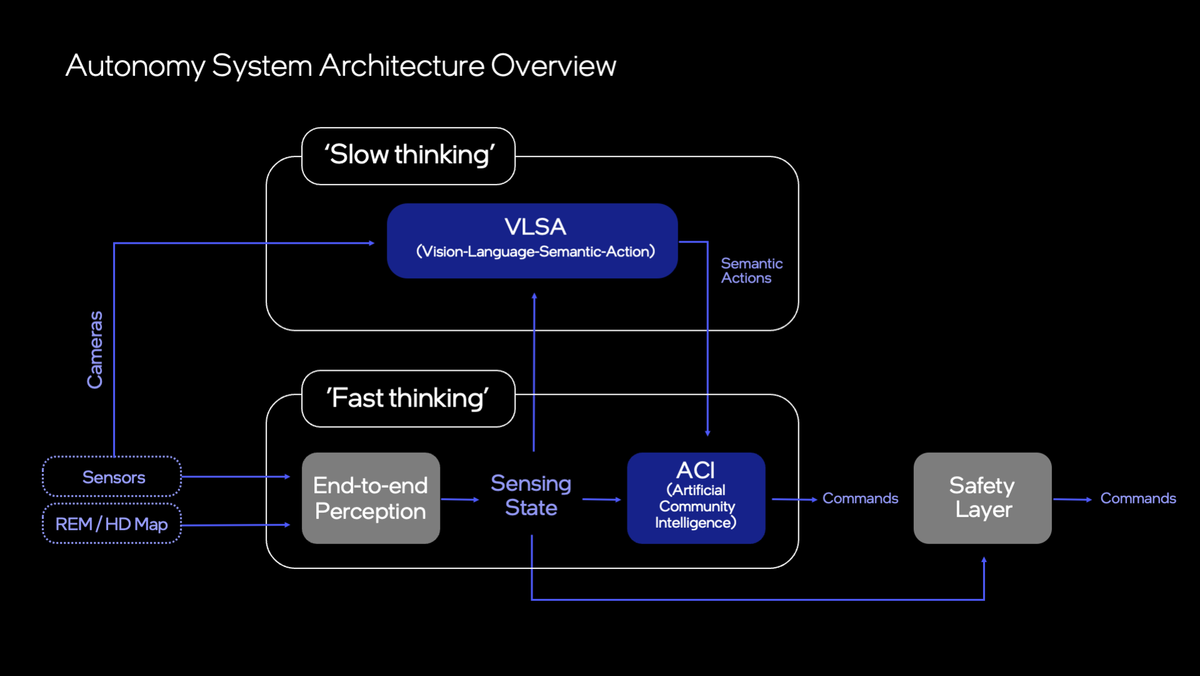

Prof. Shashua pointed to Mobileye’s architectural response to these constraints: harnessing modern AI in compute- and power-constrained real-time systems while maintaining exceptionally high accuracy, through fast and slow thinking, vision-language-semantic-action, and more.

Fast and slow thinking

An important aspect of the approach is to view driving tasks through the lens of fast thinking and slow thinking. Fast thinking refers to the system responsible for 'reflexive' decisions made at a high frequency, including the safety layer. The slow-thinking system, on the other hand, is responsible for driving decisions that require reasoning about the entire scene but don't affect safety, and can therefore run at a low frequency.
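The split between a high-frequency reflexive layer and a low-frequency reasoning layer can be illustrated with a minimal two-rate loop. This is a sketch under stated assumptions, not Mobileye's implementation: the rates, the `Guidance` shape, the gap threshold, and the functions `slow_think`, `fast_think`, and `run` are all hypothetical.

```python
from dataclasses import dataclass

FAST_HZ = 50  # hypothetical rate of the reflexive layer
SLOW_HZ = 2   # hypothetical rate of the scene-reasoning layer


@dataclass
class Guidance:
    """Scene-level advice from the slow system (hypothetical shape)."""
    target_speed_mps: float


def slow_think(tick: int) -> Guidance:
    # Placeholder for whole-scene reasoning: after one second of ticks,
    # it decides a lower target speed is appropriate.
    return Guidance(target_speed_mps=10.0 if tick >= FAST_HZ else 15.0)


def fast_think(guidance: Guidance, gap_m: float) -> float:
    # Runs on every tick. It follows the latest guidance, but a small gap
    # to the vehicle ahead overrides it regardless of what was advised.
    if gap_m < 5.0:
        return 0.0
    return guidance.target_speed_mps


def run(ticks: int, gaps: list[float]) -> tuple[list[float], int]:
    slow_every = FAST_HZ // SLOW_HZ  # fast ticks per slow update
    guidance = Guidance(target_speed_mps=15.0)
    slow_calls = 0
    commands = []
    for tick in range(ticks):
        if tick % slow_every == 0:
            guidance = slow_think(tick)  # low-frequency update
            slow_calls += 1
        commands.append(fast_think(guidance, gaps[tick]))  # every tick
    return commands, slow_calls
```

In this sketch the fast layer never waits for the slow one: it always acts on the most recent guidance available, and its own check has the final say on each tick.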

Emergent driving policy with ACI

At the center of this approach is ACI, Artificial Community Intelligence, a self-play-based framework built on sensing-state simulation rather than photorealistic imagery for driving policy. Using HD maps generated through REM™—Mobileye’s AV mapping technology—ACI places agents such as cars, pedestrians, buses, and other road users onto real road layouts, each with millions of possible driving behaviors. This allows rare, high-risk scenarios to be injected at high density and enables billions of simulated driving hours to be generated overnight.
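To make "injected at high density" concrete, the sketch below oversamples rare scenario types relative to their natural frequency when drawing simulation episodes. It is only an illustration of the general idea. The scenario names, rates, boost factors, and both functions are hypothetical and do not describe how ACI is actually built.

```python
import random
from collections import Counter

# Hypothetical scenario labels and rates; none of these numbers come from Mobileye.
NATURAL_RATES = {
    "routine_following": 0.989,
    "unprotected_left": 0.010,
    "pedestrian_dart_out": 0.001,
}
# Hypothetical boost factors for rare, high-risk scenarios.
INJECTION_BOOST = {"unprotected_left": 10.0, "pedestrian_dart_out": 300.0}


def training_weights(natural: dict, boost: dict) -> dict:
    """Scale each natural rate by its boost factor, then renormalize to sum to 1."""
    raw = {name: rate * boost.get(name, 1.0) for name, rate in natural.items()}
    total = sum(raw.values())
    return {name: weight / total for name, weight in raw.items()}


def sample_scenarios(weights: dict, n: int, seed: int = 0) -> Counter:
    """Draw n simulated episodes according to the given scenario weights."""
    rng = random.Random(seed)
    names = list(weights)
    draws = rng.choices(names, weights=[weights[k] for k in names], k=n)
    return Counter(draws)


weights = training_weights(NATURAL_RATES, INJECTION_BOOST)
counts = sample_scenarios(weights, n=10_000)
```

With these made-up numbers, the rarest scenario goes from about one episode in a thousand to roughly one in five, which is the kind of density a simulator can provide and real-world driving cannot.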

Vision-Language-Semantic-Action (VLSA)

Vision-language-semantic-action (VLSA) acts as a slow-thinking, vision-language-based model that processes deep scene semantics, almost like an adult accompanying a young driver in complex driving situations. Rather than controlling the vehicle or outputting trajectories, VLSA provides structured semantic guidance that feeds into planning, while safety-critical control remains in the fast-thinking system governed by formal safety layers.
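One way to picture "structured semantic guidance" is as a small record of facts about the scene that a planner consumes, with a separate check that can veto the result. The sketch below is an assumption-laden illustration: the `SemanticGuidance` fields, the `Maneuver` options, the gap threshold, and both functions are hypothetical and are not taken from Mobileye's system.

```python
from dataclasses import dataclass
from enum import Enum


class Maneuver(Enum):
    KEEP_LANE = "keep_lane"
    CHANGE_LEFT = "change_left"
    STOP = "stop"


@dataclass(frozen=True)
class SemanticGuidance:
    """Hypothetical slow-model output: facts about the scene, not a trajectory."""
    lane_blocked_ahead: bool = False
    officer_directing_traffic: bool = False
    note: str = ""


def plan(guidance: SemanticGuidance, left_lane_free: bool) -> Maneuver:
    """The planner treats the guidance as one input among others."""
    if guidance.officer_directing_traffic:
        return Maneuver.STOP
    if guidance.lane_blocked_ahead:
        return Maneuver.CHANGE_LEFT if left_lane_free else Maneuver.STOP
    return Maneuver.KEEP_LANE


def safety_filter(proposed: Maneuver, left_gap_m: float, min_gap_m: float = 8.0) -> Maneuver:
    """Stand-in for a formal safety layer: it can veto a plan, whatever the guidance said."""
    if proposed is Maneuver.CHANGE_LEFT and left_gap_m < min_gap_m:
        return Maneuver.STOP
    return proposed


guidance = SemanticGuidance(lane_blocked_ahead=True, note="double-parked truck")
proposed = plan(guidance, left_lane_free=True)
final = safety_filter(proposed, left_gap_m=3.0)
```

Here the guidance leads the planner to propose a lane change, and the safety check, which never sees the guidance, replaces it with a stop because the gap is too small.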

Together, this separation between fast and slow thinking, combined with large-scale simulation-driven training, creates a path to scaling autonomy without placing generative models in the safety loop or relying on human teleoperation to resolve every edge case.

From eyes-on to mind-off: the future of ADAS, AVs, and robotics

Prof. Shashua outlined the industry's evolution across three autonomous driving categories through the 2030s, spanning ADAS, consumer AVs, and robotaxis, all framed around how intelligence can safely scale. L2++ eyes-on systems, currently designed for higher-end consumer vehicles, will continue to undergo cost optimization to enable deployment as a standard feature across broader vehicle segments.

Consumer L3 systems are expected to move beyond today’s eyes-off operation toward L4 mind-off driving, with lower intervention rates and expansion beyond current highway operational design domains. Robotaxi systems, while already commercially deployable, will accelerate through sensor and cost reduction, alongside dramatically lower teleoperation-to-vehicle ratios.

Looking toward 2030, the next major shift in autonomy is the move from eyes-off to mind-off driving, where intervention becomes rare enough to support large-scale deployment.

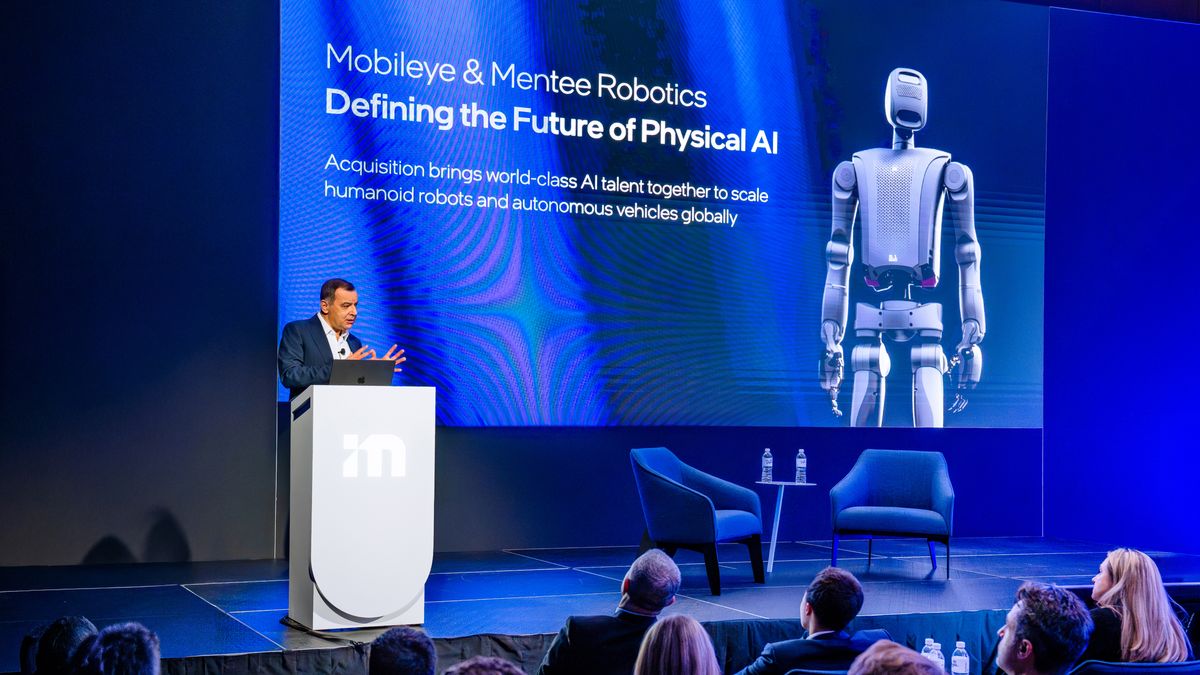

Mobileye and Mentee Robotics: A new frontier in Physical AI

Closing his keynote, Prof. Shashua announced that Mobileye is set to acquire Mentee Robotics, a company developing humanoid robots built on vertically integrated hardware and software, simulation-first learning, few-shot generalization, and highly dexterous hands, with zero teleoperation.

With this step, Mobileye extends its reach beyond vehicles and into a broader class of intelligent, physical AI systems also built for the real world.

The move reflects a deeper convergence between autonomous driving and robotics: both domains rely on a common Physical Artificial Intelligence stack that spans multimodal perception, world modeling, intent-aware planning, precision control, and decision-making under uncertainty.

For a more in-depth look, watch the entire press conference here.
